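# Virtualization

# kvm

```shell
# Configure yum
mount -t iso9660 -o ro,loop xxx /www
# mount can only mount block devices. When given a regular file, it first
# attaches the file to a block (loop) device and then mounts that device.

# Attach a file to a block device
losetup /dev/loop1 xxx.iso
losetup -a

# mount -t iso9660 -o ro /dev/loop1 /www  ->  mount -t iso9660 -o ro,loop xxx /www
# Short form
mount xxx.iso /ppp
mount -l

# Custom yum repository
createrepo xxx   # generates repodata
# baseurl must point to the directory that contains repodata
# gpgcheck verifies package signatures against the public key file (RPM-GPG-KEY);
# the packages themselves are signed with the matching private key.
```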
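The custom repository notes can be sketched as a small helper that writes the `.repo` file. This is a minimal sketch, not the author's setup: the function name `write_local_repo`, the repo id, and the output path are all hypothetical, and `gpgcheck=0` is chosen only because no signing key is described.

```shell
# Write a yum .repo file for a local repository.
# All names here are illustrative assumptions.
write_local_repo() {
    repo_id=$1      # hypothetical repo id, e.g. "local-iso"
    repo_dir=$2     # directory that contains repodata/ (createrepo output or a mounted ISO)
    out_file=$3     # where to write the .repo file
    cat > "$out_file" <<EOF
[$repo_id]
name=$repo_id
baseurl=file://$repo_dir
enabled=1
gpgcheck=0
EOF
}

# Example (assumed paths): write_local_repo local-iso /www /etc/yum.repos.d/local-iso.repo
```

With the ISO mounted on `/www`, `baseurl=file:///www` points yum at the directory that holds `repodata`.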

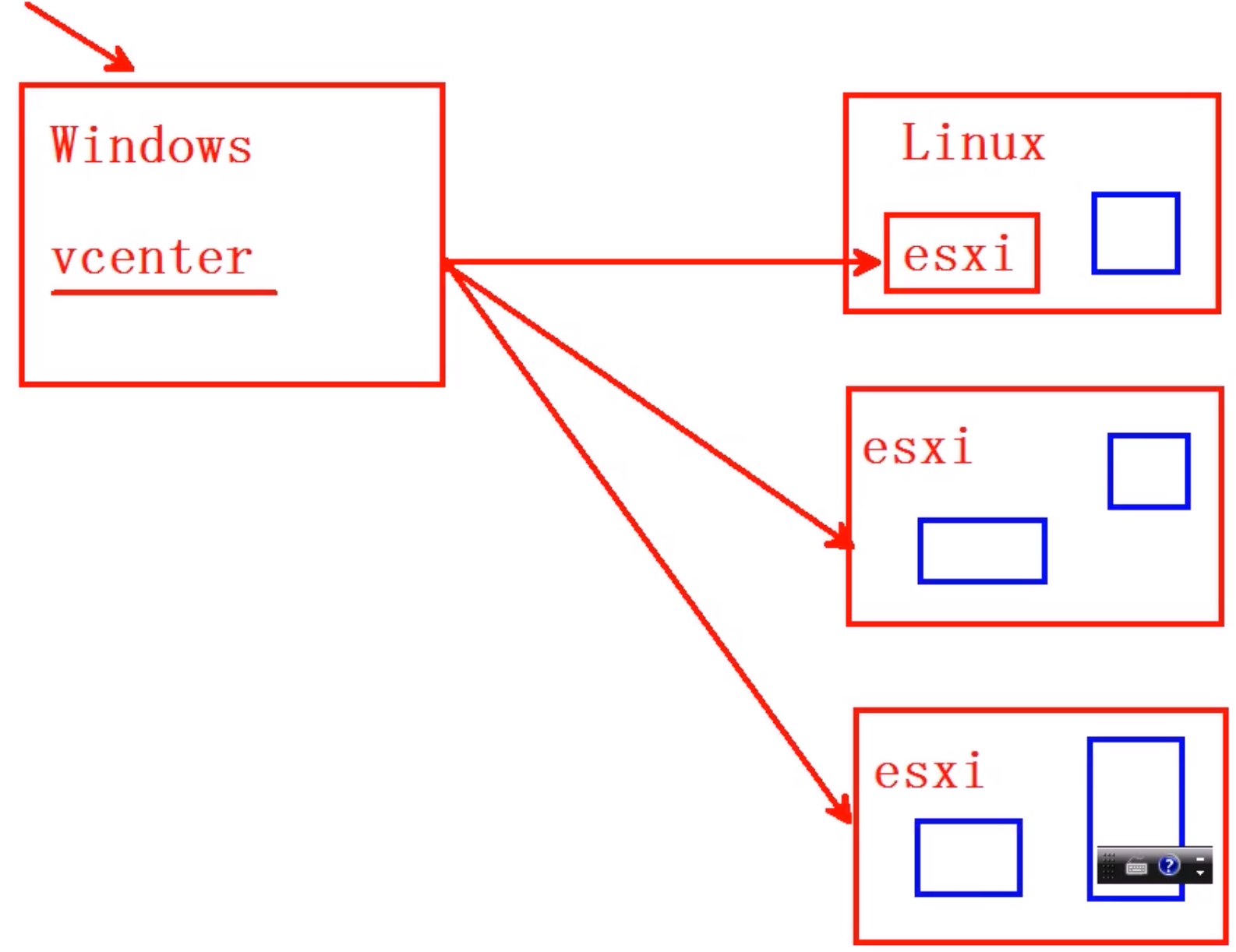

# Virtualization

kvm / vcenter / xen / hyper-v

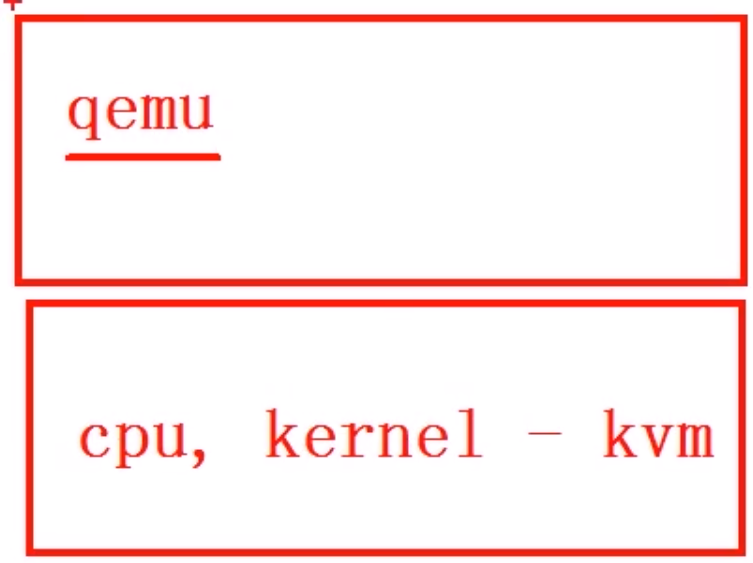

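# KVM / QEMU / LIBVIRTD

KVM is a Linux kernel module. It needs CPU support for hardware-assisted virtualization (Intel VT or AMD-V) and, for memory virtualization, Intel EPT or AMD RVI.

QEMU is a machine emulator. It talks to the kernel's KVM module through ioctl calls to provide hardware virtualization support.

Libvirt is a management interface and toolset for virtualization. It provides the client programs virsh, virt-install, virt-manager, and virt-viewer for user interaction.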

```shell
[root@localhost usr]# ps -efww | grep qemu
qemu 4075 1 99 06:41 ? 00:13:46 /usr/libexec/qemu-kvm -name generic -S -machine pc-i440fx-rhel7.0.0,accel=tcg,usb=off,dump-guest-core=off -m 1024 -realtime mlock=off -smp 1,sockets=1,cores=1,threads=1 -uuid 7693d5c3-1153-43aa-a38e-4093f30292a0 -no-user-config -nodefaults -chardev socket,id=charmonitor,path=/var/lib/libvirt/qemu/domain-3-generic/monitor.sock,server,nowait -mon chardev=charmonitor,id=monitor,mode=control -rtc base=utc,driftfix=slew -global kvm-pit.lost_tick_policy=delay -no-hpet -no-reboot -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 -boot strict=on -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x5.0x7 -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x5 -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x5.0x1 -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x5.0x2 -device virtio-serial-pci,id=virtio-serial0,bus=pci.0,addr=0x6 -drive file=/var/lib/libvirt/images/generic.qcow2,format=qcow2,if=none,id=drive-ide0-0-0 -device ide-hd,bus=ide.0,unit=0,drive=drive-ide0-0-0,id=ide0-0-0,bootindex=2 -drive file=/root/CentOS-7-x86_64-Minimal-2009.iso,format=raw,if=none,id=drive-ide0-0-1,readonly=on -device ide-cd,bus=ide.0,unit=1,drive=drive-ide0-0-1,id=ide0-0-1,bootindex=1 -netdev tap,fd=26,id=hostnet0 -device rtl8139,netdev=hostnet0,id=net0,mac=52:54:00:3d:35:10,bus=pci.0,addr=0x3 -chardev pty,id=charserial0 -device isa-serial,chardev=charserial0,id=serial0 -chardev spicevmc,id=charchannel0,name=vdagent -device virtserialport,bus=virtio-serial0.0,nr=1,chardev=charchannel0,id=channel0,name=com.redhat.spice.0 -spice port=5900,addr=127.0.0.1,disable-ticketing,image-compression=off,seamless-migration=on -vga qxl -global qxl-vga.ram_size=67108864 -global qxl-vga.vram_size=67108864 -global qxl-vga.vgamem_mb=16 -global qxl-vga.max_outputs=1 -device intel-hda,id=sound0,bus=pci.0,addr=0x4 -device hda-duplex,id=sound0-codec0,bus=sound0.0,cad=0 -chardev spicevmc,id=charredir0,name=usbredir -device usb-redir,chardev=charredir0,id=redir0,bus=usb.0,port=1 -chardev spicevmc,id=charredir1,name=usbredir -device usb-redir,chardev=charredir1,id=redir1,bus=usb.0,port=2 -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x7 -msg timestamp=on
root 4863 2914 0 06:53 pts/0 00:00:00 grep --color=auto qemu

# Required components
yum install qemu-kvm libvirt-daemon libvirt-client libvirt-daemon-driver-qemu -y
systemctl enable --now libvirtd

# Optional components
# virt-install   OS installation tool
# virt-manager   graphical management tool
# virt-v2v       virtual machine migration tool
# virt-p2v       physical machine migration tool
```

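Components of a virtual machine

System device emulation (QEMU)

Virtual machine management program (LIBVIRT)

An XML file (the virtual machine's configuration declaration)

Location: /etc/libvirt/qemu/

A disk image file (the virtual machine's hard disk)

Location: /var/lib/libvirt/images/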

```shell
[root@localhost usr]# cd /etc/libvirt/qemu/
[root@localhost qemu]# ls
generic.xml  networks
[root@localhost qemu]# cat generic.xml
<!--
WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE
OVERWRITTEN AND LOST. Changes to this xml configuration should be made using:
  virsh edit generic
or other application using the libvirt API.
-->

<domain type='qemu'>
  <name>generic</name>
  <uuid>7693d5c3-1153-43aa-a38e-4093f30292a0</uuid>
  <memory unit='KiB'>1048576</memory>
  <currentMemory unit='KiB'>1048576</currentMemory>
  <vcpu placement='static'>1</vcpu>
  <os>
    <type arch='x86_64' machine='pc-i440fx-rhel7.0.0'>hvm</type>
    <boot dev='hd'/>
  </os>
  <features>
    <acpi/>
    <apic/>
  </features>
  <clock offset='utc'>
    <timer name='rtc' tickpolicy='catchup'/>
    <timer name='pit' tickpolicy='delay'/>
    <timer name='hpet' present='no'/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>destroy</on_crash>
  <pm>
    <suspend-to-mem enabled='no'/>
    <suspend-to-disk enabled='no'/>
  </pm>
  <devices>
    <emulator>/usr/libexec/qemu-kvm</emulator>
    <disk type='file' device='disk'>
      <driver name='qemu' type='qcow2'/>
      <source file='/var/lib/libvirt/images/generic.qcow2'/>
      <target dev='hda' bus='ide'/>
      <address type='drive' controller='0' bus='0' target='0' unit='0'/>
    </disk>
    <disk type='file' device='cdrom'>
      <driver name='qemu' type='raw'/>
      <target dev='hdb' bus='ide'/>
      <readonly/>
      <address type='drive' controller='0' bus='0' target='0' unit='1'/>
    </disk>
    <controller type='usb' index='0' model='ich9-ehci1'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x7'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci1'>
      <master startport='0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0' multifunction='on'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci2'>
      <master startport='2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x1'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci3'>
      <master startport='4'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x2'/>
    </controller>
    <controller type='pci' index='0' model='pci-root'/>
    <controller type='ide' index='0'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
    </controller>
    <controller type='virtio-serial' index='0'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
    </controller>
    <interface type='network'>
      <mac address='52:54:00:3d:35:10'/>
      <source network='default'/>
      <model type='rtl8139'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
    </interface>
    <serial type='pty'>
      <target type='isa-serial' port='0'>
        <model name='isa-serial'/>
      </target>
    </serial>
    <console type='pty'>
      <target type='serial' port='0'/>
    </console>
    <channel type='spicevmc'>
      <target type='virtio' name='com.redhat.spice.0'/>
      <address type='virtio-serial' controller='0' bus='0' port='1'/>
    </channel>
    <input type='mouse' bus='ps2'/>
    <input type='keyboard' bus='ps2'/>
    <graphics type='spice' autoport='yes'>
      <listen type='address'/>
      <image compression='off'/>
    </graphics>
    <sound model='ich6'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
    </sound>
    <video>
      <model type='qxl' ram='65536' vram='65536' vgamem='16384' heads='1' primary='yes'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
    </video>
    <redirdev bus='usb' type='spicevmc'>
      <address type='usb' bus='0' port='1'/>
    </redirdev>
    <redirdev bus='usb' type='spicevmc'>
      <address type='usb' bus='0' port='2'/>
    </redirdev>
    <memballoon model='virtio'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
    </memballoon>
  </devices>
</domain>

[root@localhost images]# ls
generic.qcow2
```

# virsh commands

```shell
# Two ways to use virsh: interactive shell or one-shot commands
[root@localhost images]# virsh
Welcome to virsh, the virtualization interactive terminal.

Type:  'help' for help with commands
       'quit' to quit

virsh # list
 Id    Name                           State
----------------------------------------------------
 3     generic                        running

virsh # exit

[root@localhost images]# virsh list          # list running virtual machines
 Id    Name                           State
----------------------------------------------------
 3     generic                        running

[root@localhost images]# virsh list --all    # list all virtual machines
 Id    Name                           State
----------------------------------------------------
 3     generic                        running

[root@localhost images]# virsh start/reboot/shutdown xxx   # start, reboot, or shut down a VM
[root@localhost images]# virsh destroy xxx                 # force a VM off
[root@localhost images]# virsh define/undefine xxx         # create a VM from an XML file / delete a VM definition
[root@localhost images]# virsh console xxx                 # attach to the VM console; Ctrl + ] exits the console
[root@localhost images]# virsh edit xxx                    # adjust VM parameters
[root@localhost images]# virsh autostart xxx               # start the VM at host boot
[root@localhost images]# virsh domiflist xxx               # list the VM's network interfaces
[root@localhost images]# virsh domblklist xxx              # list the VM's disks

# Virtual switch (network) commands
[root@localhost images]# virsh net-list --all
 Name                 State      Autostart     Persistent
----------------------------------------------------------
 default              active     yes           yes

[root@localhost ~]# virsh net-start
[root@localhost ~]# virsh net-destroy
[root@localhost ~]# virsh net-define
[root@localhost ~]# virsh net-undefine
[root@localhost ~]# virsh net-edit
[root@localhost ~]# virsh net-autostart
```

# Virtual machine disk management

```shell
# qemu-img is the disk management command for virtual machines.
# It supports many disk formats, such as raw, qcow2, vdi, and vmdk.

qemu-img create -f qcow2 aa.img 50G
qemu-img create -b haha.img -f qcow2 111.img   # -b: use a backing (template) file
qemu-img info aa.img

qemu-img convert   # convert between disk formats
qemu-img info
qemu-img resize
```

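qcow2 images take little space: storage is allocated on demand, so creating a disk does not consume its full virtual size up front.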

```shell
[root@localhost ~]# cd /var/lib/libvirt/images/
[root@localhost images]# qemu-img create -f qcow2 node_base 20G
Formatting 'node_base', fmt=qcow2 size=21474836480 encryption=off cluster_size=65536 lazy_refcounts=off

[root@localhost images]# qemu-img create -b node_base -f qcow2 node_node.img 20G
Formatting 'node_node.img', fmt=qcow2 size=21474836480 backing_file='node_base' encryption=off cluster_size=65536 lazy_refcounts=off

[root@localhost images]# qemu-img info node_base
image: node_base
file format: qcow2
virtual size: 20G (21474836480 bytes)
disk size: 196K
cluster_size: 65536
Format specific information:
    compat: 1.1
    lazy refcounts: false

[root@localhost images]# qemu-img info node_node.img
image: node_node.img
file format: qcow2
virtual size: 20G (21474836480 bytes)
disk size: 196K
cluster_size: 65536
backing file: node_base
Format specific information:
    compat: 1.1
    lazy refcounts: false
```

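COW

Copy On Write

The front-end disk maps directly onto the data of the original (backing) disk

When data has to be modified, the old data is first copied into the front-end disk, and the modification is then applied to the front-end disk

The original disk always stays read-only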
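The copy-on-write rules above can be illustrated with a toy model in plain shell. This is only a sketch of the idea and not how qcow2 is implemented: each "block" is a file, and the function names `cow_read` and `cow_patch` are made up for this example.

```shell
# Toy copy-on-write model: BASE_DIR plays the read-only original disk,
# OVERLAY_DIR plays the front-end disk. Each block is one file.

# cow_read BASE_DIR OVERLAY_DIR BLOCK
# Reads come from the overlay if the block was ever written, else from the base.
cow_read() {
    if [ -f "$2/$3" ]; then
        cat "$2/$3"
    else
        cat "$1/$3"
    fi
}

# cow_patch BASE_DIR OVERLAY_DIR BLOCK OLD NEW
# On the first write the old block is copied into the overlay,
# then the change is applied to the overlay copy only.
cow_patch() {
    [ -f "$2/$3" ] || cp "$1/$3" "$2/$3"
    sed "s/$4/$5/" "$2/$3" > "$2/$3.tmp" && mv "$2/$3.tmp" "$2/$3"
}
```

After `cow_patch`, reads of that block return the modified data while the file in the base directory is unchanged, which mirrors the backing-file relationship between `node_base` and `node_node.img`.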

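# Cloning a virtual machine

Copy an existing XML file to zzz.xml

Change name so that it matches the XML file name

Change the disk file path. Also remove the `<uuid>` element (and the interface `<mac>` element) so that libvirt generates new values; otherwise the clone conflicts with the source virtual machine

Then run: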

virsh define zzz.xml

virsh start zzz

virsh list

virsh console zzz
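The XML edits in the cloning steps can be scripted with `sed`. This is a minimal sketch under stated assumptions: the helper name `clone_domain_xml` is hypothetical, the source XML has one element per line (as libvirt writes it) and a single file-backed disk, and dropping `<uuid>` and `<mac>` relies on libvirt generating fresh values when they are absent.

```shell
# clone_domain_xml SRC_XML NEW_NAME NEW_DISK
# Prints a modified copy of the domain XML on stdout.
clone_domain_xml() {
    src_xml=$1
    new_name=$2
    new_disk=$3
    sed -e "s#<name>.*</name>#<name>$new_name</name>#" \
        -e "s#<source file='[^']*'/>#<source file='$new_disk'/>#" \
        -e '/<uuid>.*<\/uuid>/d' \
        -e '/<mac address=/d' \
        "$src_xml"
}

# Example (assumed paths):
#   clone_domain_xml /etc/libvirt/qemu/generic.xml zzz /var/lib/libvirt/images/zzz.img > zzz.xml
```

The resulting `zzz.xml` is what `virsh define zzz.xml` would consume; the disk image itself still has to be created separately, for example as a qcow2 overlay on a backing file.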

# Expanding a disk

Disk -> partition -> file system

A disk can only be grown, never shrunk

1. Grow the virtual disk

virsh blockresize --path /var/lib/libvirt/images/node1.img --size 50G node1

Run the following commands inside the virtual machine

2. Grow the partition

growpart /dev/vda 1

3. Grow the file system

xfs_growfs /dev/vda1

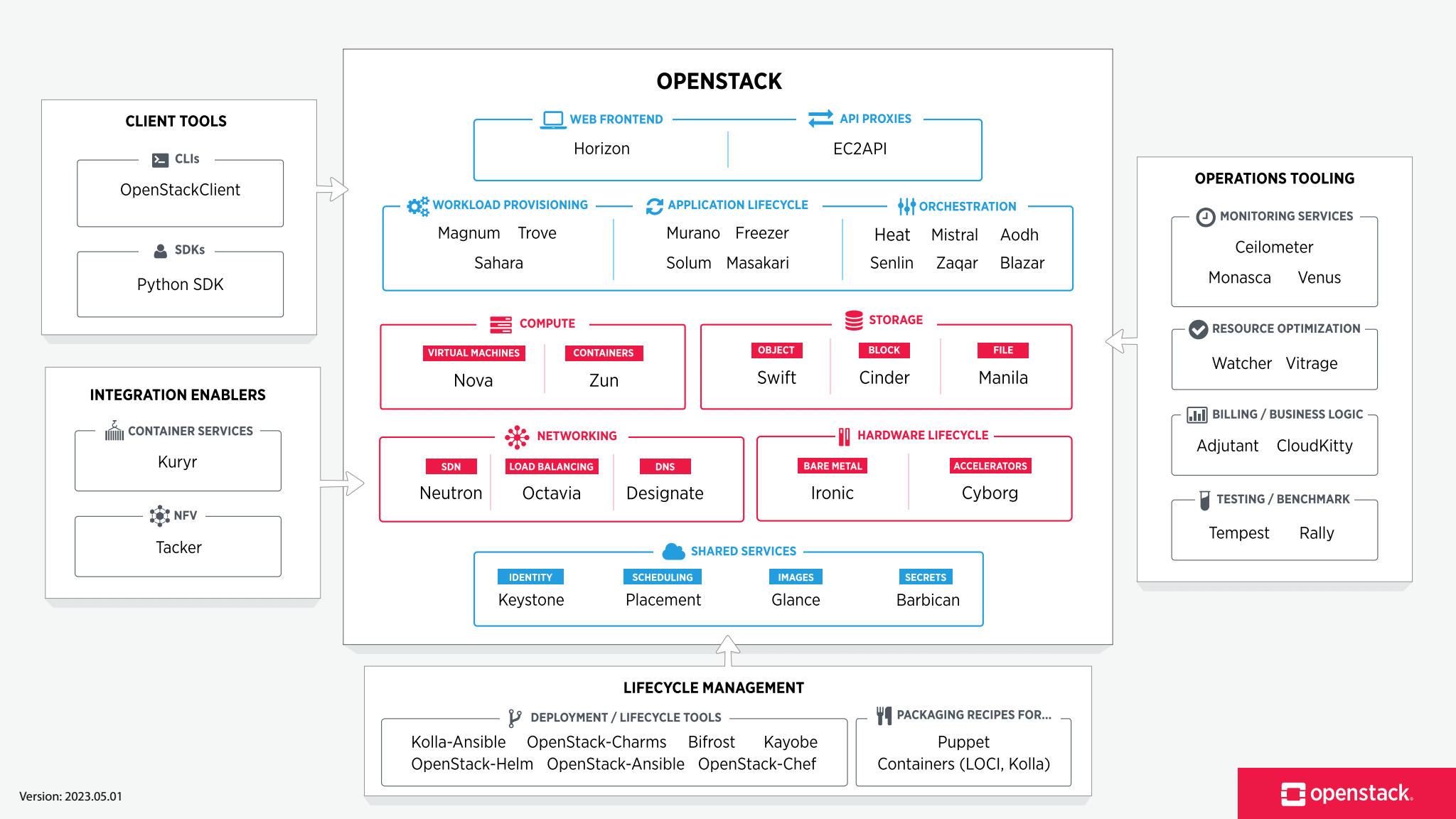

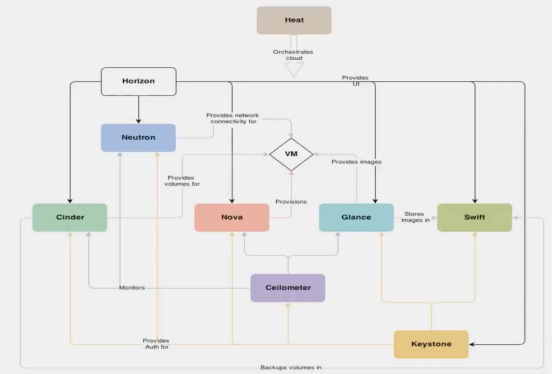

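# OpenStack

# OpenStack components

Keystone

A centralized identity service that provides authentication and authorization for the other services

Also provides a centralized catalog (directory) service

Supports several authentication methods, such as password authentication, token authentication, and AWS-style login

Provides SSO authentication for users and other services

Neutron

A software-defined networking service

Used to create networks, subnets, and routers, and to manage floating IP addresses

Can implement virtual switches and virtual routers

Can be used to create VPNs within a project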

# Deployment

Three hosts with 8 cores and 16 GB of memory each: one with the hostname openstack, the other two named nova1 and nova2

```shell
# Run the following on every node
vim /etc/resolv.conf
nameserver 8.8.8.8

vim /etc/hosts
192.168.13.201 openstack
192.168.13.202 nova1
192.168.13.202 nova2   # NOTE: same address as nova1 in the original notes; nova2 needs its own IP

yum install chrony -y
vim /etc/chrony.conf
server ntp2.aliyun.com minpoll 4 maxpoll 10 iburst
server ntp.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp.aliyun.com minpoll 4 maxpoll 10 iburst
server ntp1.aliyun.com minpoll 4 maxpoll 10 iburst
server ntp1.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp10.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp11.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp12.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp2.aliyun.com minpoll 4 maxpoll 10 iburst
server ntp2.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp3.aliyun.com minpoll 4 maxpoll 10 iburst
server ntp3.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp4.aliyun.com minpoll 4 maxpoll 10 iburst
server ntp4.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp5.aliyun.com minpoll 4 maxpoll 10 iburst
server ntp5.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp6.aliyun.com minpoll 4 maxpoll 10 iburst
server ntp6.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp7.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp8.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst
server ntp9.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst

systemctl restart chronyd
chronyc sources -v

yum install qemu-kvm libvirt-daemon libvirt-daemon-driver-qemu libvirt-client python-setuptools -y
yum remove NetworkManager* -y
yum remove firewalld* -y

vim /etc/sysconfig/network-scripts/ifcfg-ens33
BOOTPROTO=static

systemctl restart network
```
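Because the `/etc/hosts` entries have to be identical on every node, it can help to add them with a small idempotent helper rather than by hand. The following is a sketch only: `add_host_entry` is a hypothetical name, and the optional third argument exists so the function can be tried against a scratch file first.

```shell
# add_host_entry IP NAME [HOSTS_FILE]
# Appends "IP NAME" unless NAME is already present as a host name in the file.
add_host_entry() {
    ip=$1
    name=$2
    hosts_file=${3:-/etc/hosts}
    grep -qE "[[:space:]]$name(\$|[[:space:]])" "$hosts_file" 2>/dev/null && return 0
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Example: add_host_entry 192.168.13.201 openstack
```

Running it twice with the same name leaves a single entry, so the same script can be replayed on all three hosts.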

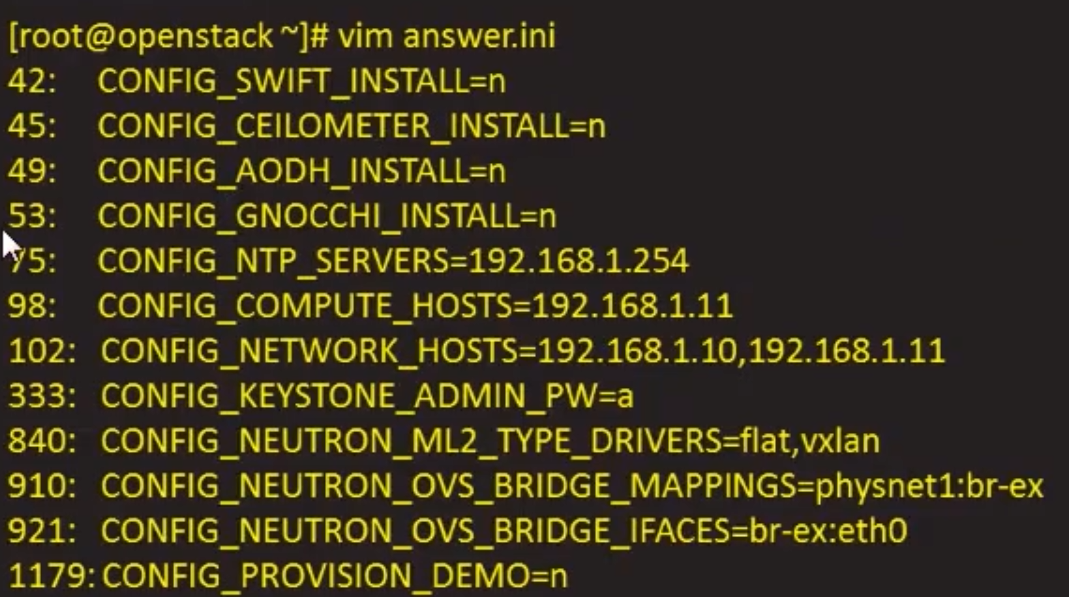

```shell
# On the openstack node
bash <(curl -sSL https://gitee.com/SuperManito/LinuxMirrors/raw/main/ChangeMirrors.sh)
# Choose the USTC mirror.
# Do not install the EPEL repository, because it makes the later packstack installation fail.
# Replace only the base repositories, not the OpenStack-related ones, to avoid timeouts when fetching some packages later.
# Use the HTTP protocol
# Update the packages
# Clear the cache of downloaded packages

yum install centos-release-openstack-train
yum install openstack-packstack

packstack --gen-answer-file=answer.ini

# Edit these values in answer.ini before running the installer
CONFIG_CONTROLLER_HOST=192.168.13.201
CONFIG_COMPUTE_HOSTS=192.168.13.202
# List of servers on which to install the network service such as
# Compute networking (nova network) or OpenStack Networking (neutron).
CONFIG_NETWORK_HOSTS=192.168.13.201,192.168.13.202

packstack --answer-file=answer.ini
```

```shell
[root@openstack ~]# . keystonerc_admin
[root@openstack ~(keystone_admin)]# openstack user list
+----------------------------------+-----------+
| ID                               | Name      |
+----------------------------------+-----------+
| b88c0404f054445ab8c099673ecdb0f9 | admin     |
| 592cf7de5ffe4ec69bec764c6e6a3506 | glance    |
| a62eaa55c4c44dbf9c559b471bde2394 | cinder    |
| 7edbdc229b8f4286bde2aa477521b2ad | nova      |
| 51b14b54bc124655b6bb29fb2b0b8458 | placement |
| 4facc9243f4c471a91bc9a9d5b5c45d0 | neutron   |
+----------------------------------+-----------+

# Load the credentials in a subshell, so that exit drops them without closing the terminal
[root@openstack ~]# bash
[root@openstack ~]# . keystonerc_admin
[root@openstack ~(keystone_admin)]#
[root@openstack ~(keystone_admin)]# exit
exit

# Show help
[root@openstack ~]# openstack help user
Command "user" matches:
  user create
  user delete
  user list
  user password set
  user set
  user show
```

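# Project management

Project: an isolated set of resources and objects, managed by a group of associated users

In older releases this was also called a tenant

Depending on the requirements, a project can correspond to an organization, a company, a customer, and so on

A project can have multiple users, and the users in a project can create and manage virtual resources in that project

Users with the admin role can create projects

Project information is stored in MariaDB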

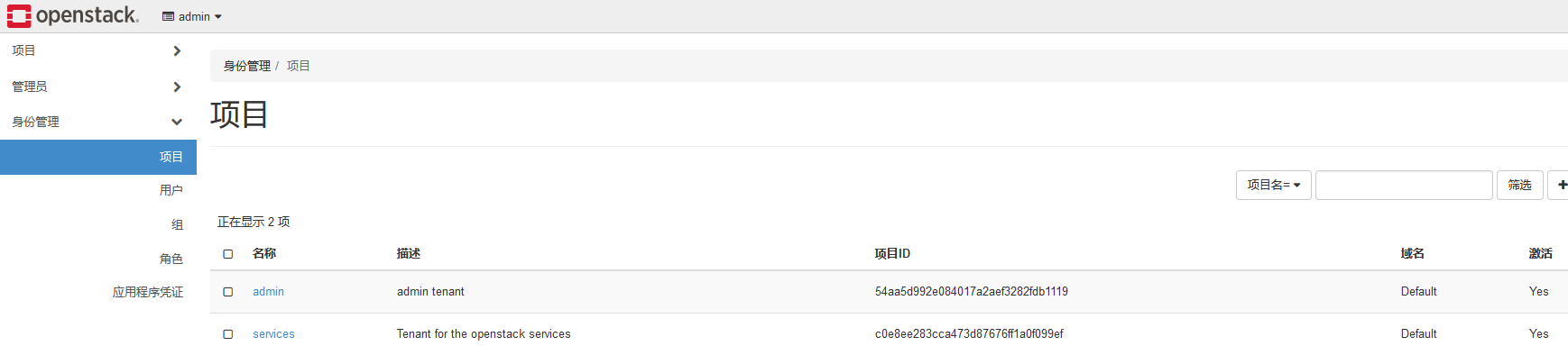

By default, an OpenStack installed by packstack has two separate projects

admin: the project created for the admin account

services: associated with the installed services

```shell
# Create a project
[root@openstack ~(keystone_admin)]# openstack project create myproject

[root@openstack ~(keystone_admin)]# openstack project list
+----------------------------------+-----------+
| ID                               | Name      |
+----------------------------------+-----------+
| 54aa5d992e084017a2aef3282fdb1119 | admin     |
| 735ea2227d2e4c509af906f8e519c95d | myproject |
| c0e8ee283cca473d87676ff1a0f099ef | services  |
+----------------------------------+-----------+

[root@openstack ~(keystone_admin)]# openstack project show myproject
+-------------+----------------------------------+
| Field       | Value                            |
+-------------+----------------------------------+
| description |                                  |
| domain_id   | default                          |
| enabled     | True                             |
| id          | 735ea2227d2e4c509af906f8e519c95d |
| is_domain   | False                            |
| name        | myproject                        |
| options     | {}                               |
| parent_id   | default                          |
| tags        | []                               |
+-------------+----------------------------------+

# Enable or disable a project (--enable / --disable)
[root@openstack ~(keystone_admin)]# openstack project set --disable myproject

# Show the project quota
[root@openstack ~(keystone_admin)]# nova quota-show --tenant myproject

# Update the number of vCPUs
[root@openstack ~(keystone_admin)]# nova quota-update --cores 30 myproject

# Delete the project
[root@openstack ~(keystone_admin)]# openstack project delete myproject
```

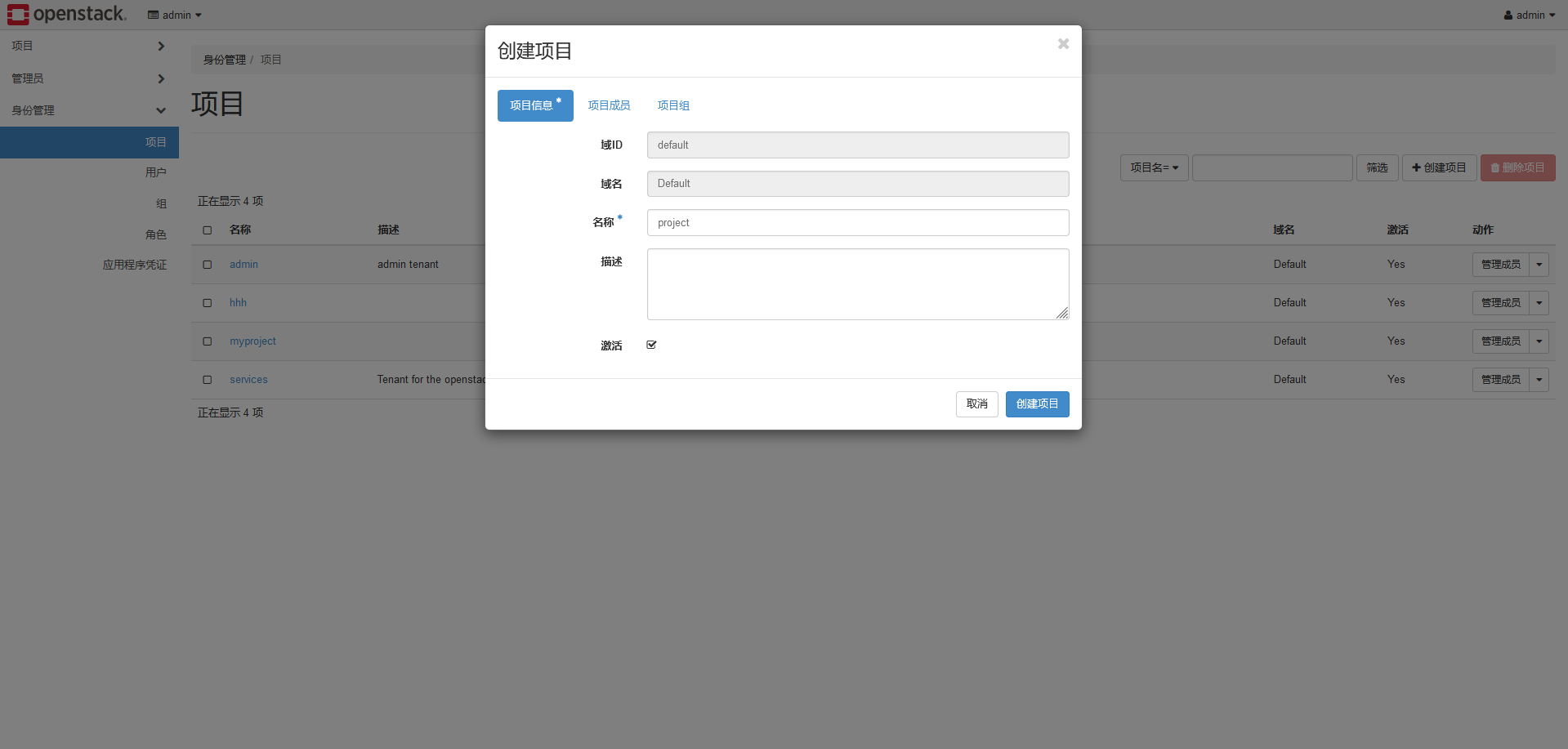

创建项目

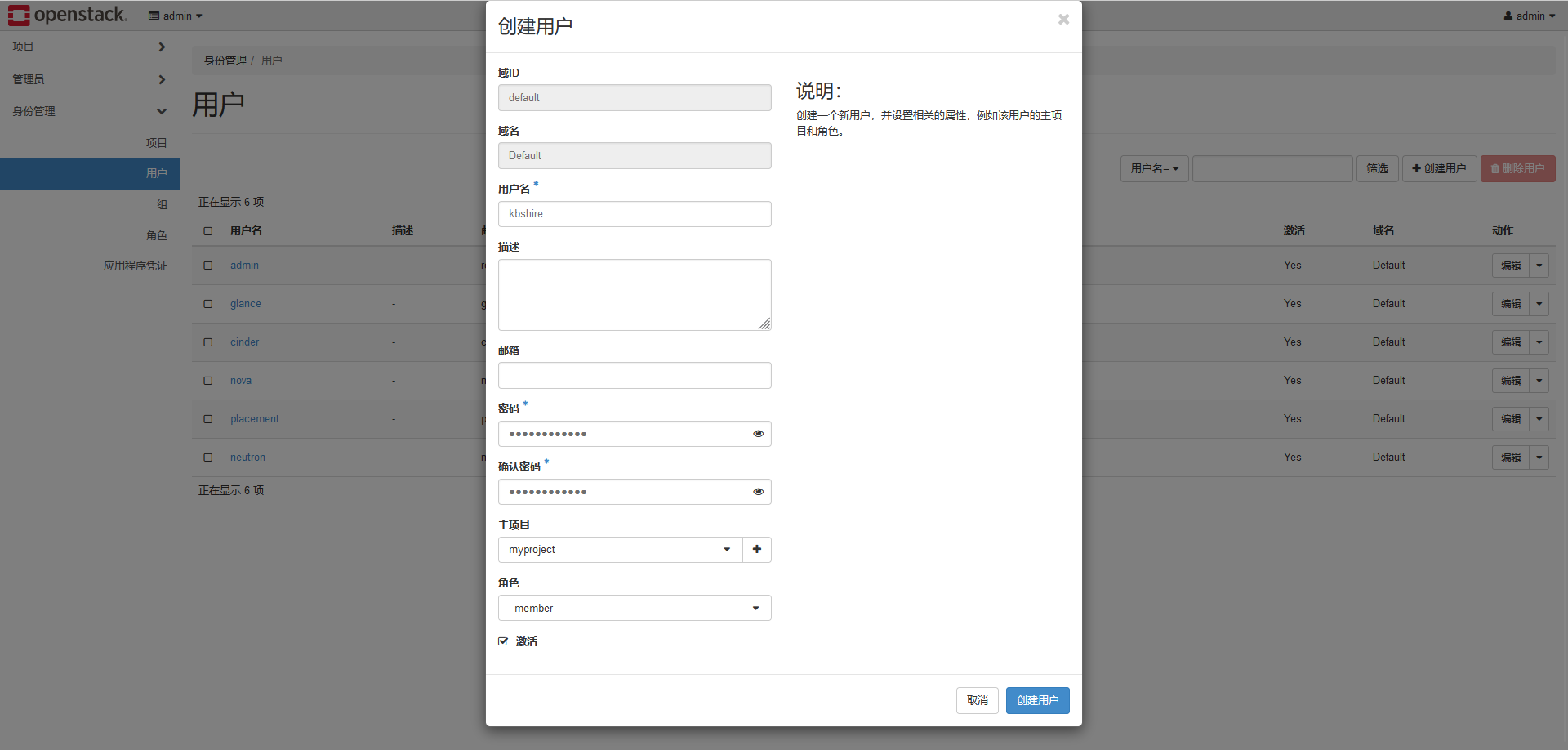

创建用户

修改密码

```shell
# Change the password
[root@openstack ~(keystone_admin)]# openstack user set --password 123456 kbshire
# Remove the user's _member_ role in myproject
[root@openstack ~(keystone_admin)]# openstack role remove --project myproject --user kbshire _member_
# Delete the user
[root@openstack ~(keystone_admin)]# openstack user delete kbshire
```

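Resource quotas

Security group rules: the number of rules available to each project

Cores: the number of vCPU cores available to each project

Fixed IP addresses: the number of fixed IPs available to each project

Floating IP addresses: the number of floating IPs available to each project

Injected file content size: the maximum content size of an injected file in each project

Injected file path: the maximum path length of an injected file in each project

Injected files: the number of files that may be injected in each project

Instances: the number of virtual machine instances each project may create

Key pairs: the number of key pairs each project may create

Metadata: the number of metadata items available to each project

Memory: the maximum memory available to each project

Security groups: the number of security groups each project may create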

```shell
# List the default project quotas
[root@openstack ~(keystone_admin)]# nova quota-defaults
# List the quotas of myproject
[root@openstack ~(keystone_admin)]# nova quota-show --tenant myproject
# Change the floating IP quota
[root@openstack ~(keystone_admin)]# nova quota-update --floating-ips 20 myproject
```

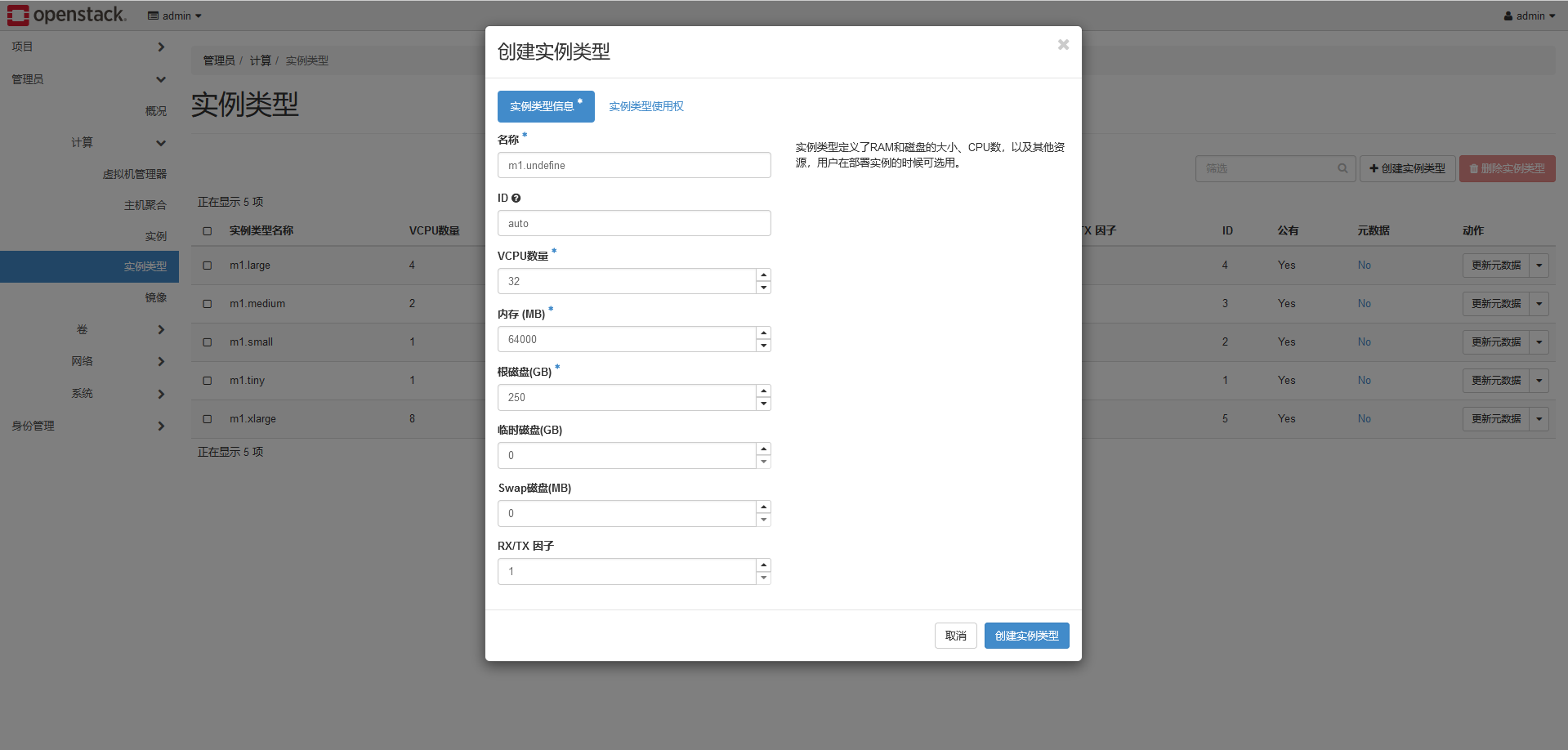

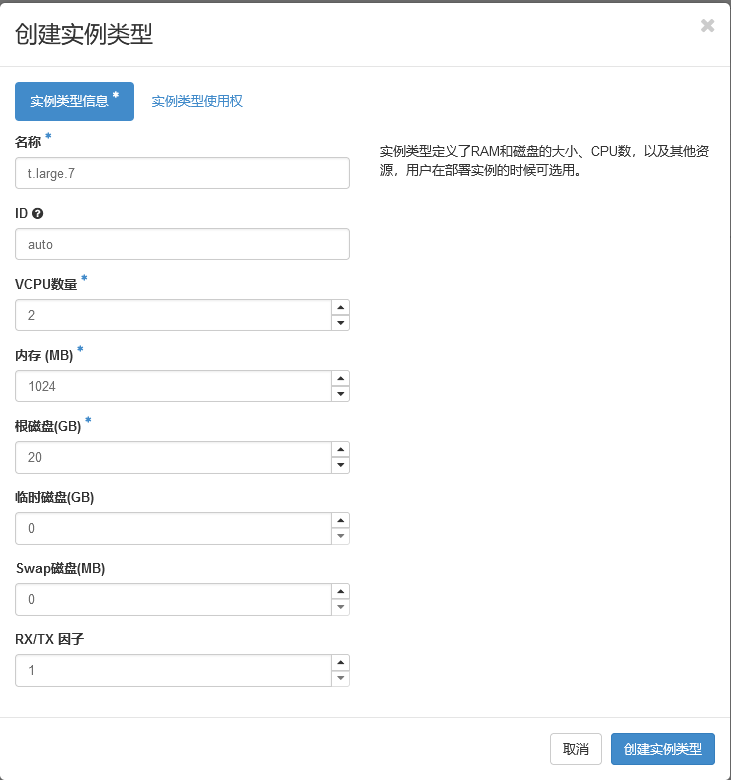

Flavors (instance types)

A flavor is a resource template.

It defines the resources an instance can use, such as memory size, disk capacity, and the number of CPU cores.

OpenStack provides several default flavors.

Administrators can also define custom flavors.

```shell
# List all flavors
[root@openstack ~(keystone_admin)]# openstack flavor list
# Create a flavor
[root@openstack ~(keystone_admin)]# openstack flavor create --public demo.tiny --id auto --ram 512 --disk 10 --vcpus 1
# Delete a flavor
[root@openstack ~(keystone_admin)]# openstack flavor delete demo.tiny
```

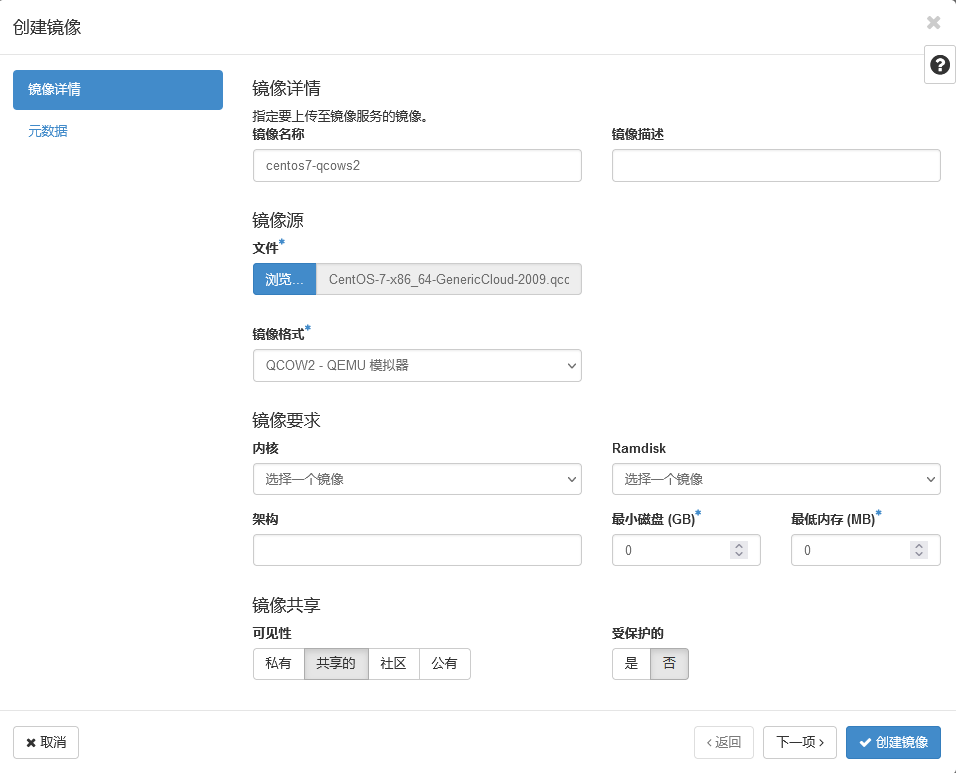

在红帽 Openstack 平台中,镜像指的是虚拟磁盘文件

磁盘文件中应该已经安装了可启动的操作系统

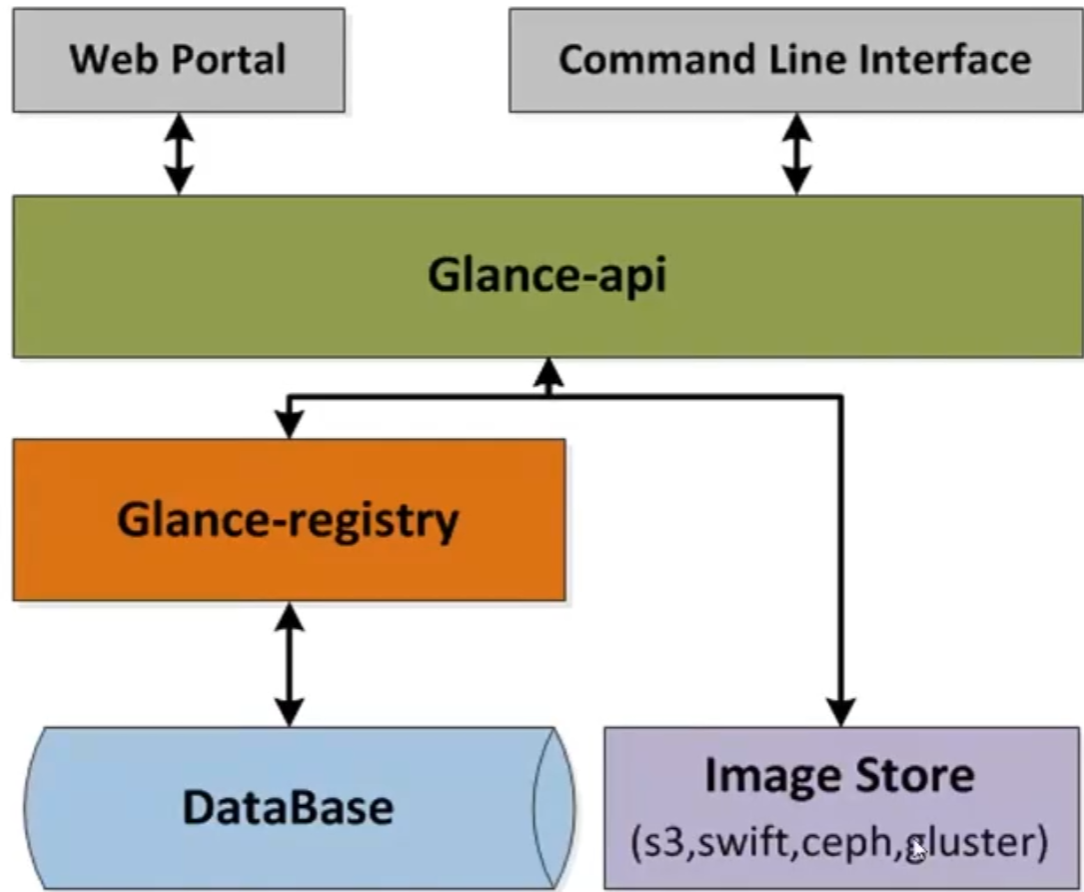

镜像管理功能由 Glance 服务提供

它形成了创建虚拟机实例最底层的块结构

镜像可以由用户上传,也可以通过红帽官方站点下载

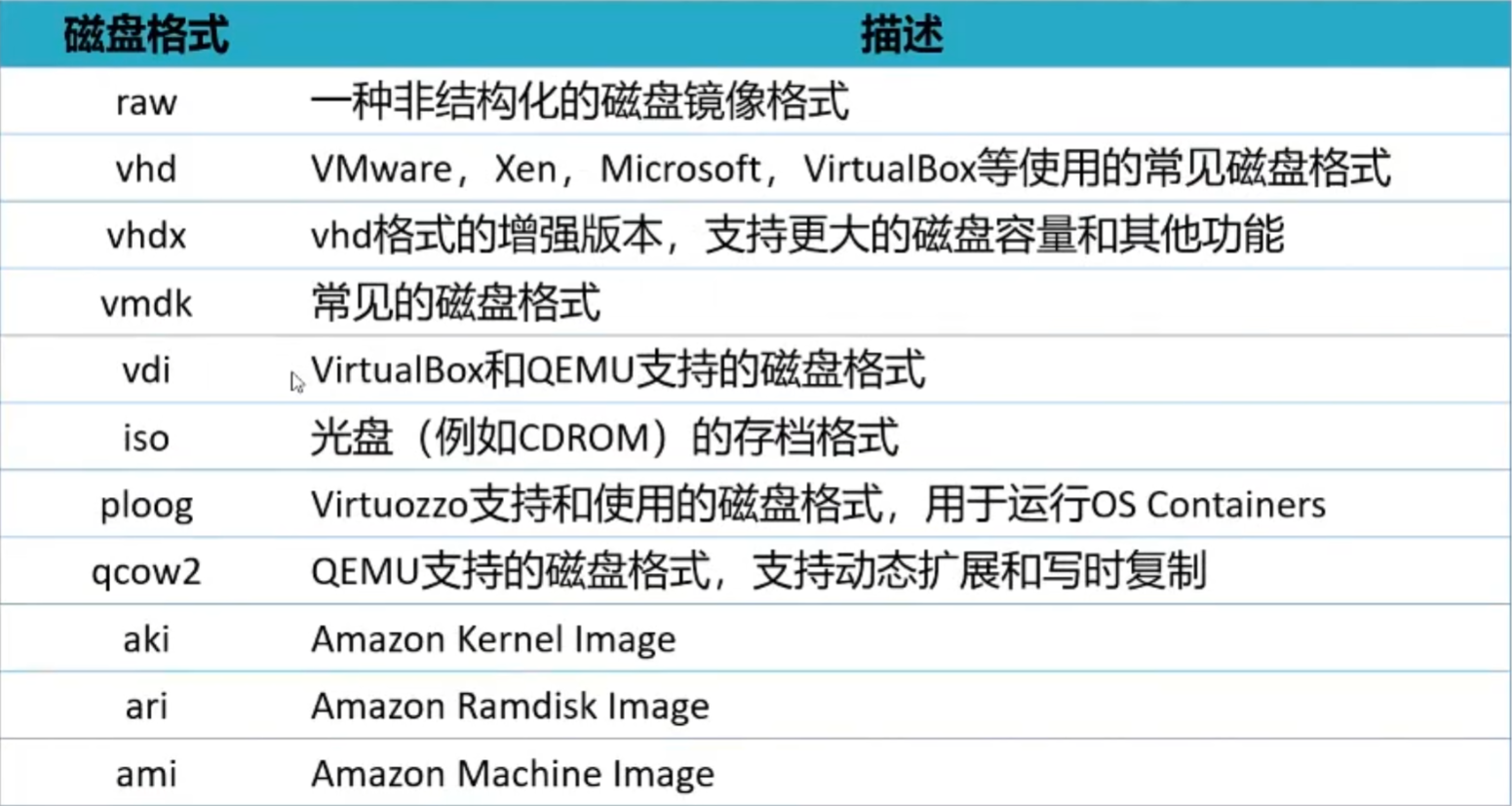

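Image disk and container formats

raw: an unstructured disk image format

vhd: a common disk format supported by VMware, Xen, Microsoft, VirtualBox, and others

vmdk: VMware's virtual disk format

vdi: a disk format supported by VirtualBox and QEMU

iso: an archive format for the data contents of an optical disc

qcow2: a disk format supported by QEMU; it grows on demand and supports copy-on-write

bare: the image has no container or metadata envelope

ovf: an open standard that describes an open, secure, efficient, and extensible portable format for packaging and distributing virtual machines and software

ova: an OVA archive file

aki: an Amazon kernel image

ami: an Amazon machine image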

```shell
# Upload an image
[root@openstack ~(keystone_admin)]# openstack image create --disk-format qcow2 --min-disk 10 --min-ram 512 --file /root/small.img small_rhel6
# List images
[root@openstack ~(keystone_admin)]# openstack image list
# Show image details
[root@openstack ~(keystone_admin)]# openstack image show small_rhel6
```

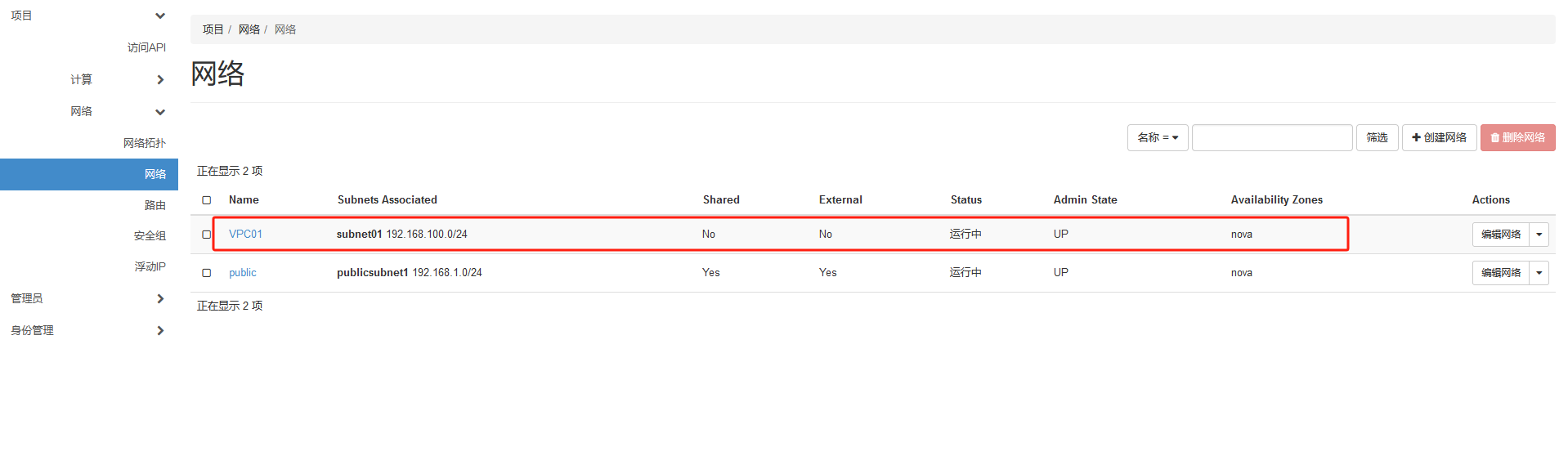

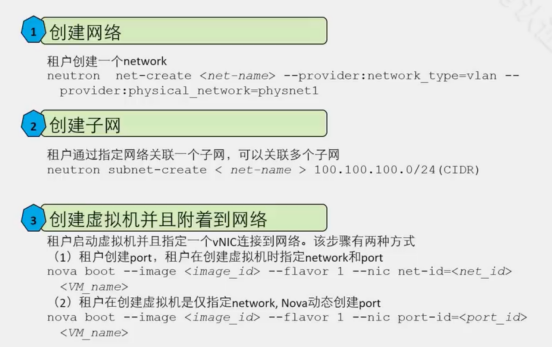

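# Networking

Instances are assigned to subnets to obtain network connectivity

Each project can have one or more subnets

In Red Hat OpenStack Platform, OpenStack Networking (Neutron) is the default networking option, with Nova networking as the fallback

Administrators can configure rich network topologies and connect other OpenStack services to interfaces on those networks

Each project can have multiple private networks, and the private networks of different projects do not interfere with each other

Project network: an internal network that Neutron provides for a project; networks can be isolated from each other with VLANs

External network: lets virtual machines reach external networks, but requires floating IP addresses

Provider network: connects instances to an existing network, so that instances share the same layer-2 network with external systems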

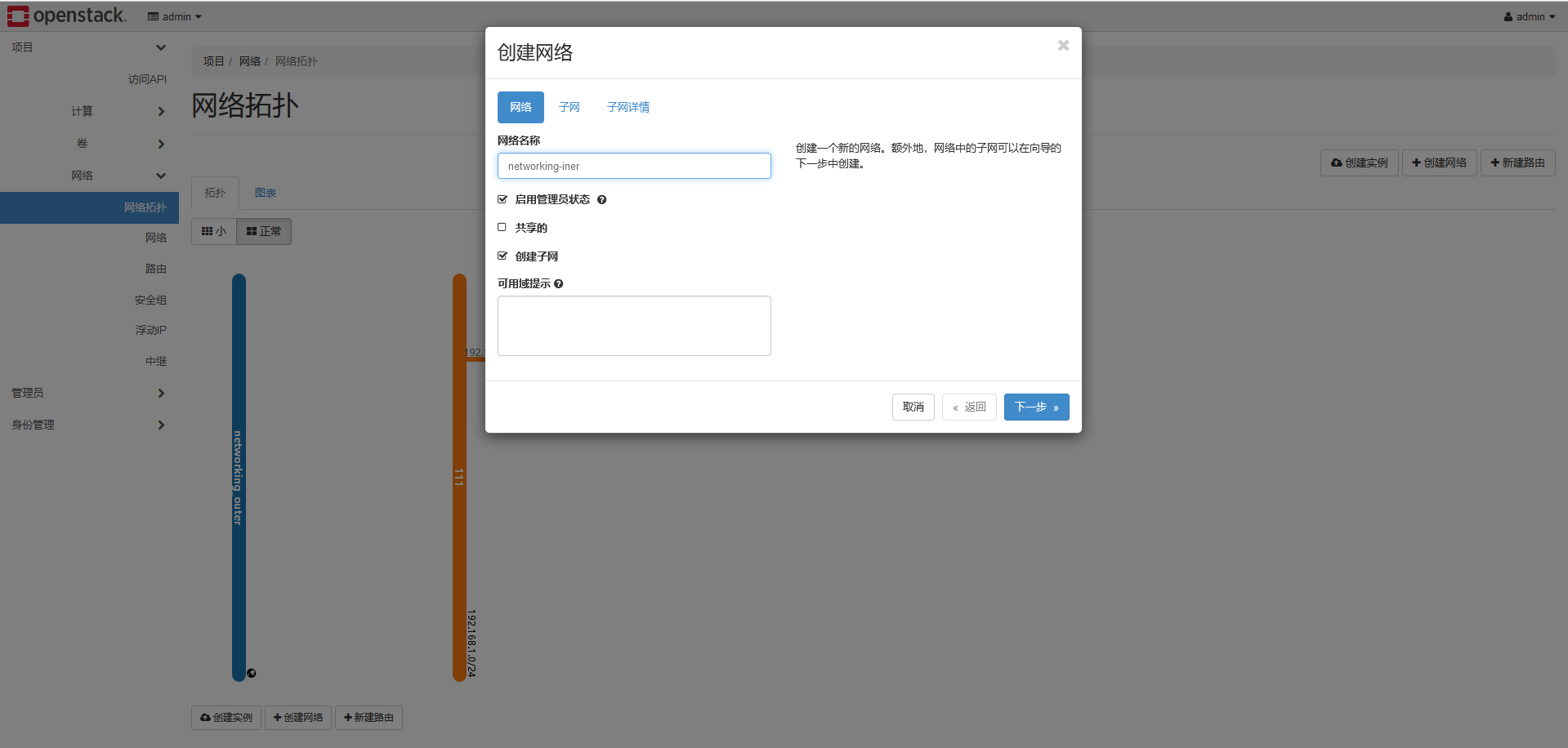

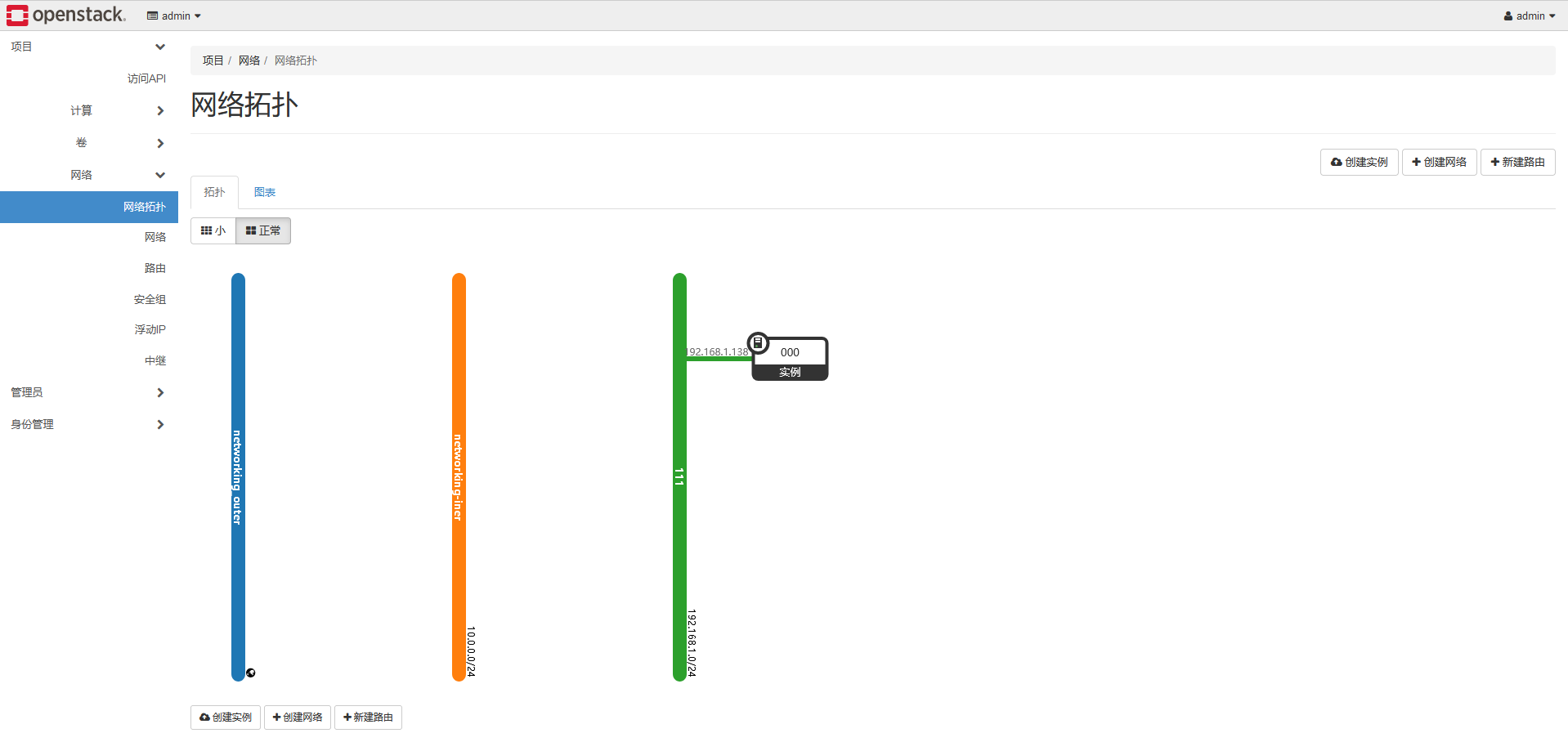

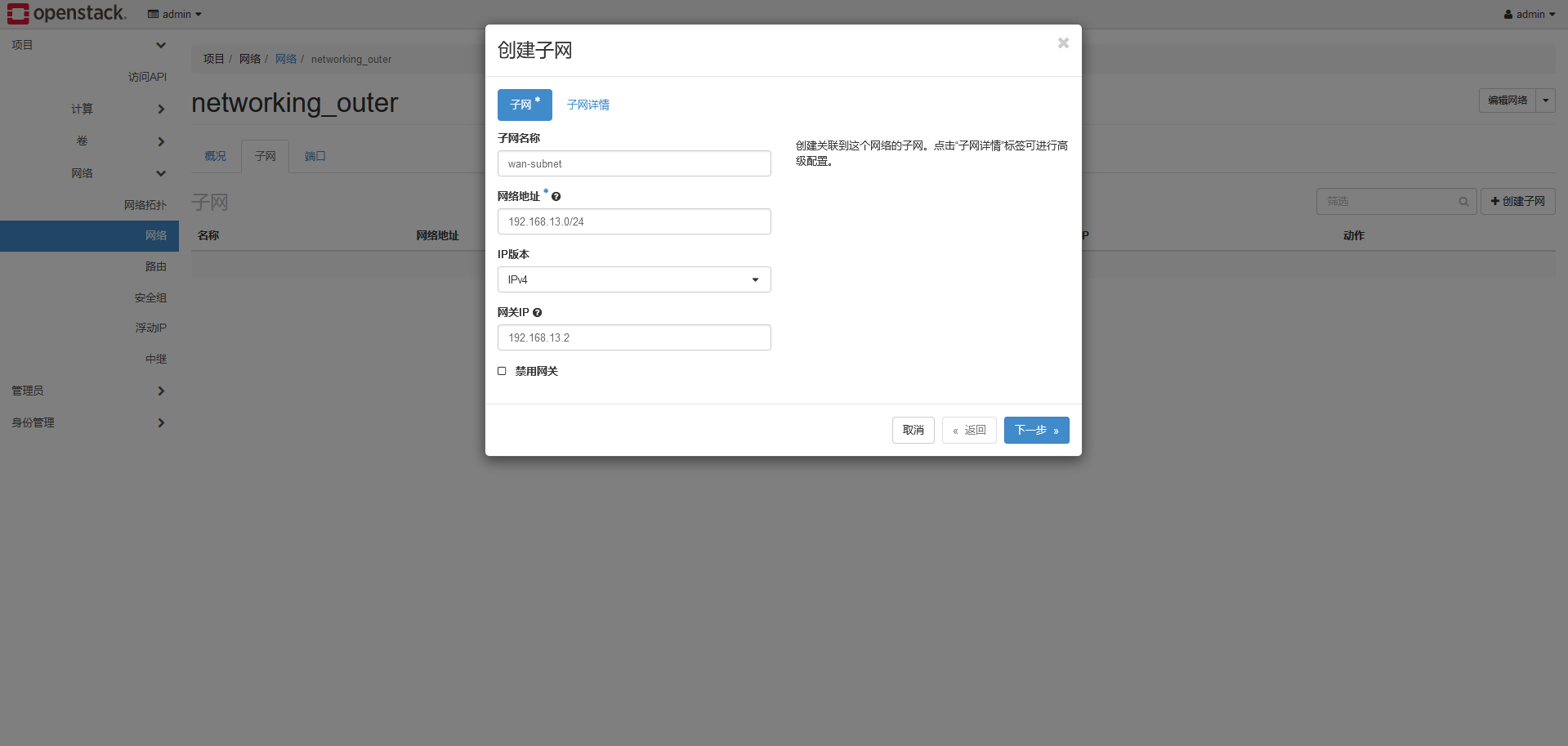

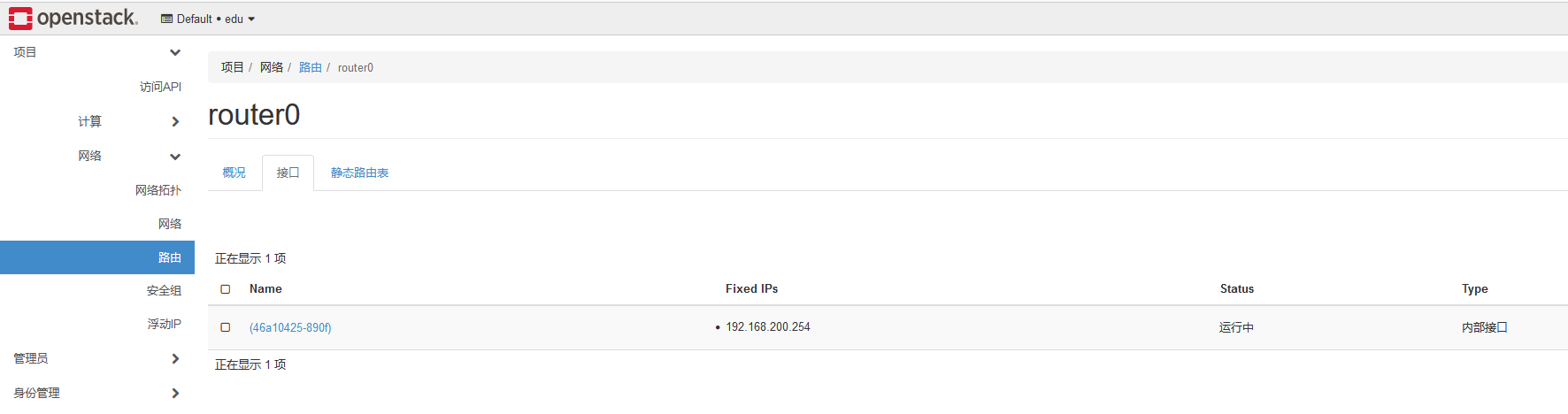

Create the internal network and its subnet

Create the external network and its subnet

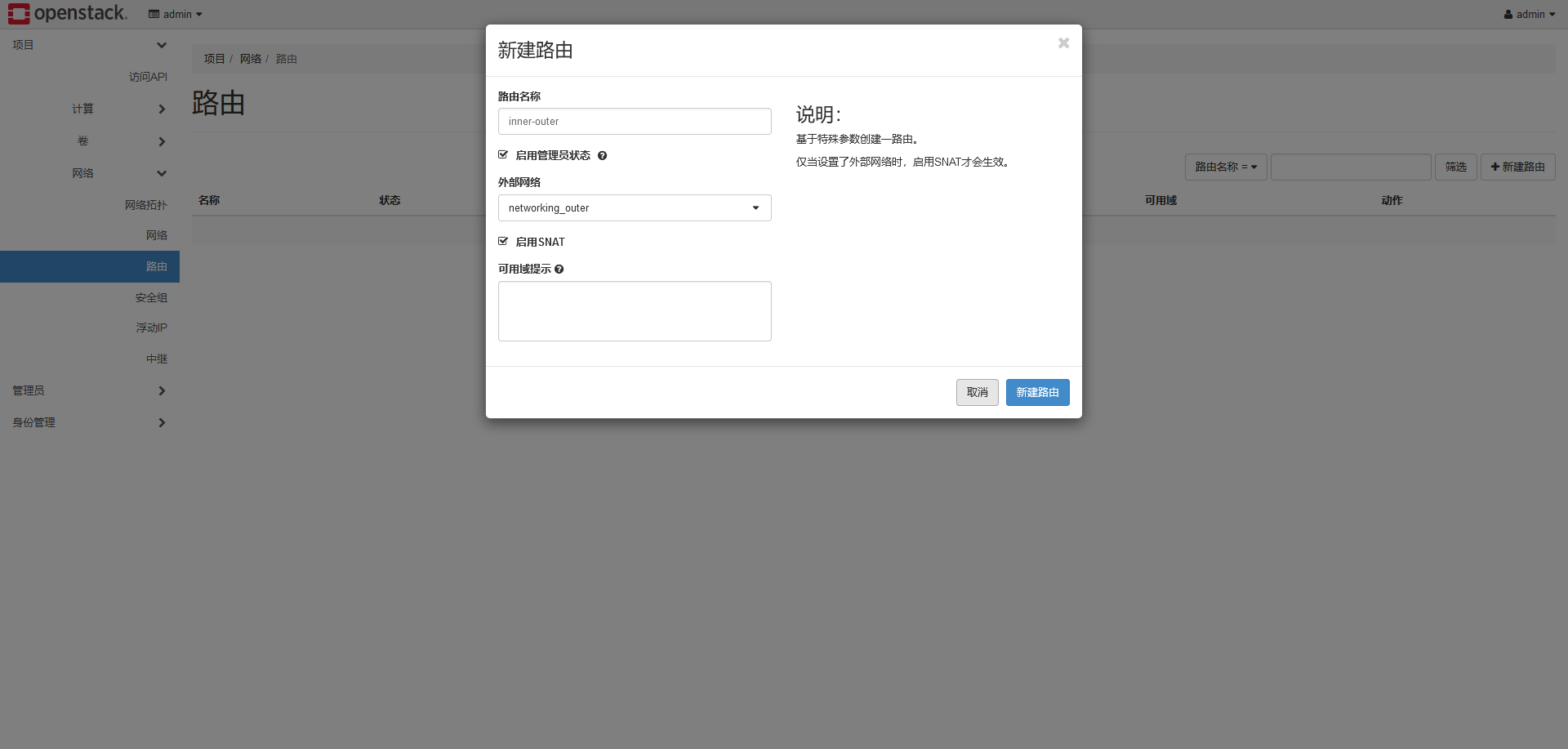

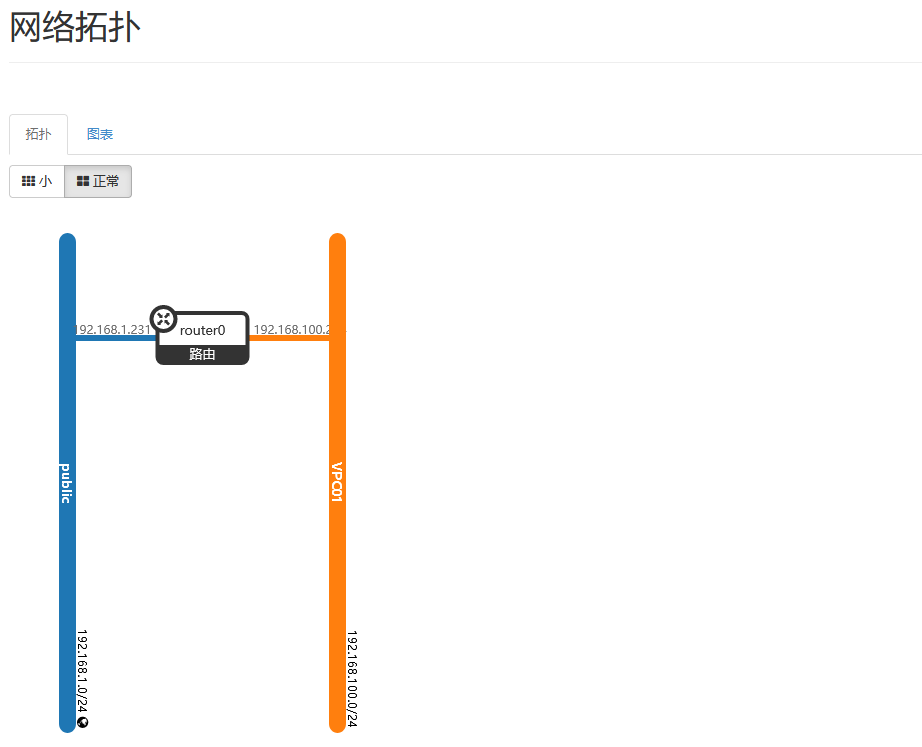

Create a router to connect the internal and external networks

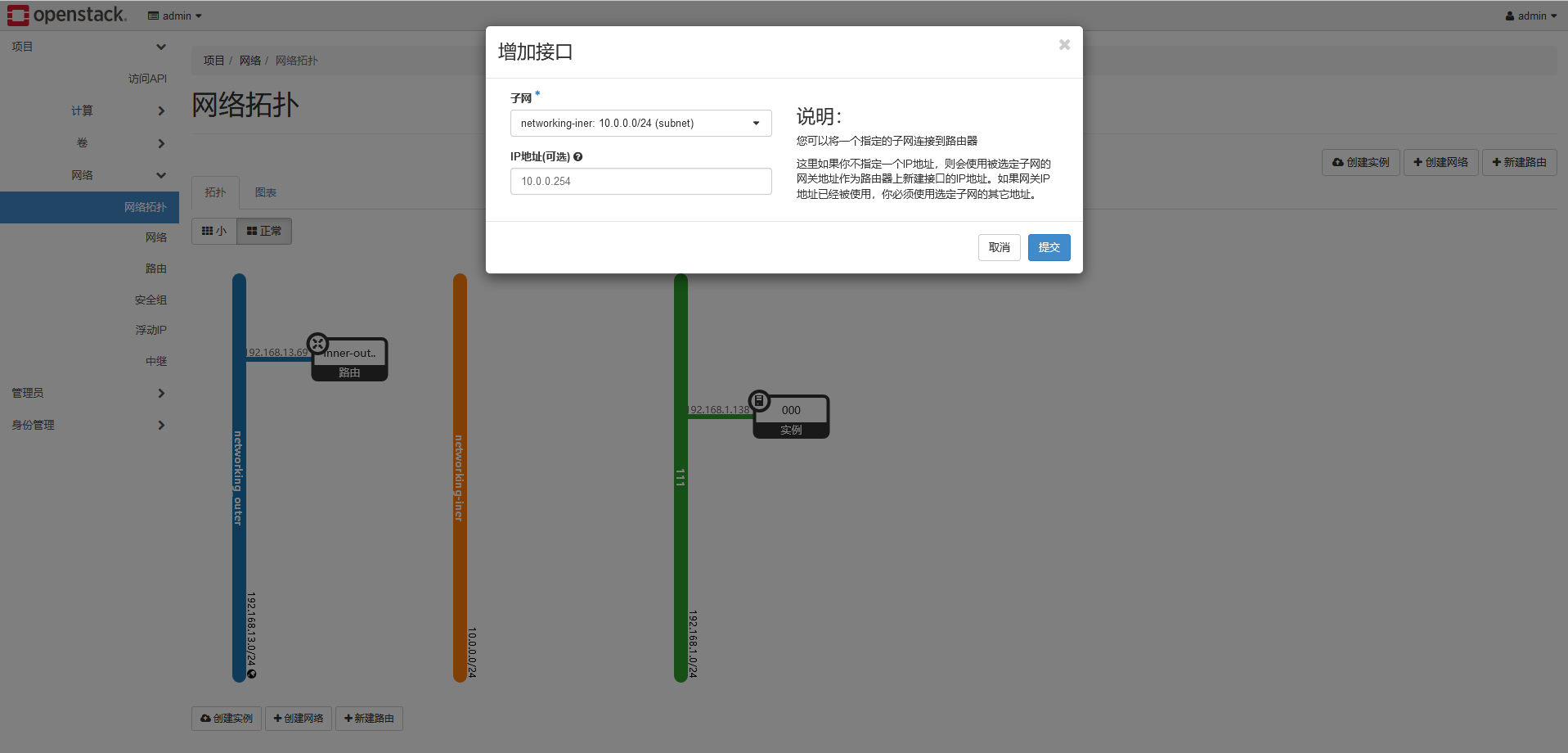

Add an interface

```shell
[root@openstack ~]# systemctl status openstack-nova-compute.service
```

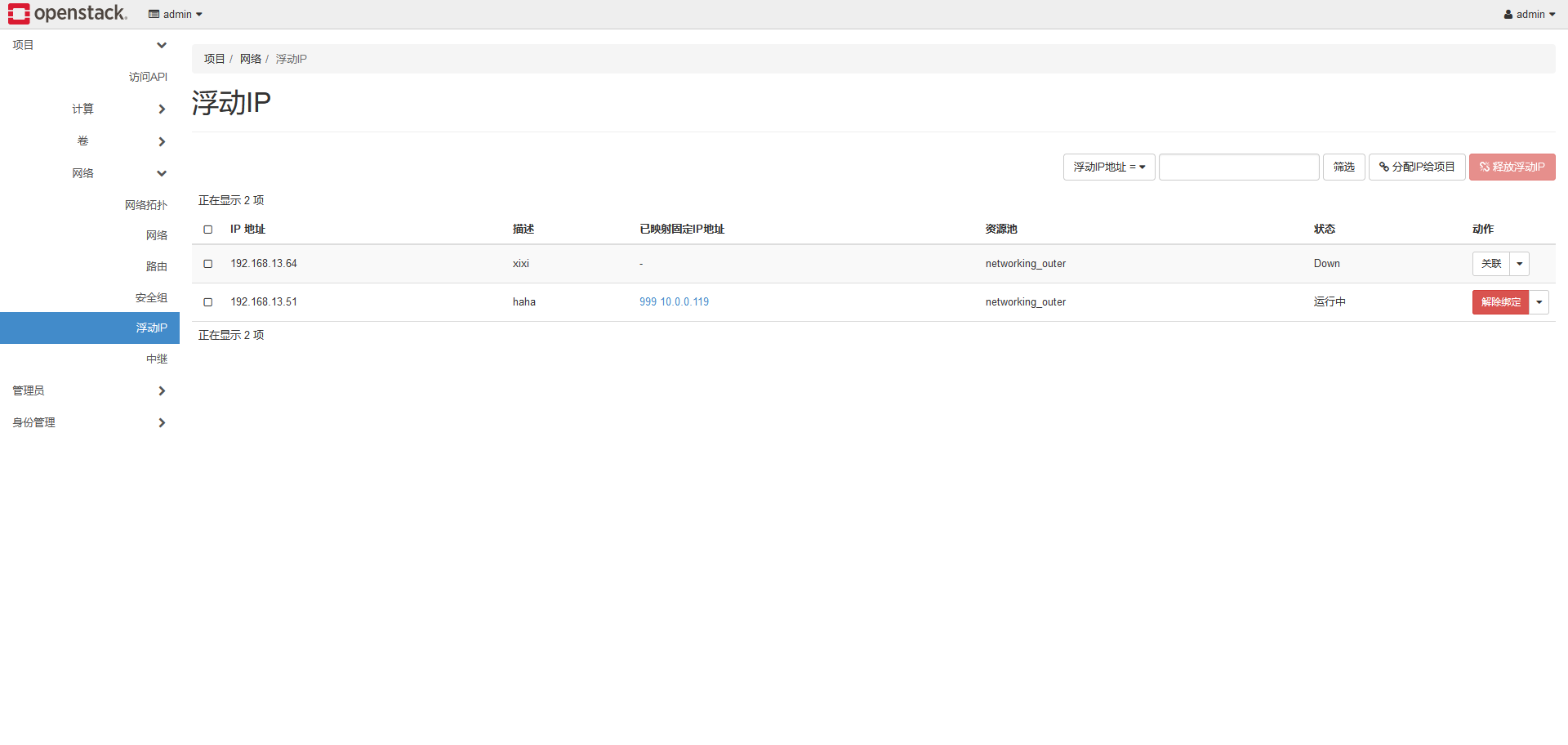

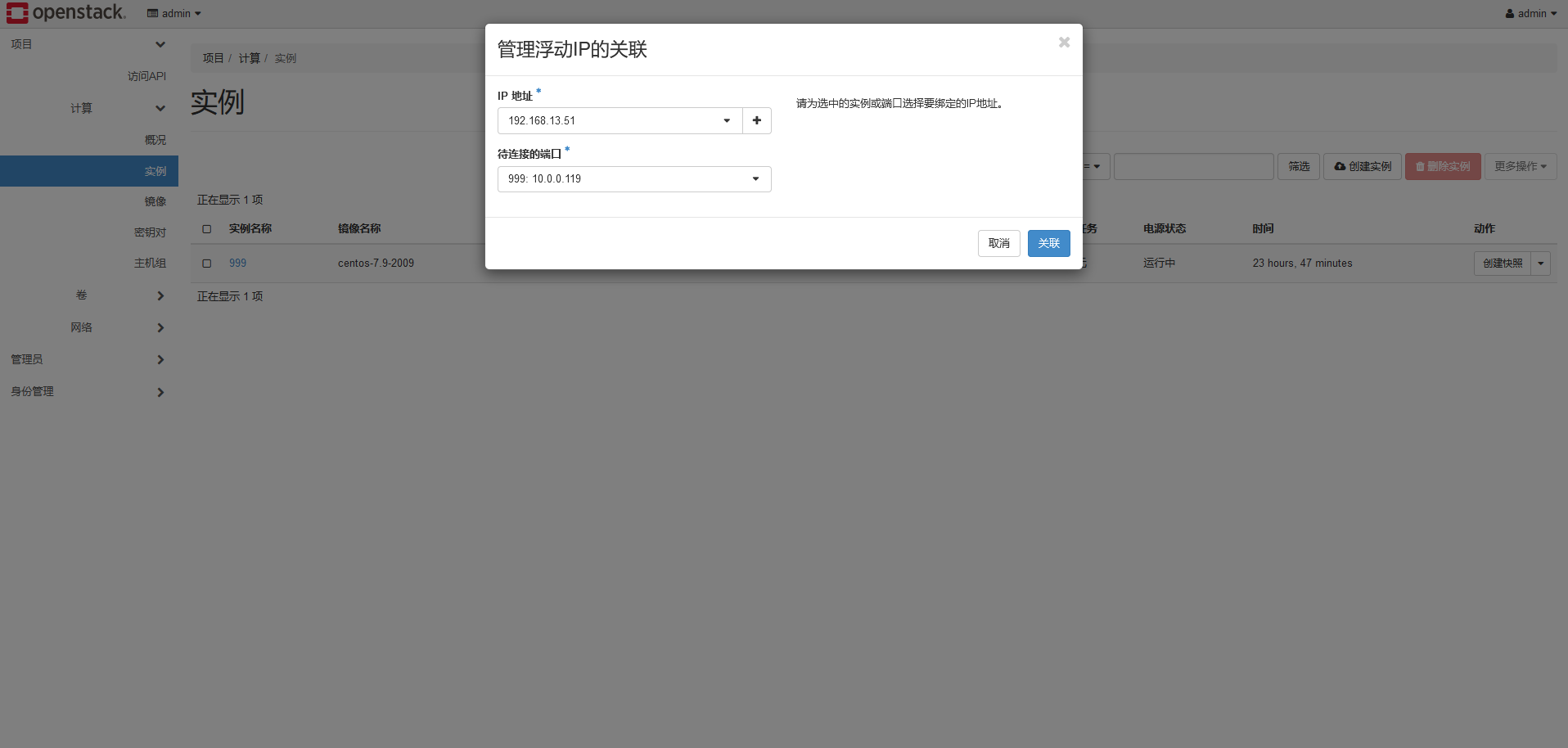

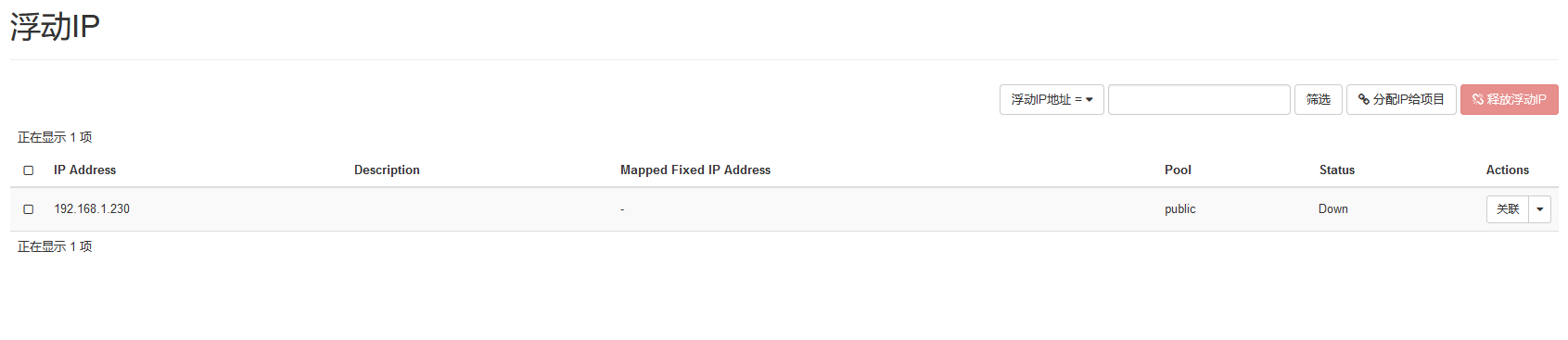

Create a floating IP and associate it with an instance

Scaling out

```shell
# Scale out OpenStack with another compute node, following the nova01 configuration procedure
# Configure a static IP (192.168.1.12) and the host name
# Make sure the node, openstack, and nova01 can all ping each other
# Configure /etc/resolv.conf and delete the line that starts with "search"
# Configure time synchronization in /etc/chrony.conf
# Configure the yum repositories
# Install the dependencies:
#   qemu-kvm libvirt-client libvirt-daemon libvirt-daemon-driver-qemu python-setuptools

# Answer file excerpt:
# List the servers on which to install the Compute service.
CONFIG_COMPUTE_HOSTS=192.168.13.202

# List of servers on which to install the network service such as
# Compute networking (nova network) or OpenStack Networking (neutron).
```

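Migrating instances

When there are several nova compute nodes, an instance can be selectively migrated from one host to another

Migration prerequisites

The nova compute nodes and the openstack management node can all ping each other, by host name as well as by IP

All compute nodes have qemu-img-rhev and qemu-kvm-rhev installed

If they were not installed before, restart the libvirtd service after installing them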

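# openstack

OpenStack delivers an Infrastructure as a Service (IaaS) solution through a set of interrelated services. Each service exposes an application programming interface (API) that makes this integration possible.

The OpenStack project is an open-source cloud computing platform for all types of clouds. Its goal is a platform that is simple to implement, massively scalable, feature-rich, and standardized.

OpenStack essentially consists of a series of commands known as scripts. These scripts are bundled into packages called projects, which relay the tasks that create cloud environments. To create those environments, OpenStack relies on two other types of software:

Virtualization software, which creates a layer of virtual resources abstracted from the hardware

A base operating system (OS), which carries out the commands issued by the OpenStack scripts

OpenStack itself does not virtualize resources; it uses virtualized resources to build clouds.

OpenStack, virtualization, and the base operating system: these three technologies work together to serve users.

OpenStack ships two major releases a year, usually in April and mid-October, and release names run alphabetically from A to Z.

```shell
[root@openstack ~]# rpm -qa | grep cinder
openstack-cinder-15.6.0-1.el7.noarch
puppet-cinder-15.5.0-1.el7.noarch
python2-cinder-15.6.0-1.el7.noarch
python2-cinderclient-5.0.2-1.el7.noarch

[root@openstack ~]# rpm -qa | grep nova
openstack-nova-scheduler-20.6.0-1.el7.noarch
openstack-nova-compute-20.6.0-1.el7.noarch
openstack-nova-novncproxy-20.6.0-1.el7.noarch
openstack-nova-api-20.6.0-1.el7.noarch
python2-novaclient-15.1.1-1.el7.noarch
openstack-nova-common-20.6.0-1.el7.noarch
openstack-nova-migration-20.6.0-1.el7.noarch
python2-nova-20.6.0-1.el7.noarch
openstack-nova-conductor-20.6.0-1.el7.noarch
puppet-nova-15.8.1-1.el7.noarch
```

The package version nova-api-20.6.0-1 identifies the corresponding release, which is Train (the T release): https://releases.openstack.org/train/index.html

OpenStack is not virtualization. It is only the control plane of the system and does not include data-plane components such as the hypervisor, storage, and network devices.

Virtualization is one of the underlying techniques that OpenStack builds on, but it is not its core concern.

Key differences between OpenStack and virtualization:

OpenStack is only a key component for building a cloud: the kernel, backbone, framework, or bus.

OpenStack is only a kernel. It cannot be used commercially as-is and needs secondary development.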
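Looking up the release from a package version can be sketched as a small lookup. This is an illustration only: the function name `nova_release_name` is hypothetical, and the table covers just the three nova major versions around the ones used in these notes (19 = Stein, 20 = Train, 21 = Ussuri); anything else is reported as unknown.

```shell
# nova_release_name PACKAGE
# Maps an openstack-nova-* package name to the OpenStack release name.
nova_release_name() {
    # Extract the major version, e.g. "20" from openstack-nova-api-20.6.0-1.el7.noarch
    major=$(printf '%s\n' "$1" | sed -E 's/^openstack-nova-[a-z]+-([0-9]+)\..*$/\1/')
    case "$major" in
        19) echo stein ;;
        20) echo train ;;
        21) echo ussuri ;;
        *)  echo unknown ;;
    esac
}

# Example: nova_release_name "$(rpm -q openstack-nova-api)"
```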

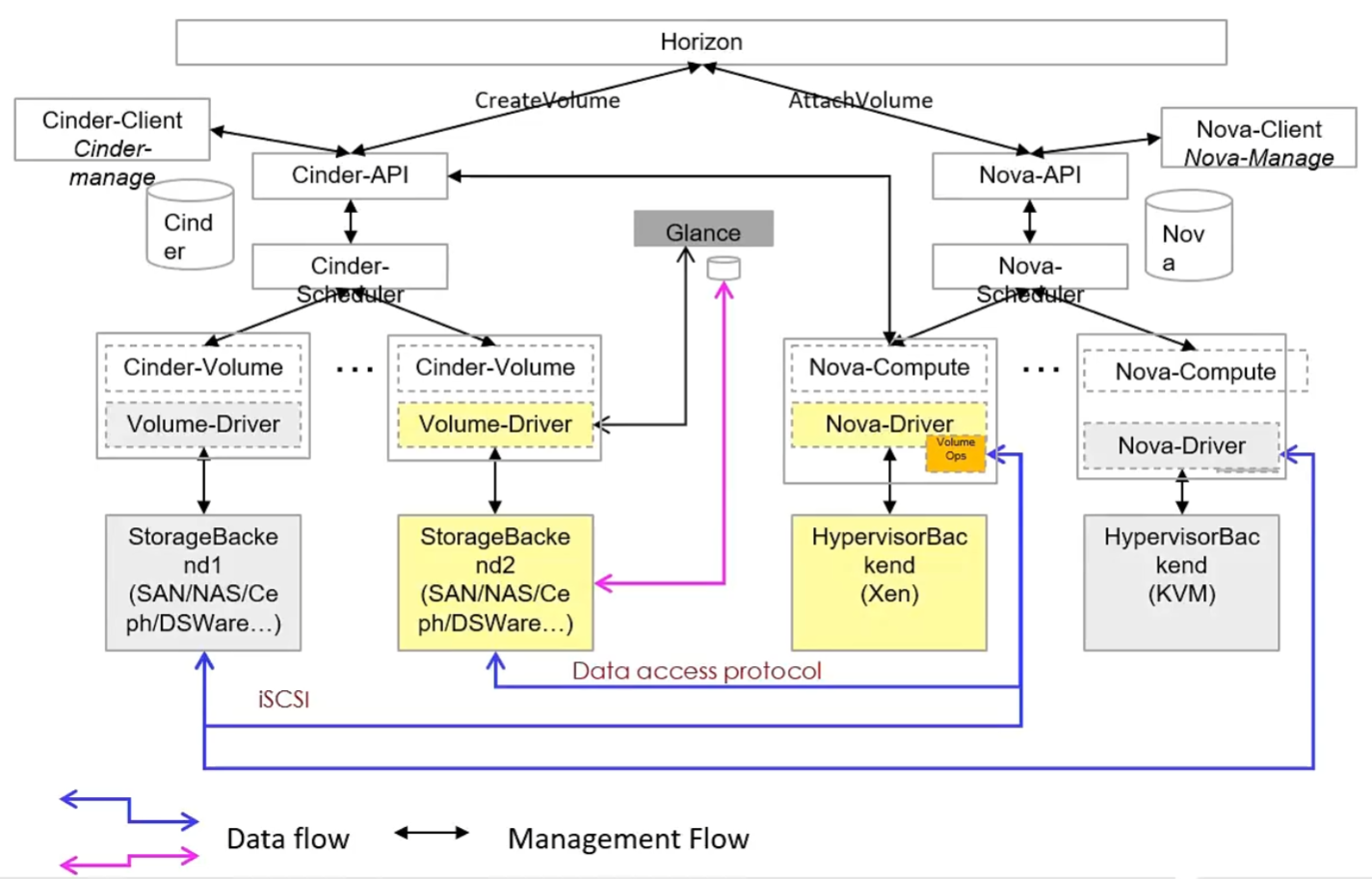

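# Components

```
horizon     provides the graphical dashboard
nova        provides compute resources; controller + compute
            the controller currently exists as a separate node
Controller  provides the API entry points, such as glance-api and nova-api; all resources are operated through the controller; needs mysql, ntp, MQ, AD
            clustered with ZooKeeper; the node count must be odd
compute     a host with KVM installed; xen, lxc, or esxi also work
            Huawei Cloud needs 3 controller + 2 network + N compute nodes
glance      provides the image service
swift       provides object storage
cinder      provides block storage
neutron     the network component; provides layer-2 networking, layer-3 routing, DHCP, and more
heat        orchestration service
ceilometer  metering service; monitors resource usage on each host; billing
keystone    identity and authentication
```

For OpenStack components to integrate with a virtualization platform, a storage back end, and so on, driver programs have to be developed

When a standard OpenStack command is executed, the driver translates the OpenStack command into storage commands

```
Deployment methods
packstack
devstack
ansible    open-source deployment
TripleO    OpenStack on OpenStack
           done through the Heat orchestration tool by filling in parameters
```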

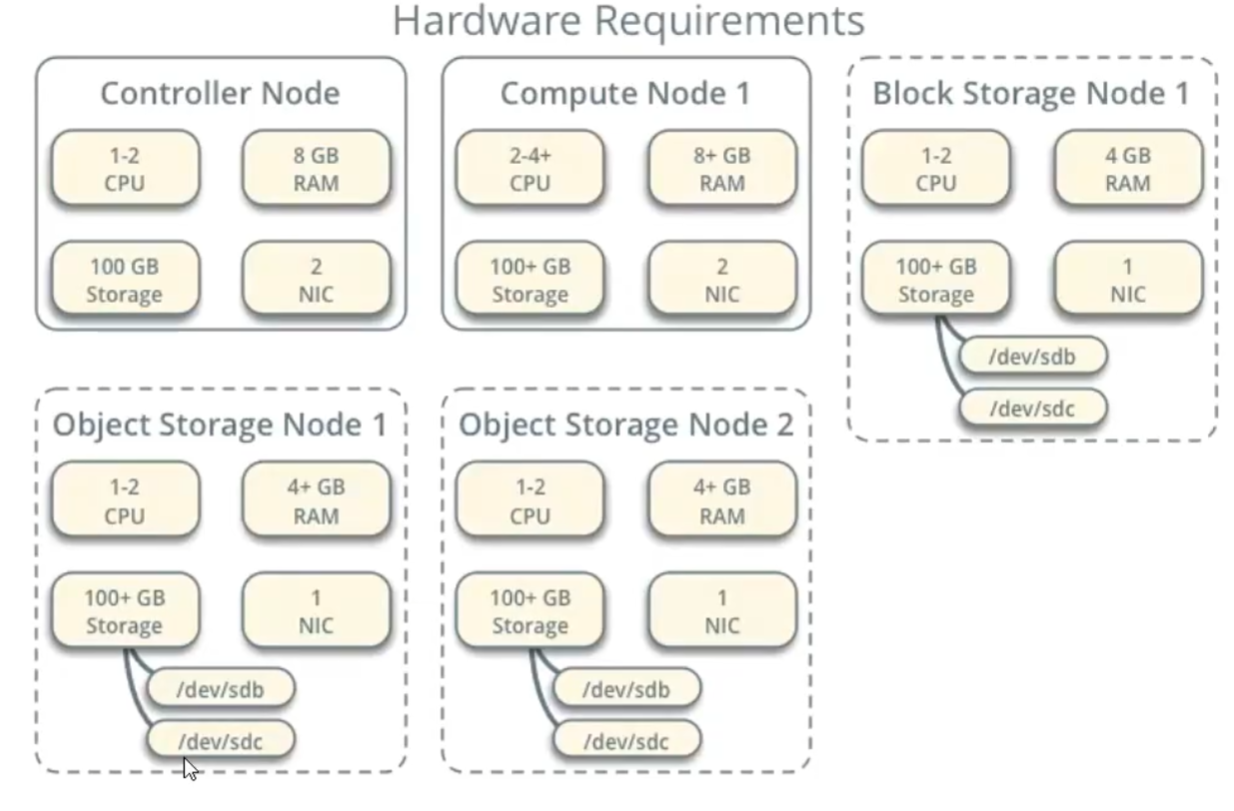

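```
Recommended deployment: three hosts (one controller + two compute nodes) on CentOS 8 Stream; the minimum is two hosts (one controller + one compute node).
Controller node memory: ideally 8 GB, at least 4 GB. Compute node memory: 4 GB or 8 GB.
Disk: 100 GB. CPU: at least 2 cores, ideally 4 cores, with VT-x enabled.
Each host has two NICs; the first NIC must be able to reach the Internet and is used for the management network and for installing packages.

controller node:
  nova-api, glance-api, cinder-api, and similar components are deployed on this node; it is the entry point for all service access
  ntp
  database
  message queue
  keystone
  ZooKeeper: the node count must be odd to prevent split-brain; at least three controller nodes
  768 GB memory (HCS)

networking
  TYPE1: network functions such as VLAN, router, and DHCP are provided by neutron on network nodes (self-service networks)
  TYPE2: hardware SDN controller
  TYPE3: provider networks; network functions such as VLAN and router are implemented by the underlying hardware
```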

```shell
# Virtual machine plan
192.168.13.20 controller
192.168.13.21 compute01
192.168.13.22 compute02

# Configure IP addresses
[root@controller ~]# nmcli connection modify ens160 ipv4.addresses 192.168.13.20/24 ipv4.dns 8.8.8.8 ipv4.gateway 192.168.13.2 ipv4.method manual autoconnect yes
[root@controller ~]# nmcli connection up ens160

[root@compute01 ~]# nmcli connection modify ens160 ipv4.addresses 192.168.13.21/24 ipv4.dns 8.8.8.8 ipv4.gateway 192.168.13.2 ipv4.method manual autoconnect yes
[root@compute01 ~]# nmcli connection up ens160

[root@compute02 ~]# nmcli connection modify ens160 ipv4.addresses 192.168.13.22/24 ipv4.dns 8.8.8.8 ipv4.gateway 192.168.13.2 ipv4.method manual autoconnect yes
[root@compute02 ~]# nmcli connection up ens160

# On all machines, edit /etc/hosts and add the following entries; do not delete the 127.0.0.1 line
192.168.13.20 controller
192.168.13.21 compute01
192.168.13.22 compute02

# Set the host names
[root@controller ~]# hostnamectl set-hostname controller
[root@compute01 ~]# hostnamectl set-hostname compute01
[root@compute02 ~]# hostnamectl set-hostname compute02

# Disable SELinux and firewalld on all nodes
systemctl disable firewalld --now
sed -ri 's#(SELINUX=)enforcing#\1disabled#' /etc/selinux/config

# keygen
[root@controller ~]# ssh-keygen -t rsa
[root@controller ~]# ssh-copy-id controller
[root@controller ~]# ssh-copy-id compute01
[root@controller ~]# ssh-copy-id compute02

# ntp
dnf install chrony -y
systemctl enable chronyd --now

[root@controller ~]# vim /etc/chrony.conf
# pool 2.centos.pool.ntp.org iburst
allow 0.0.0.0/0
local stratum 10
systemctl restart chronyd

[root@compute01 ~]# vim /etc/chrony.conf
pool controller iburst
[root@compute01 ~]# systemctl restart chronyd

[root@compute02 ~]# vim /etc/chrony.conf
pool controller iburst
[root@compute02 ~]# systemctl restart chronyd

chronyc -a makestep
chronyc sources

# Enable the package repositories on all nodes; on CentOS 8 they can be installed directly with yum
yum install centos-release-openstack-ussuri.noarch -y
yum config-manager --set-enabled powertools

# Run on all nodes
yum upgrade
reboot
yum install python3-openstackclient
yum install openstack-selinux -y

# Install the database on the controller node
yum install mariadb mariadb-server python2-PyMySQL -y
[root@controller my.cnf.d]# vim openstack.cnf
[mysqld]
bind-address = controller

default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8

systemctl enable mariadb --now
mysql_secure_installation   # answer Y to every question; the password used here is redhat
systemctl restart mariadb.service

# Install the message queue on the controller node
yum install rabbitmq-server -y
systemctl enable rabbitmq-server.service --now
rabbitmqctl add_user openstack redhat
rabbitmqctl set_permissions openstack ".*" ".*" ".*"
# Listening socket after start-up:
# tcp6 0 0 :::5672 :::* LISTEN 5373/beam.smp

# Install memcached on the controller node; keystone caches tokens in it
# The Identity service authentication mechanism for services uses Memcached to cache tokens.
yum install memcached python3-memcached -y
vim /etc/sysconfig/memcached
PORT="11211"
USER="memcached"
MAXCONN="1024"
CACHESIZE="64"
OPTIONS="-l 127.0.0.1,::1,controller"

systemctl enable memcached.service --now
# tcp 0 0 192.168.13.20:11211 0.0.0.0:* LISTEN 7961/memcached

# Install etcd on the controller node
yum install etcd -y
vim /etc/etcd/etcd.conf
#[Member]
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://192.168.13.20:2380"
ETCD_LISTEN_CLIENT_URLS="http://192.168.13.20:2379"
ETCD_NAME="controller"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.13.20:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.13.20:2379"
ETCD_INITIAL_CLUSTER="controller=http://192.168.13.20:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"
ETCD_INITIAL_CLUSTER_STATE="new"

systemctl enable etcd --now
# tcp 0 0 192.168.13.20:2379 0.0.0.0:* LISTEN 9441/etcd
# tcp 0 0 192.168.13.20:2380 0.0.0.0:* LISTEN 9441/etcd

# https://docs.openstack.org/install-guide/environment-packages-rdo.html

####### Environment setup complete ######

# Install keystone
# https://docs.openstack.org/keystone/ussuri/install/keystone-install-rdo.html
mysql -uroot -predhat
CREATE DATABASE keystone;
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'redhat';
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'redhat';

yum install openstack-keystone httpd python3-mod_wsgi -y

vim /etc/keystone/keystone.conf
[database]
# ...
connection = mysql+pymysql://keystone:redhat@controller/keystone
# ...
[token]
provider = fernet

su -s /bin/sh -c "keystone-manage db_sync" keystone
keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
keystone-manage credential_setup --keystone-user keystone --keystone-group keystone
keystone-manage bootstrap --bootstrap-password redhat \
  --bootstrap-admin-url http://controller:5000/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

vim /etc/httpd/conf/httpd.conf
ServerName controller
ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/
systemctl enable httpd.service --now

vim admin-openrc
export OS_USERNAME=admin
export OS_PASSWORD=redhat
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3

. admin-openrc
openstack domain create --description "An Example Domain" example
openstack project create --domain default \
  --description "Service Project" service
openstack project create --domain default \
  --description "Demo Project" myproject
openstack user create --domain default \
  --password-prompt myuser
openstack role create myrole
openstack role add --project myproject --user myuser myrole

# Install glance
# https://docs.openstack.org/glance/ussuri/install/install-rdo.html#prerequisites
mysql -u root -predhat
CREATE DATABASE glance;
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \
  IDENTIFIED BY 'redhat';
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \
  IDENTIFIED BY 'redhat';

. admin-openrc
openstack user create --domain default --password-prompt glance   # create the glance user in OpenStack
openstack role add --project service --user glance admin
openstack service create --name glance \
  --description "OpenStack Image" image
openstack endpoint create --region RegionOne \
  image public http://controller:9292
openstack endpoint create --region RegionOne \
  image internal http://controller:9292
openstack endpoint create --region RegionOne \
  image admin http://controller:9292

vim /etc/glance/glance-api.conf
[database]
connection = mysql+pymysql://glance:redhat@controller/glance

[keystone_authtoken]
www_authenticate_uri = http://controller:5000
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = glance
password = redhat

[paste_deploy]
flavor = keystone

[glance_store]
stores = file,http
default_store = file

# Verify glance
. admin-openrc
wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img
glance image-create --name "cirros" \
  --file cirros-0.4.0-x86_64-disk.img \
  --disk-format qcow2 --container-format bare \
  --visibility=public
glance image-list
+--------------------------------------+--------+
| ID                                   | Name   |
+--------------------------------------+--------+
| e4c7903b-fc16-4d62-b60c-0e5ca211054e | cirros |
+--------------------------------------+--------+
```
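Every service in this walkthrough (keystone, glance, and the later ones) starts with the same three SQL statements, so they can be generated instead of retyped. A minimal sketch, assuming a hypothetical helper named `service_db_sql`; it only prints SQL and does not connect to the database itself.

```shell
# service_db_sql DB USER PASSWORD
# Prints the CREATE DATABASE and GRANT statements for one OpenStack service.
service_db_sql() {
    db=$1
    user=$2
    pass=$3
    cat <<EOF
CREATE DATABASE $db;
GRANT ALL PRIVILEGES ON $db.* TO '$user'@'localhost' IDENTIFIED BY '$pass';
GRANT ALL PRIVILEGES ON $db.* TO '$user'@'%' IDENTIFIED BY '$pass';
EOF
}

# Example: service_db_sql glance glance redhat | mysql -u root -p
```

Printing the statements first makes it easy to review them before piping them into `mysql`.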

```shell
# Install placement. Before the Stein (S) release, placement was part of the nova API; since then it is a separate service.
# When a user requests a new instance, the request goes to the nova API. Nova tallies the remaining resources
# of all compute nodes and decides which compute node runs the instance.
# https://docs.openstack.org/placement/ussuri/install/install-rdo.html

mysql -u root -p
CREATE DATABASE placement;
GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' \
  IDENTIFIED BY 'redhat';
GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' \
  IDENTIFIED BY 'redhat';

. admin-openrc
openstack user create --domain default --password-prompt placement
openstack role add --project service --user placement admin
openstack service create --name placement --description "Placement API" placement
openstack endpoint create --region RegionOne placement public http://controller:8778
openstack endpoint create --region RegionOne placement internal http://controller:8778
openstack endpoint create --region RegionOne placement admin http://controller:8778

yum install openstack-placement-api

vim /etc/placement/placement.conf
[placement_database]
connection = mysql+pymysql://placement:redhat@controller/placement

[api]
auth_strategy = keystone

[keystone_authtoken]
auth_url = http://controller:5000/v3
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = placement
password = redhat

su -s /bin/sh -c "placement-manage db sync" placement

vim /etc/httpd/conf.d/00-placement-api.conf
<VirtualHost *:8778>
  ...
  <Directory /usr/bin>
    <IfVersion >= 2.4>
      Require all granted
    </IfVersion>
    <IfVersion < 2.4>
      Order allow,deny
      Allow from all
    </IfVersion>
  </Directory>
</VirtualHost>

systemctl restart httpd

# Check
placement-status upgrade check
pip install osc-placement
openstack --os-placement-api-version 1.2 resource class list --sort-column name
openstack --os-placement-api-version 1.6 trait list --sort-column name
```

    # nova
    # https://docs.openstack.org/nova/ussuri/install/
    # controller node
    mysql -u root -p
    CREATE DATABASE nova_api;
    CREATE DATABASE nova;
    CREATE DATABASE nova_cell0;
    GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \
      IDENTIFIED BY 'redhat';
    GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \
      IDENTIFIED BY 'redhat';
    GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \
      IDENTIFIED BY 'redhat';
    GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \
      IDENTIFIED BY 'redhat';
    GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' \
      IDENTIFIED BY 'redhat';
    GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' \
      IDENTIFIED BY 'redhat';

    . admin-openrc
    openstack user create --domain default --password-prompt nova
    openstack role add --project service --user nova admin
    openstack service create --name nova \
      --description "OpenStack Compute" compute
    openstack endpoint create --region RegionOne \
      compute public http://controller:8774/v2.1
    openstack endpoint create --region RegionOne \
      compute internal http://controller:8774/v2.1
    openstack endpoint create --region RegionOne \
      compute admin http://controller:8774/v2.1
    yum install openstack-nova-api openstack-nova-conductor \
      openstack-nova-novncproxy openstack-nova-scheduler -y

    vim /etc/nova/nova.conf
    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    [api_database]
    connection = mysql+pymysql://nova:redhat@controller/nova_api
    [database]
    connection = mysql+pymysql://nova:redhat@controller/nova
    [DEFAULT]
    transport_url = rabbit://openstack:redhat@controller:5672/
    [api]
    auth_strategy = keystone
    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000/
    auth_url = http://controller:5000/
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = nova
    password = redhat
    [DEFAULT]
    my_ip = 192.168.13.20
    [vnc]
    enabled = true
    server_listen = $my_ip
    server_proxyclient_address = $my_ip
    [glance]
    api_servers = http://controller:9292
    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp
    [placement]
    region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://controller:5000/v3
    username = placement
    password = redhat

    su -s /bin/sh -c "nova-manage api_db sync" nova
    su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
    su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
    su -s /bin/sh -c "nova-manage db sync" nova
    su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova
    systemctl enable \
      openstack-nova-api.service \
      openstack-nova-scheduler.service \
      openstack-nova-conductor.service \
      openstack-nova-novncproxy.service \
      --now

    # compute node
    yum install openstack-nova-compute
    # Replace my_ip on each node
    vim /etc/nova/nova.conf
    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    transport_url = rabbit://openstack:redhat@controller
    my_ip = 192.168.13.21
    [api]
    auth_strategy = keystone
    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000/
    auth_url = http://controller:5000/
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = nova
    password = redhat
    [vnc]
    enabled = true
    server_listen = 0.0.0.0
    server_proxyclient_address = $my_ip
    novncproxy_base_url = http://controller:6080/vnc_auto.html
    [glance]
    api_servers = http://controller:9292
    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp
    [placement]
    region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://controller:5000/v3
    username = placement
    password = redhat

    egrep -c '(vmx|svm)' /proc/cpuinfo
    # Required on CentOS Stream 9 with the 2024 release
    yum install qemu-kvm libvirt libvirt-daemon virt-install virt-manager libvirt-dbus -y
    systemctl enable libvirtd.service openstack-nova-compute.service --now
    chmod 777 -R /usr/lib/python3.9/site-packages/

    # controller node
    openstack compute service list --service nova-compute
    su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
    vim /etc/nova/nova.conf
    [scheduler]
    discover_hosts_in_cells_interval = 300

    # Verify
    openstack compute service list
    openstack catalog list
    nova-status upgrade check
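The `egrep -c '(vmx|svm)' /proc/cpuinfo` check in the compute-node steps counts the CPU flags for hardware virtualization. The official install guide says that when the count is zero, libvirt should be configured with `virt_type = qemu` under `[libvirt]`. A minimal sketch of that decision, where the helper name `pick_virt_type` is hypothetical and not part of nova:

```shell
#!/usr/bin/env bash
# pick_virt_type is a hypothetical helper, not a nova command.
# It reads a cpuinfo-style file and prints "kvm" when the vmx (Intel VT-x)
# or svm (AMD-V) flag is present, otherwise "qemu".
pick_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    if grep -Eq '(vmx|svm)' "$cpuinfo" 2>/dev/null; then
        echo kvm
    else
        echo qemu
    fi
}

# Print the nova.conf fragment that would suit this host.
printf '[libvirt]\nvirt_type = %s\n' "$(pick_virt_type)"
```

The printed fragment would go into /etc/nova/nova.conf on the compute node, followed by a restart of openstack-nova-compute.service.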

    # neutron
    # Run on the controller node
    mysql -u root -p
    CREATE DATABASE neutron;
    GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \
      IDENTIFIED BY 'redhat';
    GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \
      IDENTIFIED BY 'redhat';

    . admin-openrc
    openstack user create --domain default --password-prompt neutron
    openstack role add --project service --user neutron admin
    openstack service create --name neutron \
      --description "OpenStack Networking" network
    openstack endpoint create --region RegionOne \
      network public http://controller:9696
    openstack endpoint create --region RegionOne \
      network internal http://controller:9696
    openstack endpoint create --region RegionOne \
      network admin http://controller:9696
    yum install openstack-neutron openstack-neutron-ml2 \
      openstack-neutron-linuxbridge ebtables

    vim /etc/neutron/neutron.conf
    [database]
    connection = mysql+pymysql://neutron:redhat@controller/neutron
    [DEFAULT]
    core_plugin = ml2
    service_plugins = router
    allow_overlapping_ips = true
    transport_url = rabbit://openstack:redhat@controller
    auth_strategy = keystone
    notify_nova_on_port_status_changes = true
    notify_nova_on_port_data_changes = true
    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = redhat
    [nova]
    auth_url = http://controller:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = nova
    password = redhat
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

    vim /etc/neutron/plugins/ml2/ml2_conf.ini
    [ml2]
    type_drivers = flat,vlan,vxlan
    tenant_network_types = vxlan
    mechanism_drivers = linuxbridge,l2population
    extension_drivers = port_security
    [ml2_type_flat]
    flat_networks = provider
    [ml2_type_vxlan]
    vni_ranges = 1:1000
    [securitygroup]
    enable_ipset = true

    vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini
    [linux_bridge]
    physical_interface_mappings = provider:ens160
    [vxlan]
    enable_vxlan = true
    local_ip = 192.168.13.20
    l2_population = true
    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

    # Run these commands on all nodes
    yum install bridge-utils -y
    modprobe br_netfilter
    echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
    sysctl -a | grep bridge
    # Make sure the following are set to 1
    net.bridge.bridge-nf-call-arptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1

    vim /etc/neutron/l3_agent.ini
    [DEFAULT]
    interface_driver = linuxbridge

    vim /etc/neutron/dhcp_agent.ini
    [DEFAULT]
    interface_driver = linuxbridge
    dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
    enable_isolated_metadata = true

    vim /etc/neutron/metadata_agent.ini
    [DEFAULT]
    nova_metadata_host = controller
    metadata_proxy_shared_secret = redhat

    vim /etc/nova/nova.conf
    [neutron]
    auth_url = http://controller:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = redhat
    service_metadata_proxy = true
    metadata_proxy_shared_secret = redhat

    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
    su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
      --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron
    systemctl restart openstack-nova-api.service
    systemctl enable neutron-server.service \
      neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
      neutron-metadata-agent.service \
      --now
    systemctl enable neutron-l3-agent.service --now

    # compute node
    yum install openstack-neutron-linuxbridge ebtables ipset
    vim /etc/neutron/neutron.conf
    [DEFAULT]
    transport_url = rabbit://openstack:redhat@controller
    auth_strategy = keystone
    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = redhat
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

    vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini
    [linux_bridge]
    physical_interface_mappings = provider:ens160
    [vxlan]
    enable_vxlan = true
    local_ip = 192.168.13.21
    l2_population = true
    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

    vim /etc/nova/nova.conf
    [neutron]
    # ...
    auth_url = http://controller:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = redhat

    # Required for OpenStack 2024
    mkdir /usr/lib/python3.9/site-packages/instances
    chown -R root:nova /usr/lib/python3.9/site-packages/instances
    systemctl restart openstack-nova-compute.service
    systemctl enable neutron-linuxbridge-agent.service --now

    # Verify
    . admin-openrc
    [root@controller ~]# openstack network agent list
    +--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
    | ID                                   | Agent Type         | Host       | Availability Zone | Alive | State | Binary                    |
    +--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
    | 1e065961-ca8a-4fef-8d0b-65cb8525108a | Metadata agent     | controller | None              | :-)   | UP    | neutron-metadata-agent    |
    | 3acf922b-2984-4d6a-aa2a-ed0ccba26c1c | Linux bridge agent | controller | None              | :-)   | UP    | neutron-linuxbridge-agent |
    | 63815950-35ab-4298-8726-bfc416e2fb35 | Linux bridge agent | compute02  | None              | :-)   | UP    | neutron-linuxbridge-agent |
    | 9ec3deda-192f-4a52-882f-9a468277beee | L3 agent           | controller | nova              | :-)   | UP    | neutron-l3-agent          |
    | d41fbf03-5391-43c9-bba4-02d2fdd959e5 | DHCP agent         | controller | nova              | :-)   | UP    | neutron-dhcp-agent        |
    | f4d841d6-ef98-4fca-8548-30975d7a6aff | Linux bridge agent | compute01  | None              | :-)   | UP    | neutron-linuxbridge-agent |
    +--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
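The neutron steps require the three `net.bridge.bridge-nf-call-*` sysctls to be 1 on every node. A minimal sketch of an automated check, assuming `sysctl`-style `key = value` lines on stdin; the helper name `bridge_nf_ok` is hypothetical:

```shell
#!/usr/bin/env bash
# bridge_nf_ok is a hypothetical helper. It reads sysctl-style lines on stdin
# and succeeds only if all three bridge-nf-call-* keys are present and equal 1.
bridge_nf_ok() {
    awk -F' *= *' '
        /^net\.bridge\.bridge-nf-call-(arptables|ip6tables|iptables)/ {
            seen++
            if ($2 != 1) bad++
        }
        END { exit !(seen == 3 && bad == 0) }
    '
}

# Typical use on a node (not executed here):
#   sysctl -a 2>/dev/null | bridge_nf_ok && echo "bridge netfilter OK"
```

If the check fails right after boot, the `br_netfilter` module is probably not loaded yet, which is why the steps also write it to /etc/modules-load.d/.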

    # dashboard
    yum install openstack-dashboard
    # The settings below go in /etc/openstack-dashboard/local_settings
    OPENSTACK_HOST = "controller"
    ALLOWED_HOSTS = ['*']
    SESSION_ENGINE = 'django.contrib.sessions.backends.cache'
    CACHES = {
        'default': {
            'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
            'LOCATION': 'controller:11211',
        }
    }
    OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST
    TIME_ZONE = "Asia/Shanghai"
    OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True
    OPENSTACK_API_VERSIONS = {
        "identity": 3,
        "image": 2,
        "volume": 3,
    }
    OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"
    OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

    vim /etc/httpd/conf.d/openstack-dashboard.conf
    WSGIApplicationGroup %{GLOBAL}
    systemctl restart httpd.service memcached.service

    vim /usr/share/openstack-dashboard/openstack_dashboard/defaults.py
    WEBROOT = '/dashboard'  # from openstack_auth
    vim /usr/share/openstack-dashboard/openstack_dashboard/test/settings.py
    WEBROOT = '/dashboard'
    systemctl restart httpd.service memcached.service

http://192.168.13.20/dashboard

Domain: Default

User: admin

Password: redhat

If you cannot log in after the machine reboots, restart the services: systemctl restart httpd.service memcached.service

# Launching Instances

Enabling the following setting turns on multi-domain support:

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

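# Creating an Image

When creating an instance, its disk and RAM must not be smaller than the minimum disk and minimum RAM specified when the image was created; otherwise the instance fails to launch.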
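The minimum-disk and minimum-RAM rule can be sketched as a simple comparison. This is only an illustration of the rule, not how nova implements it; the helper name `flavor_fits_image` and the image minimums used in the example are assumptions:

```shell
#!/usr/bin/env bash
# flavor_fits_image is a hypothetical helper.
# Args: flavor_disk_gb flavor_ram_mb image_min_disk_gb image_min_ram_mb
# Succeeds when the flavor meets both image minimums.
flavor_fits_image() {
    local flavor_disk=$1 flavor_ram=$2 min_disk=$3 min_ram=$4
    [ "$flavor_disk" -ge "$min_disk" ] && [ "$flavor_ram" -ge "$min_ram" ]
}

# A flavor with 10 GB disk and 512 MB RAM against an image that requires
# at least 20 GB disk and 1024 MB RAM (illustrative numbers).
if flavor_fits_image 10 512 20 1024; then
    echo "flavor is large enough"
else
    echo "flavor too small: instance launch would fail"
fi
```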

# Creating a Flavor

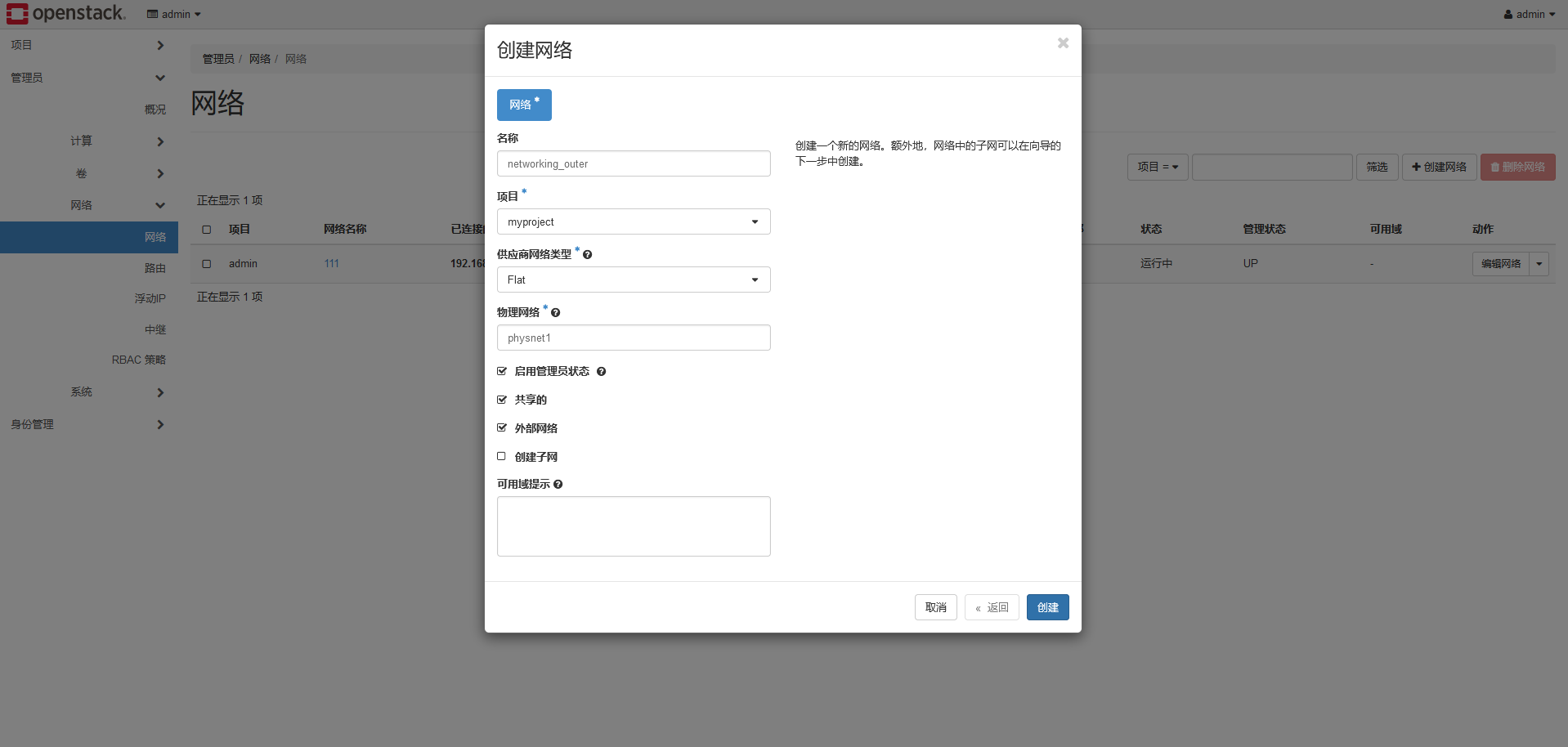

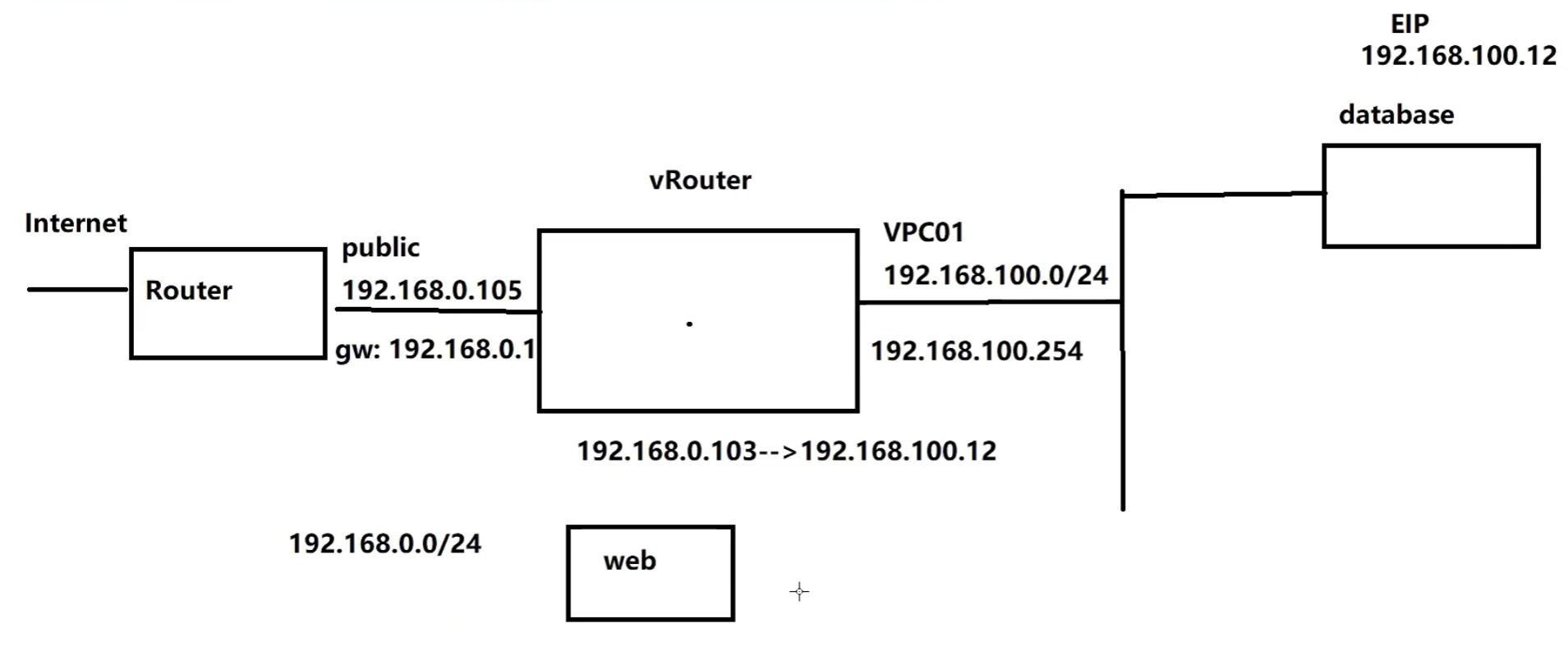

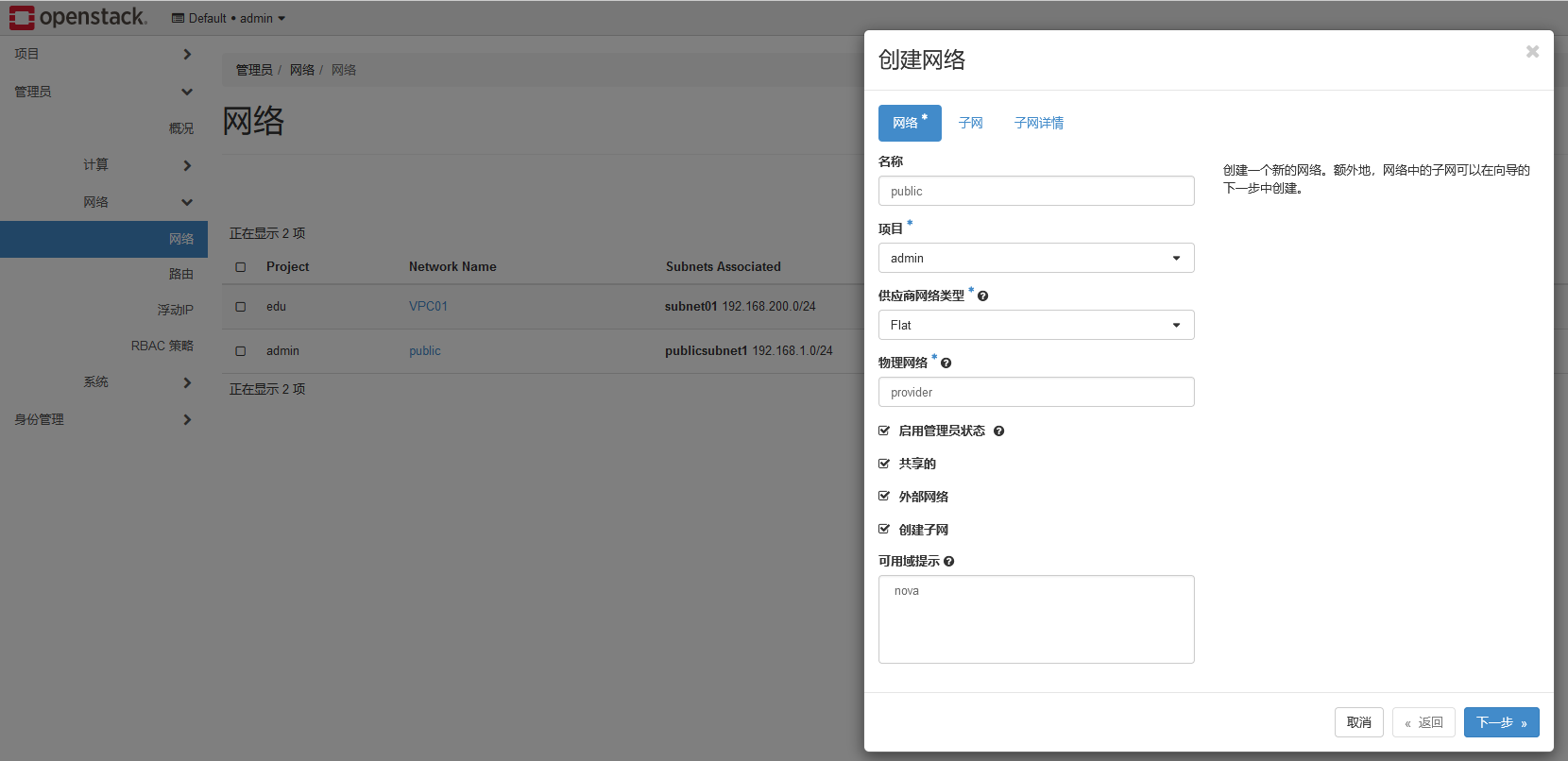

# Creating an External Network

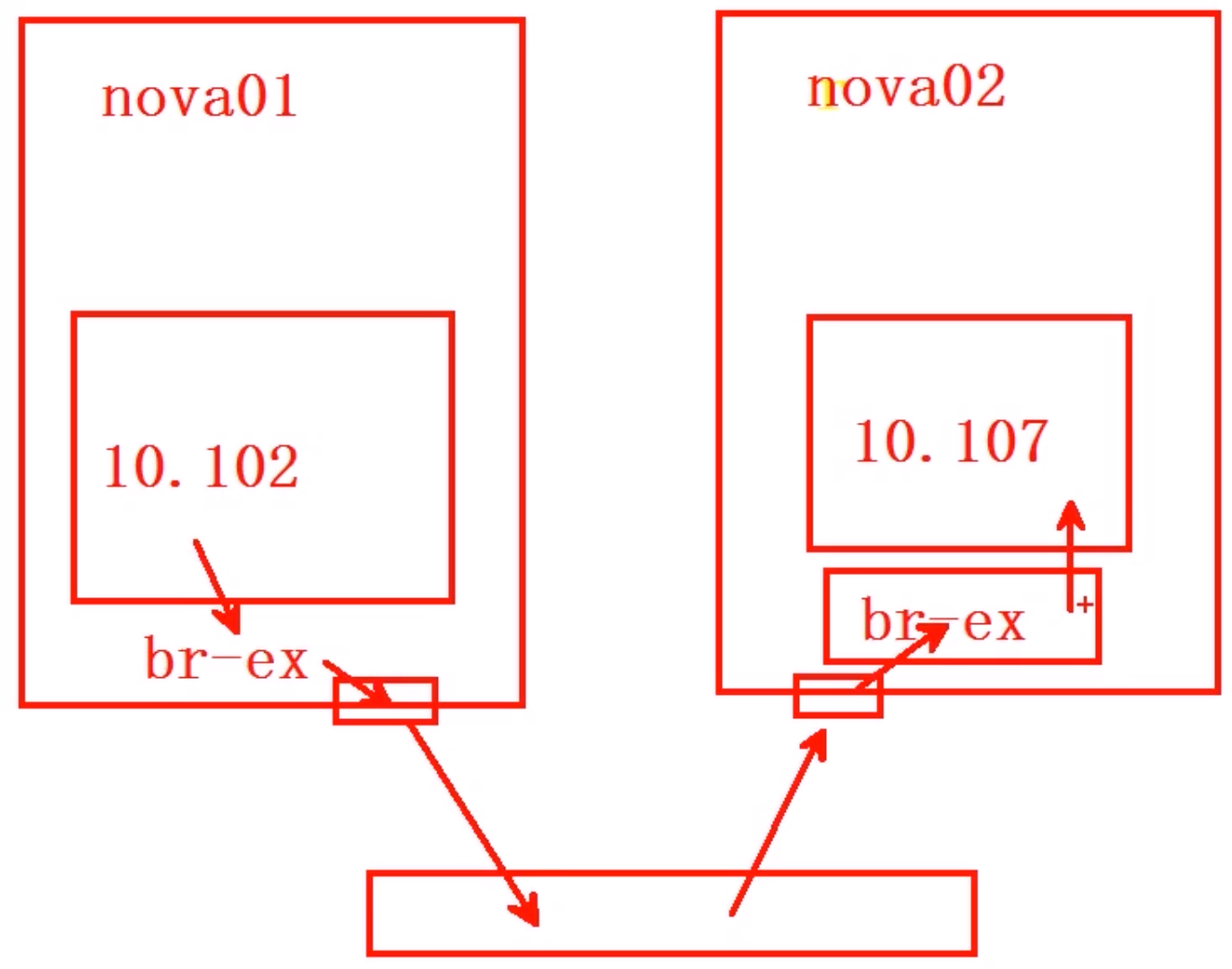

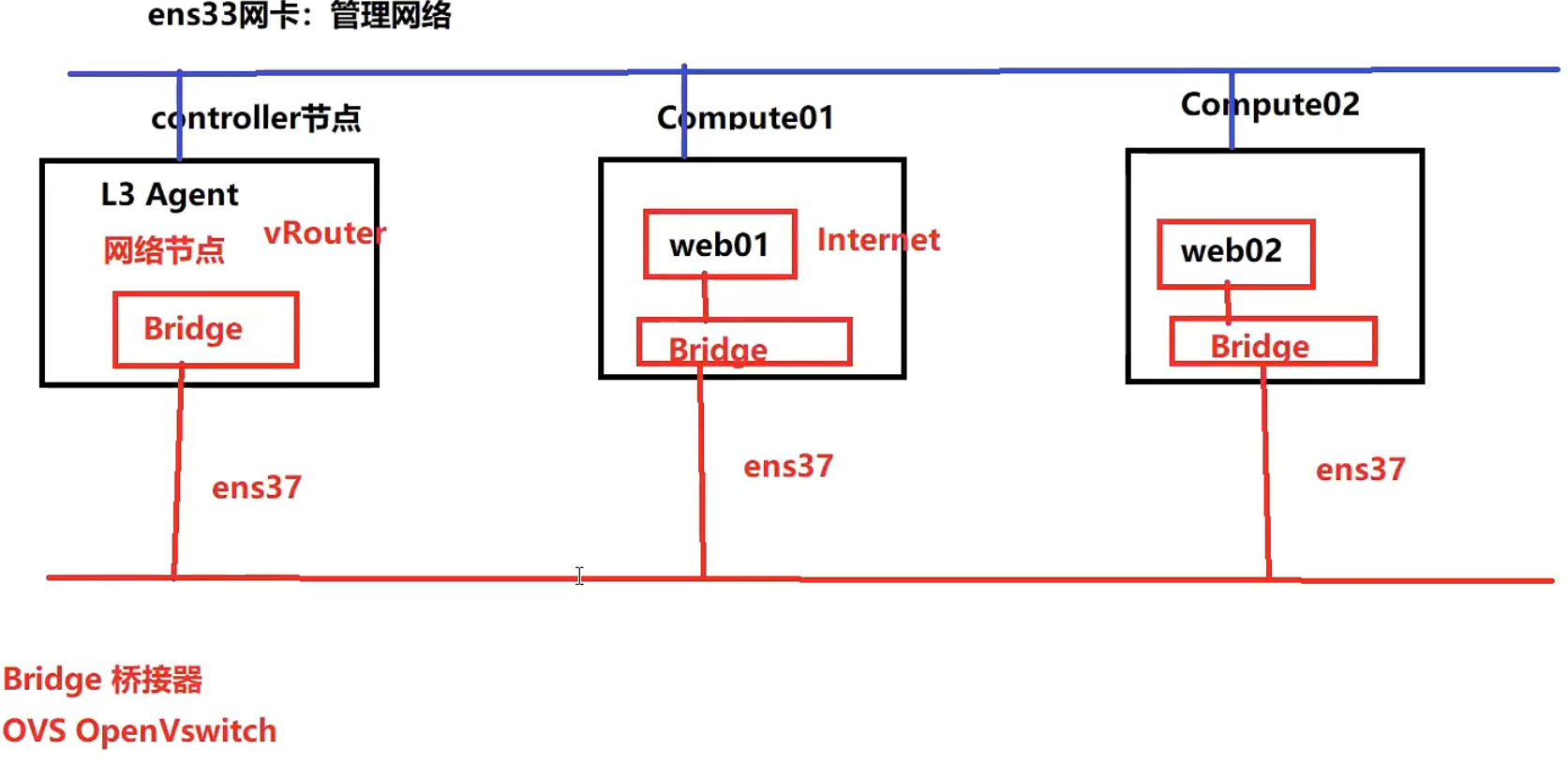

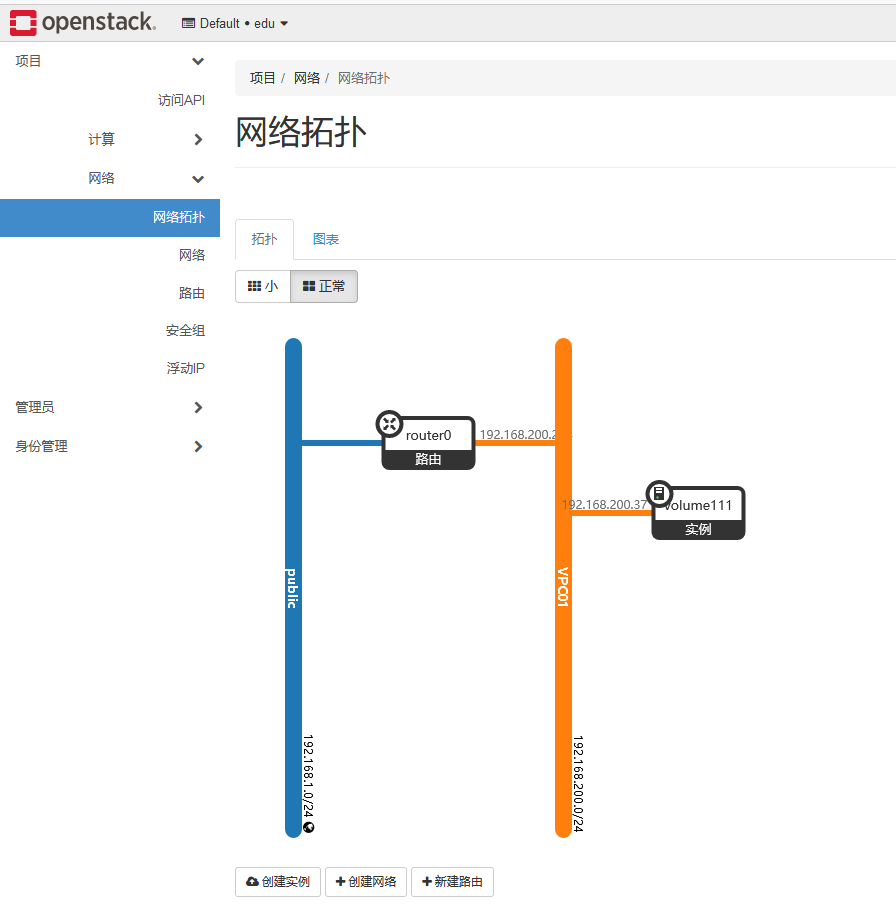

Typically a host has two NICs: one for the management network, and one for node-to-node traffic and Internet access.

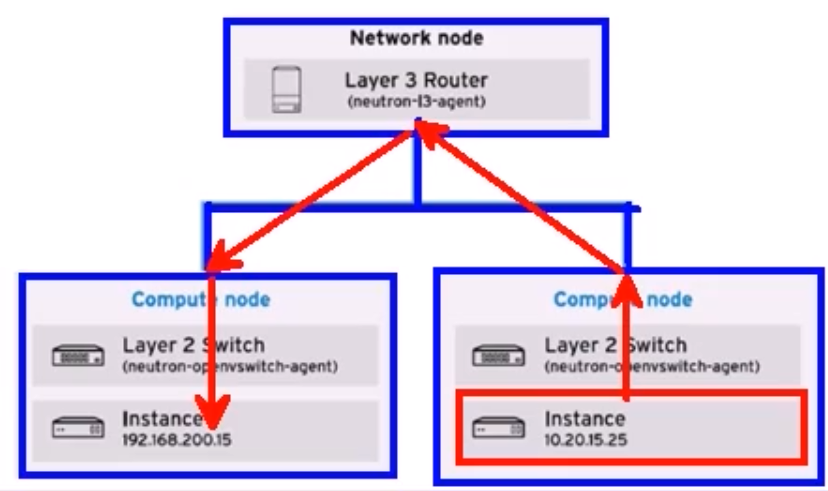

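Internet access is only possible through the L3 agent, and the L3 agent runs only on the controller. Compute nodes must reach the controller over the ens37 network shown in the figure above before they can get to the Internet. In this setup the network node can be called a vRouter.

This arrangement is called centralized routing. When each compute node has its own network node, it is called distributed routing.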

    # Add a bridged NIC
    nmcli device up ens224
    nmcli connection modify ens224 ipv4.address 192.168.1.100/24 ipv4.dns 8.8.8.8 ipv4.gateway 192.168.1.1 ipv4.method manual autoconnect yes
    nmcli connection up ens224

    # controller node
    # Configure the second NIC to provide the service network
    vim /etc/neutron/plugins/ml2/ml2_conf.ini
    [ml2_type_flat]
    flat_networks = provider
    vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini
    [linux_bridge]
    physical_interface_mappings = provider:ens224
    systemctl restart neutron-server.service neutron-linuxbridge-agent.service

    # compute node
    vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini
    [linux_bridge]
    physical_interface_mappings = provider:ens224
    systemctl restart neutron-linuxbridge-agent.service

    #######################
    ip a
    2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:87:28:54 brd ff:ff:ff:ff:ff:ff
        altname enp3s0
        inet 192.168.13.20/24 brd 192.168.13.255 scope global noprefixroute ens160
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fe87:2854/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    3: ens224: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 00:0c:29:87:28:5e brd ff:ff:ff:ff:ff:ff
        altname enp19s0
        inet 192.168.1.100/24 brd 192.168.1.255 scope global noprefixroute ens224
           valid_lft forever preferred_lft forever
        inet6 2409:8a1e:3b41:f0f0:6dd3:d917:4cba:7b6/64 scope global dynamic noprefixroute
           valid_lft 212884sec preferred_lft 126484sec
        inet6 fe80::fe05:4ac6:73b8:b7d7/64 scope link noprefixroute
           valid_lft forever preferred_lft forever

    At this point ens224 is the service network and ens160 is the management network.
    ens224 is also the uplink.

    [root@controller ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    virbr0          8000.5254003bb141       yes

Provider network types:

    local   reachable only from the local host
    vlan    tagged network
    flat    untagged network

# Creating Users and Projects (Tenants)

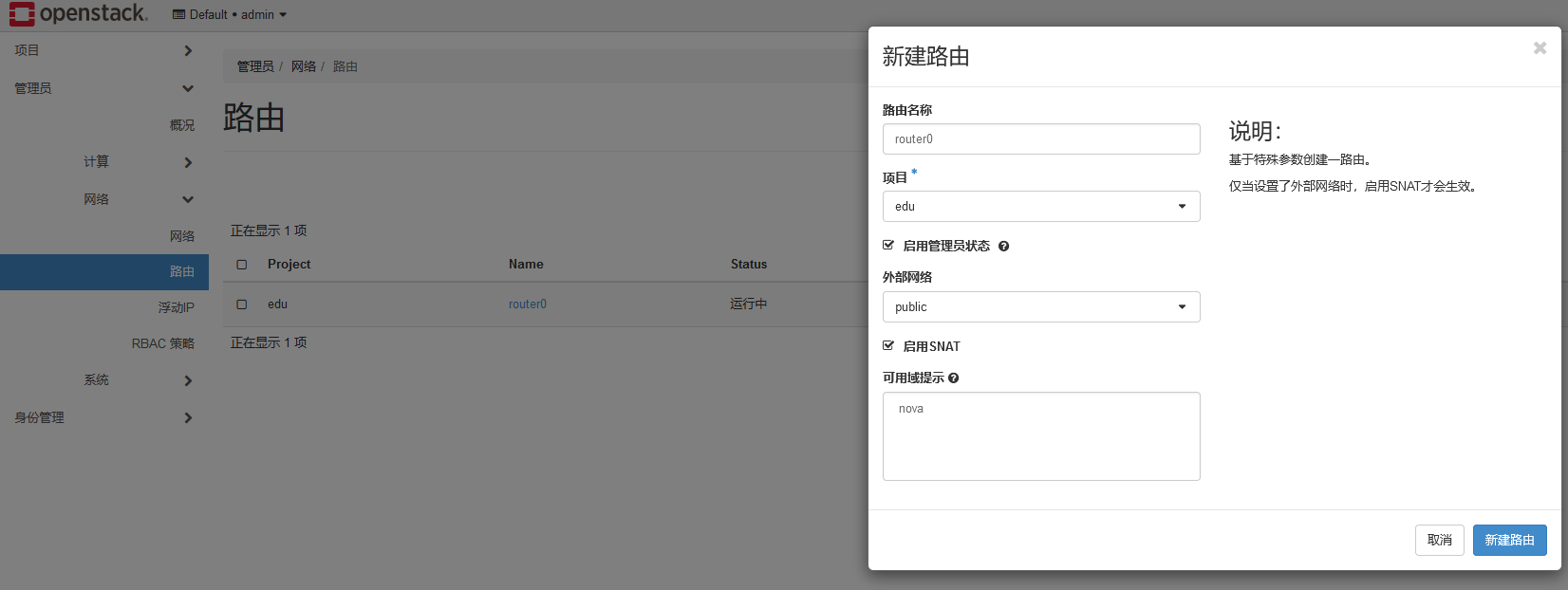

# Network Creation Workflow

Administrator steps: create the network -> create the router, with DHCP disabled.

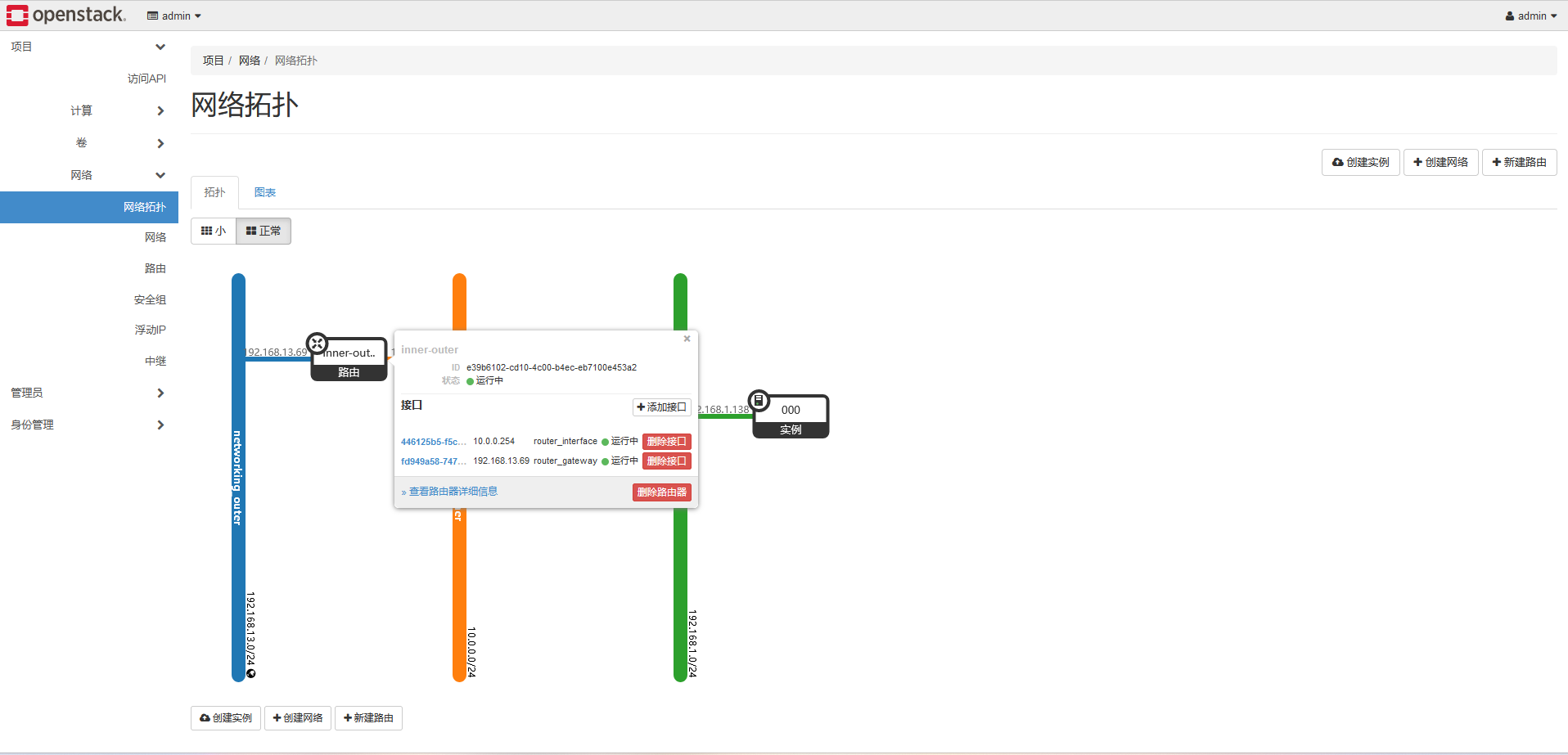

切换至租户:创建网络,添加路由器接口

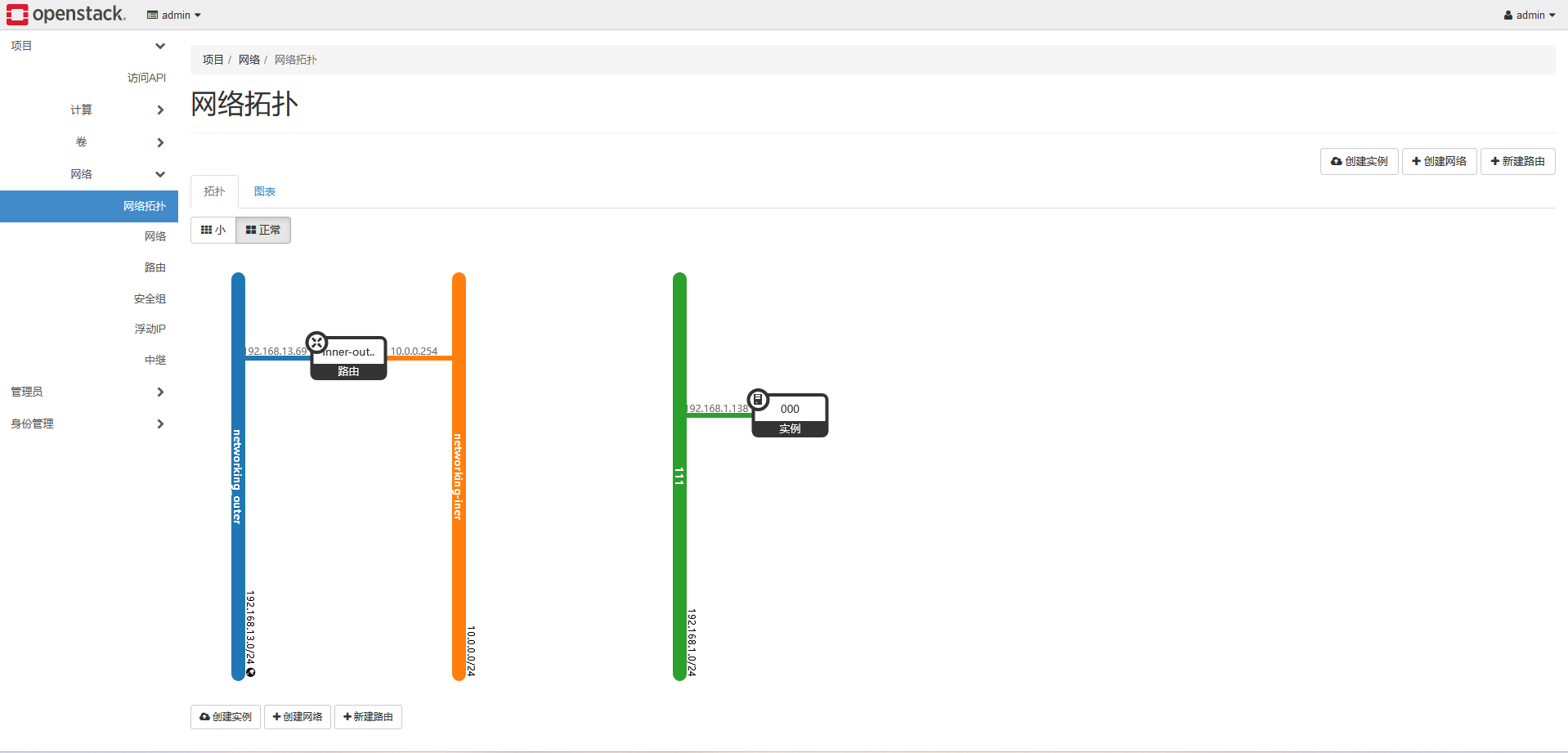

最后的网络拓扑如下

注意:管理员既有管理权限,也有项目权限。用户只有项目权限,所以创建路由不在一个地方

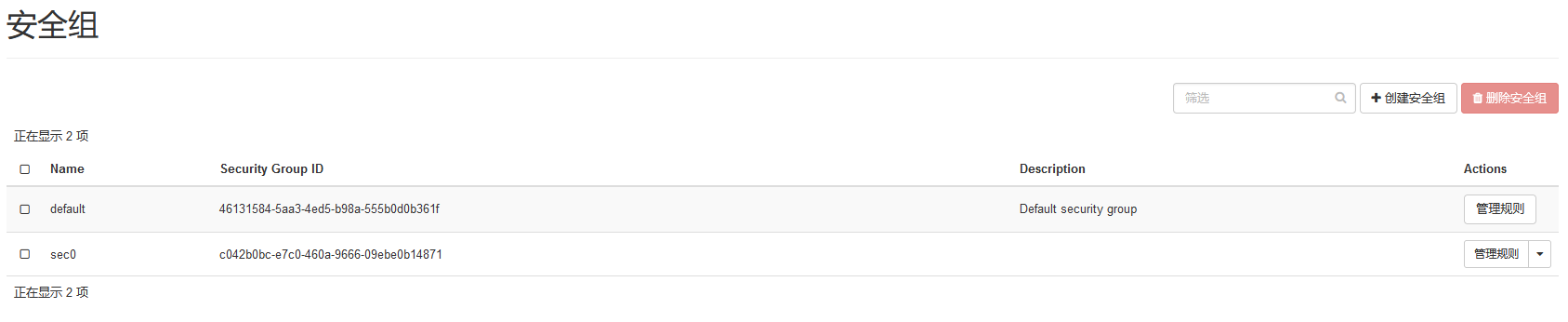

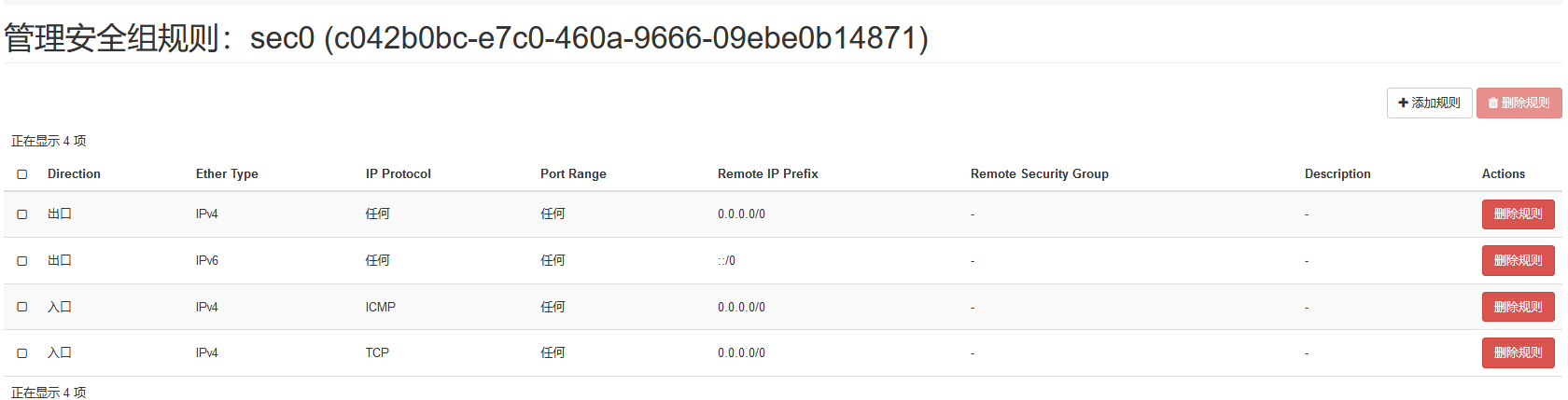

# Creating a Security Group

# Creating a Floating IP

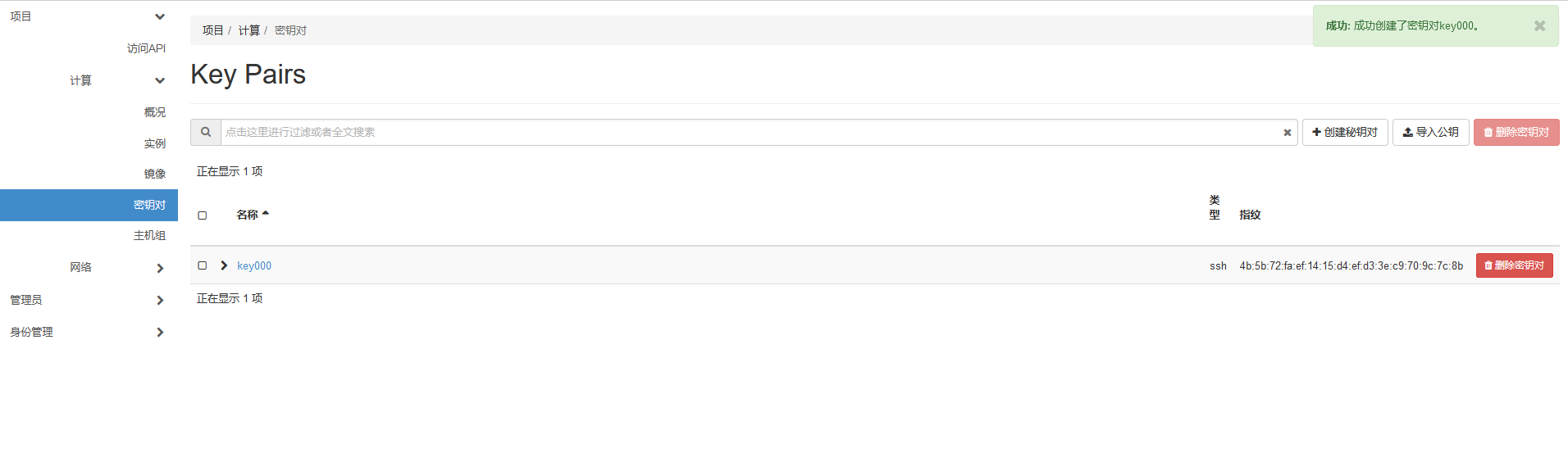

# Creating a Key Pair

# Creating an Instance

# Associating a Floating IP

    # Config update: my_ip must be the controller's IP, otherwise instances cannot be scheduled.
    # novncproxy_base_url must use an IP address, because the local machine has no hosts entry for controller.
    vim /etc/nova/nova.conf
    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    transport_url = rabbit://openstack:redhat@controller
    my_ip = 192.168.13.20
    [vnc]
    enabled = true
    server_listen = 0.0.0.0
    server_proxyclient_address = $my_ip
    novncproxy_base_url = http://192.168.13.20:6080/vnc_auto.html

Without SNAT, you need to purchase a NAT gateway; otherwise the instance cannot reach external networks.

    # Troubleshooting

    Failed to allocate the network(s), not rescheduling
    https://blog.51cto.com/royfans/5612693
    On the nova compute node, edit /etc/nova/nova.conf
    # Determine if instance should boot or fail on VIF plugging timeout. For more
    # information, refer to the documentation. (boolean value)
    vif_plugging_is_fatal=false
    # Timeout for Neutron VIF plugging event message arrival. For more information,
    # refer to the documentation. (integer value)
    # Minimum value: 0
    vif_plugging_timeout=0
    systemctl restart openstack-nova-compute.service

    Unknown auth type: None
    https://blog.51cto.com/molewan/1906366
    Edit neutron.conf on all nodes
    vim /etc/neutron/neutron.conf
    [DEFAULT]
    core_plugin = ml2
    service_plugins = router
    allow_overlapping_ips = true
    transport_url = rabbit://openstack:redhat@controller
    auth_strategy = keystone
    notify_nova_on_port_status_changes = true
    notify_nova_on_port_data_changes = true
    rpc_backend = rabbit
    Restart on the controller
    systemctl restart neutron-server.service
    Restart on the compute nodes
    systemctl restart neutron-linuxbridge-agent.service

    Load key "C:\\key000.pem": bad permissions
    https://blog.csdn.net/weixin_37989267/article/details/126270640
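The `bad permissions` error means the ssh client refuses a private key that other users can read. The error in these notes came from a Windows client, where the file's ACL has to be restricted instead; the sketch below only covers the Linux or macOS case, and the helper name `fix_key_perms` is hypothetical:

```shell
#!/usr/bin/env bash
# fix_key_perms is a hypothetical helper. It restricts a private key file
# to owner read/write (mode 600), which the ssh client accepts.
fix_key_perms() {
    local key=$1
    [ -f "$key" ] || { echo "no such key file: $key" >&2; return 1; }
    chmod 600 "$key"
}

# Typical use (not executed here):
#   fix_key_perms ~/key000.pem && ssh -i ~/key000.pem centos@192.168.1.230
```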

    # Test
    ssh -i c:\key000.pem centos@192.168.1.230

    [centos@111 ~]$ ping www.baidu.com
    PING www.a.shifen.com (36.155.132.76) 56(84) bytes of data.
    64 bytes from 36.155.132.76 (36.155.132.76): icmp_seq=1 ttl=52 time=12.3 ms
    64 bytes from 36.155.132.76 (36.155.132.76): icmp_seq=2 ttl=52 time=12.8 ms

    [centos@111 ~]$ ping 192.168.1.1
    PING 192.168.1.1 (192.168.1.1) 56(84) bytes of data.
    64 bytes from 192.168.1.1: icmp_seq=1 ttl=63 time=0.963 ms
    64 bytes from 192.168.1.1: icmp_seq=2 ttl=63 time=2.33 ms

    [centos@111 ~]$ ping 192.168.100.254
    PING 192.168.100.254 (192.168.100.254) 56(84) bytes of data.
    64 bytes from 192.168.100.254: icmp_seq=1 ttl=64 time=0.848 ms
    64 bytes from 192.168.100.254: icmp_seq=2 ttl=64 time=1.16 ms

# Managing OpenStack from the Command Line

    # Create environment variables for different users
    vim user2-openrc
    export OS_USERNAME=user2
    export OS_PASSWORD=redhat
    export OS_PROJECT_NAME=edu
    export OS_USER_DOMAIN_NAME=Default
    export OS_PROJECT_DOMAIN_NAME=Default
    export OS_AUTH_URL=http://controller:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export PS1='[\u@\h \W user2]#'

    [root@controller ~ user2]#source user2-openrc
    [root@controller ~ user2]#openstack server list
    +--------------------------------------+------+--------+-------------------------------------+----------------+-----------+
    | ID                                   | Name | Status | Networks                            | Image          | Flavor    |
    +--------------------------------------+------+--------+-------------------------------------+----------------+-----------+
    | b389755c-c00a-494c-8df1-9fec65a2cadc | 111  | ACTIVE | VPC01=192.168.100.27, 192.168.1.230 | centos7-qcows2 | t.large.7 |
    +--------------------------------------+------+--------+-------------------------------------+----------------+-----------+

    [root@controller ~ user2]#source admin-openrc
    [root@controller ~]#openstack server list --all
    +--------------------------------------+------+--------+-------------------------------------+----------------+-----------+
    | ID                                   | Name | Status | Networks                            | Image          | Flavor    |
    +--------------------------------------+------+--------+-------------------------------------+----------------+-----------+
    | b389755c-c00a-494c-8df1-9fec65a2cadc | 111  | ACTIVE | VPC01=192.168.100.27, 192.168.1.230 | centos7-qcows2 | t.large.7 |
    +--------------------------------------+------+--------+-------------------------------------+----------------+-----------+

    # List instances
    nova list
    openstack server list
    # Delete an instance
    openstack server delete 111

    # List key pairs
    [root@controller ~ user2]#openstack keypair list
    +--------+-------------------------------------------------+
    | Name   | Fingerprint                                     |
    +--------+-------------------------------------------------+
    | key000 | 4b:5b:72:fa:ef:14:15:d4:ef:d3:3e:c9:70:9c:7c:8b |
    +--------+-------------------------------------------------+
    openstack keypair delete key000

    # List security groups
    [root@controller ~ user2]#openstack security group list
    +--------------------------------------+---------+------------------------+----------------------------------+------+
    | ID                                   | Name    | Description            | Project                          | Tags |
    +--------------------------------------+---------+------------------------+----------------------------------+------+
    | 46131584-5aa3-4ed5-b98a-555b0d0b361f | default | Default security group | e087871141144d04809921dc4695c91a | []   |
    | c042b0bc-e7c0-460a-9666-09ebe0b14871 | sec0    |                        | e087871141144d04809921dc4695c91a | []   |
    +--------------------------------------+---------+------------------------+----------------------------------+------+

    # List floating IPs
    [root@controller ~ user2]#openstack floating ip list
    +--------------------------------------+---------------------+------------------+--------------------------------------+--------------------------------------+----------------------------------+
    | ID                                   | Floating IP Address | Fixed IP Address | Port                                 | Floating Network                     | Project                          |
    +--------------------------------------+---------------------+------------------+--------------------------------------+--------------------------------------+----------------------------------+
    | 5e0d6da9-4d2e-4958-9772-0fea529062c4 | 192.168.1.226       | None             | None                                 | 4c8c74c7-8764-45c3-a7be-4b4de94aca42 | e087871141144d04809921dc4695c91a |
    | 94ebf368-704b-4b68-8ea0-bf054cc356c0 | 192.168.1.230       | 192.168.100.27   | 80555d60-734e-404b-83a1-e7a002e8a019 | 4c8c74c7-8764-45c3-a7be-4b4de94aca42 | e087871141144d04809921dc4695c91a |
    +--------------------------------------+---------------------+------------------+--------------------------------------+--------------------------------------+----------------------------------+

    # List routers
    [root@controller ~ user2]#openstack router list
    +--------------------------------------+---------+--------+-------+----------------------------------+
    | ID                                   | Name    | Status | State | Project                          |
    +--------------------------------------+---------+--------+-------+----------------------------------+
    | e4867095-b421-4cf8-8f6d-e461fb6bc005 | router0 | ACTIVE | UP    | e087871141144d04809921dc4695c91a |
    +--------------------------------------+---------+--------+-------+----------------------------------+

    # List subnets
    [root@controller ~ user2]#openstack subnet list
    +--------------------------------------+---------------+--------------------------------------+------------------+
    | ID                                   | Name          | Network                              | Subnet           |
    +--------------------------------------+---------------+--------------------------------------+------------------+
    | 0bfeeedd-40b8-4604-bd29-c64537e54095 | publicsubnet1 | 4c8c74c7-8764-45c3-a7be-4b4de94aca42 | 192.168.1.0/24   |
    | f601029d-74bf-4ccc-a03a-a2d42731a2ae | subnet01      | 1630bf29-e5d9-41e7-971b-32f84e1cc8cb | 192.168.100.0/24 |
    +--------------------------------------+---------------+--------------------------------------+------------------+

    [root@controller ~ user2]#source admin-openrc
    [root@controller ~]#openstack project list
    +----------------------------------+-----------+
    | ID                               | Name      |
    +----------------------------------+-----------+
    | 1845901c8800478b81b1e80de5148cf3 | admin     |
    | 49bda9ea06794ea180ab0d45e714ac83 | myproject |
    | 955a68e6247048d3a9a7d99a0e6fc895 | service   |
    | e087871141144d04809921dc4695c91a | edu       |
    +----------------------------------+-----------+

    [root@controller ~]#openstack user list
    +----------------------------------+-----------+
    | ID                               | Name      |
    +----------------------------------+-----------+
    | 7b028a214e104565b90648e521c9a490 | admin     |
    | ea4d56a09b484ccc81a363b95064b4d0 | glance    |
    | 262dea2a3392437d9ef45e534360049e | myuser    |
    | 077dc3eac993404586105cf3c88cfe0b | placement |
    | 8de7eefc14074d4a857ad184aa1f02ba | nova      |
    | 6f8e00c5f08b460c97bc30bbc4377531 | neutron   |
    | 8d86d72b27d74bf18b5a36be3fc6cb40 | user2     |
    +----------------------------------+-----------+

    [root@controller ~]# openstack flavor list
    +--------------------------------------+-----------+------+------+-----------+-------+-----------+
    | ID                                   | Name      | RAM  | Disk | Ephemeral | VCPUs | Is Public |
    +--------------------------------------+-----------+------+------+-----------+-------+-----------+
    | 2d14e3c1-42e7-4f24-aeb6-79560d8effdb | t.small.1 | 512  | 10   | 0         | 1     | True      |
    | 3917bde7-c655-4892-a1e2-2a1af2be58c8 | t.large.7 | 1024 | 20   | 0         | 2     | True      |
    +--------------------------------------+-----------+------+------+-----------+-------+-----------+

    [root@controller ~]# openstack image list
    +--------------------------------------+----------------+--------+
    | ID                                   | Name           | Status |
    +--------------------------------------+----------------+--------+
    | 30bddbe2-25d1-4b87-86a2-6eaf5d03f9d7 | centos7-qcows2 | active |
    | e4c7903b-fc16-4d62-b60c-0e5ca211054e | cirros         | active |
    +--------------------------------------+----------------+--------+
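Since each user needs its own openrc file with the same set of variables, the file can be generated rather than typed by hand. A minimal sketch, assuming the `Default` domain and the `http://controller:5000/v3` auth URL used throughout these notes; the helper name `write_openrc` is hypothetical:

```shell
#!/usr/bin/env bash
# write_openrc is a hypothetical helper. It prints an openrc file for the
# given user, project and password, with the same variables as user2-openrc.
# Note: values containing spaces or shell metacharacters are not quoted here.
write_openrc() {
    local user=$1 project=$2 password=$3
    cat <<EOF
export OS_USERNAME=$user
export OS_PASSWORD=$password
export OS_PROJECT_NAME=$project
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
EOF
}

# Typical use (not executed here):
#   write_openrc user2 edu redhat > user2-openrc && source user2-openrc
```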

# Viewing Logs

    cd /var/log/nova
    nova-api.log  nova-conductor.log  nova-manage.log  nova-novncproxy.log  nova-scheduler.log
    [root@controller nova]# cd ../neutron/
    [root@controller neutron]# ls
    dhcp-agent.log  l3-agent.log  linuxbridge-agent.log  metadata-agent.log  server.log

    For more detailed logs, enable debug-level logging
    # Change on every node
    vim nova.conf
    debug=true

When instances use local storage, their disks are kept at this location on the local disk:

/var/lib/nova/instances

    [root@compute01 instances]# ls
    431b7e00-6d33-48f6-9b2b-2241410bddea  _base  compute_nodes  locks
    [root@compute01 instances]# cd 431b7e00-6d33-48f6-9b2b-2241410bddea/
    [root@compute01 431b7e00-6d33-48f6-9b2b-2241410bddea]# ls
    console.log  disk  disk.info

    _base holds the base disk
    xxx-xxx-xxx-xxx-xxx holds the differencing disk

Open-source OpenStack uses local disks by default.
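When chasing a failure across several of these log files, it helps to pull only the error lines. A minimal sketch, assuming the default oslo.log line layout in which the level (`ERROR`) appears as a space-delimited field; the helper name `recent_errors` is hypothetical:

```shell
#!/usr/bin/env bash
# recent_errors is a hypothetical helper. It prints the last N lines that
# contain the ERROR level from every *.log file in a directory.
recent_errors() {
    local dir=$1 count=${2:-20}
    grep -h ' ERROR ' "$dir"/*.log 2>/dev/null | tail -n "$count"
}

# Typical use (not executed here):
#   recent_errors /var/log/nova 50
#   recent_errors /var/log/neutron
```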

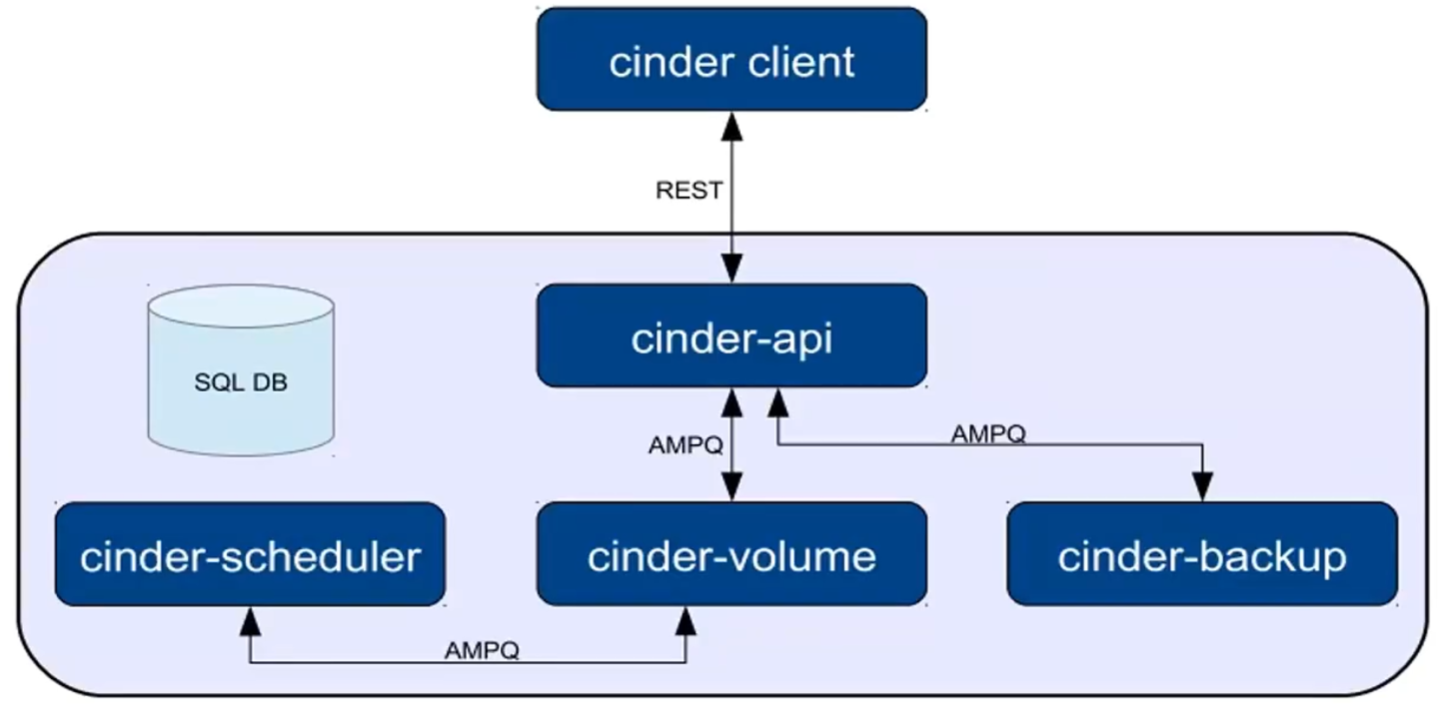

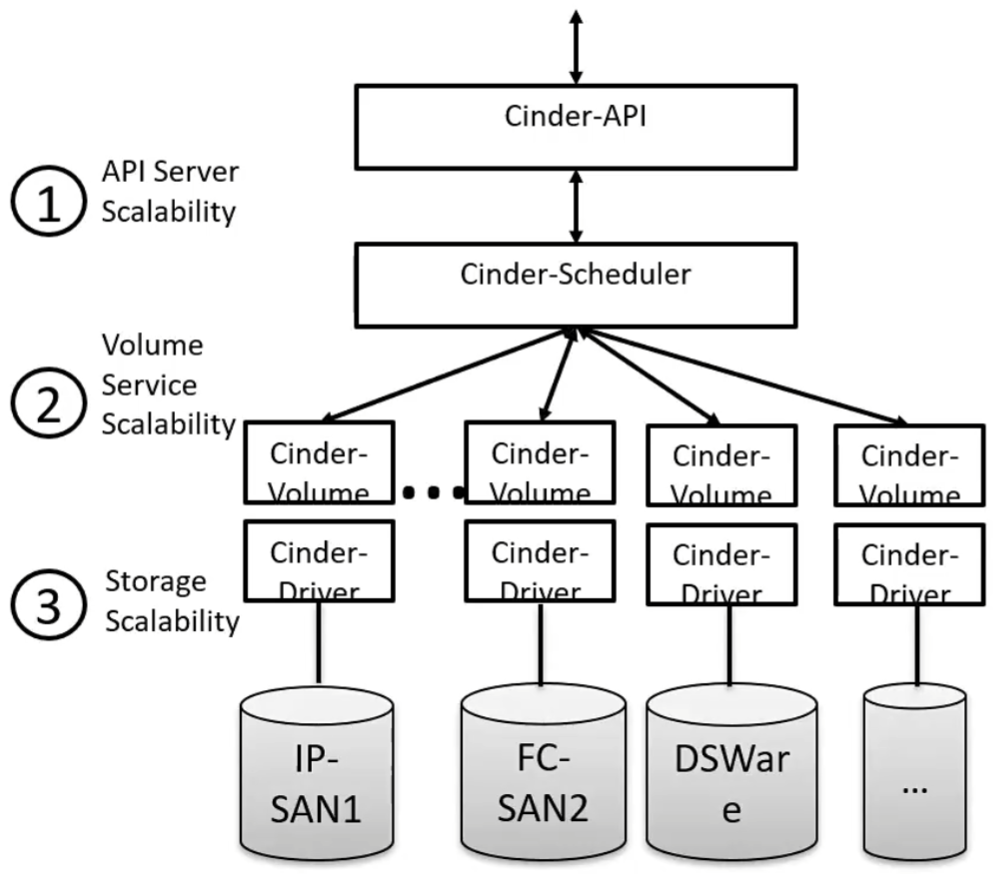

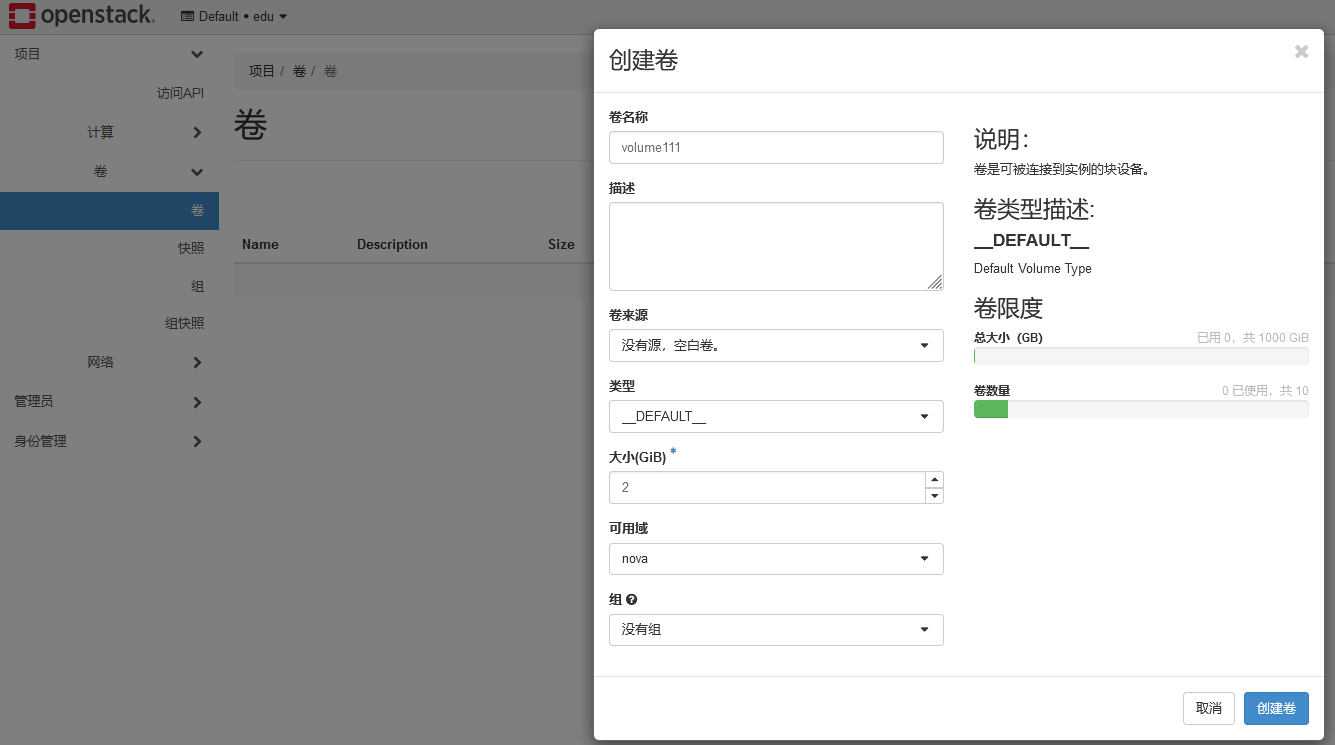

# cinder

    # Install cinder
    # controller node
    mysql -u root -p
    CREATE DATABASE cinder;
    GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' \
      IDENTIFIED BY 'redhat';
    GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' \
      IDENTIFIED BY 'redhat';

    openstack user create --domain default --password-prompt cinder
    openstack role add --project service --user cinder admin
    openstack service create --name cinderv2 \
      --description "OpenStack Block Storage" volumev2
    openstack service create --name cinderv3 \
      --description "OpenStack Block Storage" volumev3
    openstack endpoint create --region RegionOne \
      volumev2 public http://controller:8776/v2/%\(project_id\)s
    openstack endpoint create --region RegionOne \
      volumev2 internal http://controller:8776/v2/%\(project_id\)s
    openstack endpoint create --region RegionOne \
      volumev2 admin http://controller:8776/v2/%\(project_id\)s
    openstack endpoint create --region RegionOne \
      volumev3 public http://controller:8776/v3/%\(project_id\)s
    openstack endpoint create --region RegionOne \
      volumev3 internal http://controller:8776/v3/%\(project_id\)s
    openstack endpoint create --region RegionOne \
      volumev3 admin http://controller:8776/v3/%\(project_id\)s
    yum install openstack-cinder -y

    vim /etc/cinder/cinder.conf
    [database]
    connection = mysql+pymysql://cinder:redhat@controller/cinder
    [DEFAULT]
    transport_url = rabbit://openstack:redhat@controller
    auth_strategy = keystone
    my_ip = 192.168.1.20
    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = cinder
    password = redhat
    [oslo_concurrency]
    lock_path = /var/lib/cinder/tmp

    su -s /bin/sh -c "cinder-manage db sync" cinder
    vim /etc/nova/nova.conf
    [cinder]
    os_region_name = RegionOne
    systemctl restart openstack-nova-api.service
    systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service --now

    # storage node: the controller node is reused here; add one disk to the controller
    yum install lvm2 device-mapper-persistent-data
    [root@controller ~]# lsblk
    NAME        MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
    sr0          11:0    1   1G  0 rom
    nvme0n1     259:0    0  40G  0 disk
    └─nvme0n1p1 259:1    0  40G  0 part /
    nvme0n2     259:2    0  80G  0 disk
    pvcreate /dev/nvme0n2
    vgcreate cinder-volumes /dev/nvme0n2
    yum install openstack-cinder targetcli python3-keystone -y

    vim /etc/cinder/cinder.conf
    [lvm]
    volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
    volume_group = cinder-volumes
    target_protocol = iscsi
    target_helper = lioadm
    [DEFAULT]
    enabled_backends = lvm
    glance_api_servers = http://controller:9292
    systemctl enable openstack-cinder-volume.service target.service --now

    # Verify
    [root@controller ~]# . admin-openrc
    [root@controller ~]# openstack volume service list
    +------------------+----------------+------+---------+-------+----------------------------+
    | Binary           | Host           | Zone | Status  | State | Updated At                 |
    +------------------+----------------+------+---------+-------+----------------------------+
    | cinder-scheduler | controller     | nova | enabled | up    | 2024-04-04T09:35:37.000000 |
    | cinder-volume    | controller@lvm | nova | enabled | up    | 2024-04-04T09:35:46.000000 |
    +------------------+----------------+------+---------+-------+----------------------------+

    [root@controller ~]# vgdisplay -v cinder-volumes
      --- Logical volume ---
      LV Path                /dev/cinder-volumes/volume-84b2b73b-bfad-4bfa-97e7-fc6be4eb10a9
      LV Name                volume-84b2b73b-bfad-4bfa-97e7-fc6be4eb10a9
      VG Name                cinder-volumes
      LV UUID                Q2qgz8-UJdJ-QlWd-mLo9-N3CW-WP3P-oMqRXN
      LV Write Access        read/write
      LV Creation host, time controller, 2024-04-04 17:41:24 +0800
      LV Pool name           cinder-volumes-pool
      LV Status              available
      # open                 0
      LV Size                2.00 GiB
      Mapped size            0.00%
      Current LE             512
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     8192
      Block device           253:4

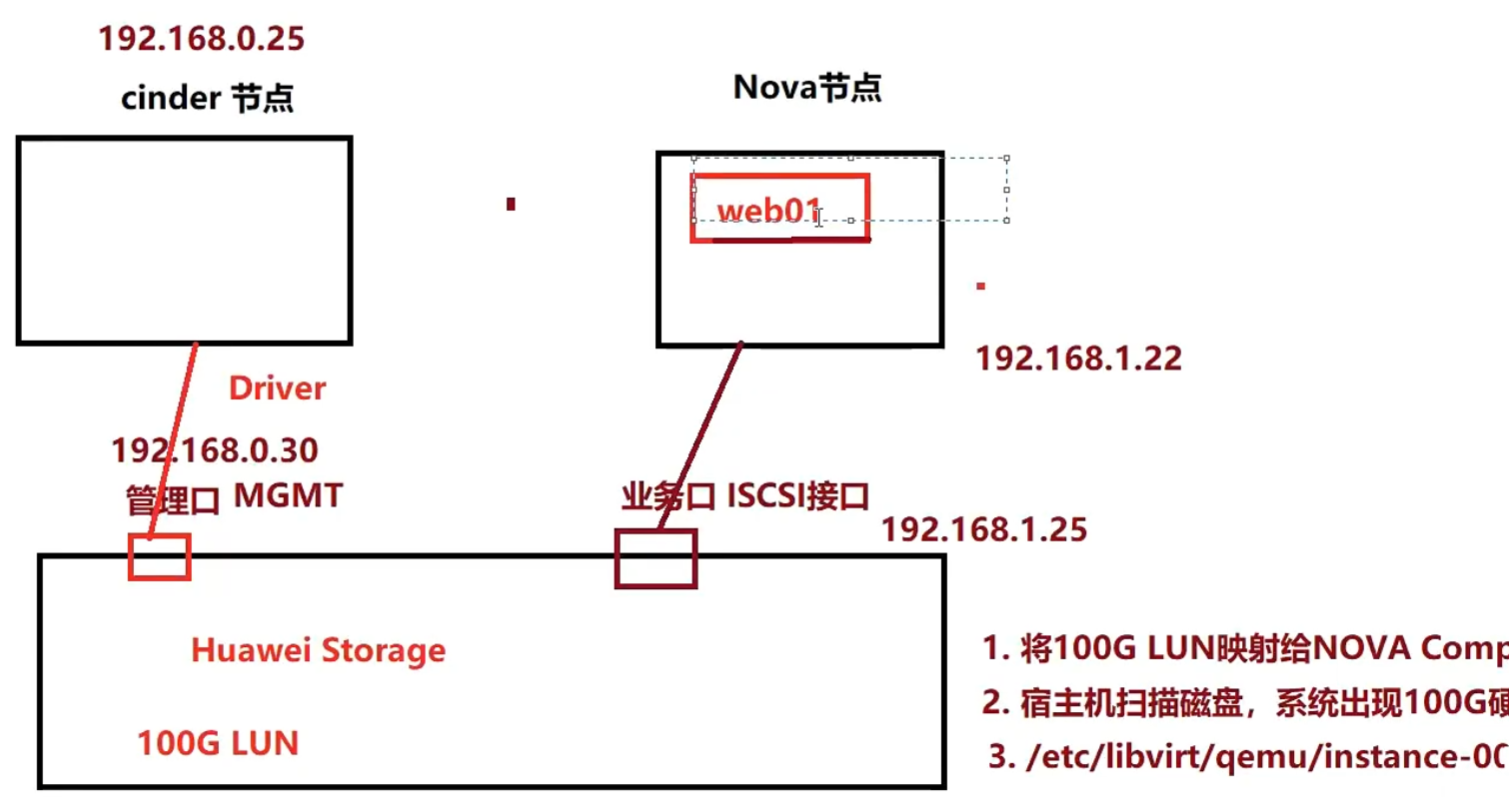

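Cinder only manages storage; data written to disk does not pass through Cinder.

Attaching a volume to an instance is an operation performed by Nova.

When a volume is attached to an instance, it is first attached to the host the instance runs on and then mapped into the instance through KVM.

During the attach, Nova requests the volume information from cinder-api.

The storage array adds the host-to-LUN mapping.

The host rescans the SCSI bus.

Multipath creates the virtual disk.

Nova calls the libvirt API to add the disk to the domain XML.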

experiment: use glusterfs

    # No need to probe your own IP; this node's IP is 192.168.9.77
    gluster peer probe 192.168.9.88
    gluster peer status
    gluster volume create aaa replica 2 192.168.9.77:/volume/node1 192.168.9.88:/volume/node2
    gluster volume start aaa
    gluster volume info aaa

    # Test on the controller node. FUSE is provided in user space, not in kernel space
    yum install glusterfs-fuse
    vim /etc/cinder/cinder.conf
    [DEFAULT]
    enabled_backends = glusterfs123
    [glusterfs123]
    volume_driver = cinder.volume.drivers.glusterfs.GlusterfsDriver
    gluster_shares_config = /etc/cinder/haha
    volume_backend_name = rhs

    vim /etc/cinder/haha
    192.168.9.77:/firstvol
    systemctl restart openstack-cinder-api.service

    . admin-openrc
    cinder type-create glusterfs
    cinder type-key glusterfs set volume_backend_name=rhs

    # compute
    yum install glusterfs-fuse -y

    # Create GlusterFS storage in horizon and attach it to an instance
    cinder create --display-name aaa
    nova volume-attach server1 volume disk1

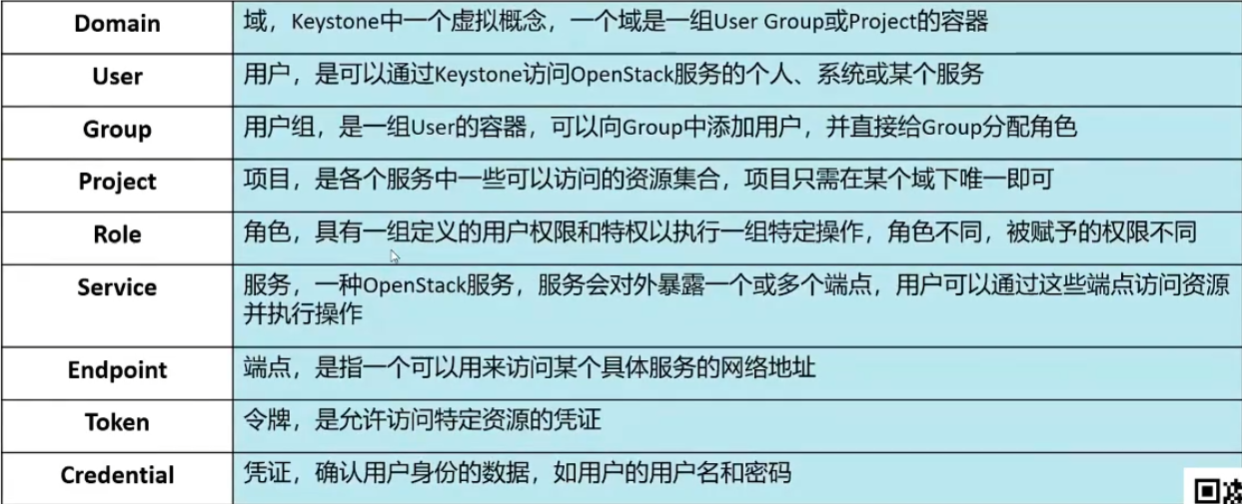

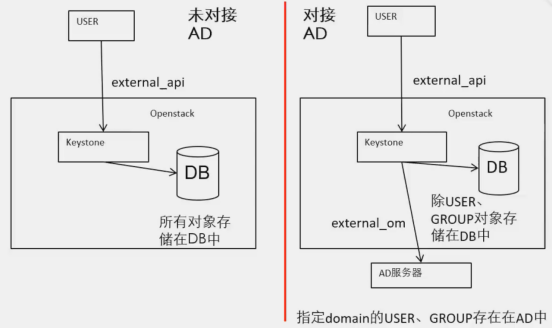

# keystone

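Domain: the owner of keystone resources (project, user, group)

Project: a tenant, the owner of resources in other components (virtual machines, images, and so on)

User: a user of the cloud system

Group: a user group, which lets several users be handled as a unit for role management

Role: access control is role-based

Trust: delegation, temporarily granting roles you hold to someone else

Service: a set of related functions, such as the compute, network, image, and storage services

Endpoint: must be associated with a service and represents that service's access address. A service usually provides three types of endpoint: public, internal, admin

Region: in keystone this basically represents a data center

Policy: access control policy, defining the rules for API access

Assignment: an (actor, target, role) triple is called an assignment. An actor is a user or a group, and a target is a domain or a project. Each assignment represents one grant operation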
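The assignment triple can be modelled directly as data. The following is a minimal sketch, assuming a string encoding such as `user:user2` or `project:Huawei` for actors and targets. The group `ops`, the role names, and the helper `roles_for` are hypothetical and are not part of keystone's API.

```python
from typing import NamedTuple


class Assignment(NamedTuple):
    actor: str   # a user or a group, e.g. "user:user2" or "group:ops"
    target: str  # a domain or a project, e.g. "project:Huawei"
    role: str


def roles_for(user, groups, target, assignments):
    """Collect the roles a user holds on a target, directly or via groups."""
    actors = {f"user:{user}"} | {f"group:{g}" for g in groups}
    return {a.role for a in assignments
            if a.actor in actors and a.target == target}


# Hypothetical sample data for the sketch.
ASSIGNMENTS = [
    Assignment("user:user2", "project:Huawei", "admin"),
    Assignment("group:ops", "project:Huawei", "reader"),
    Assignment("user:user2", "domain:default", "member"),
]

if __name__ == "__main__":
    print(roles_for("user2", ["ops"], "project:Huawei", ASSIGNMENTS))
```

A role only takes effect once it appears in such a triple, which is why a role with no assignment grants nothing.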

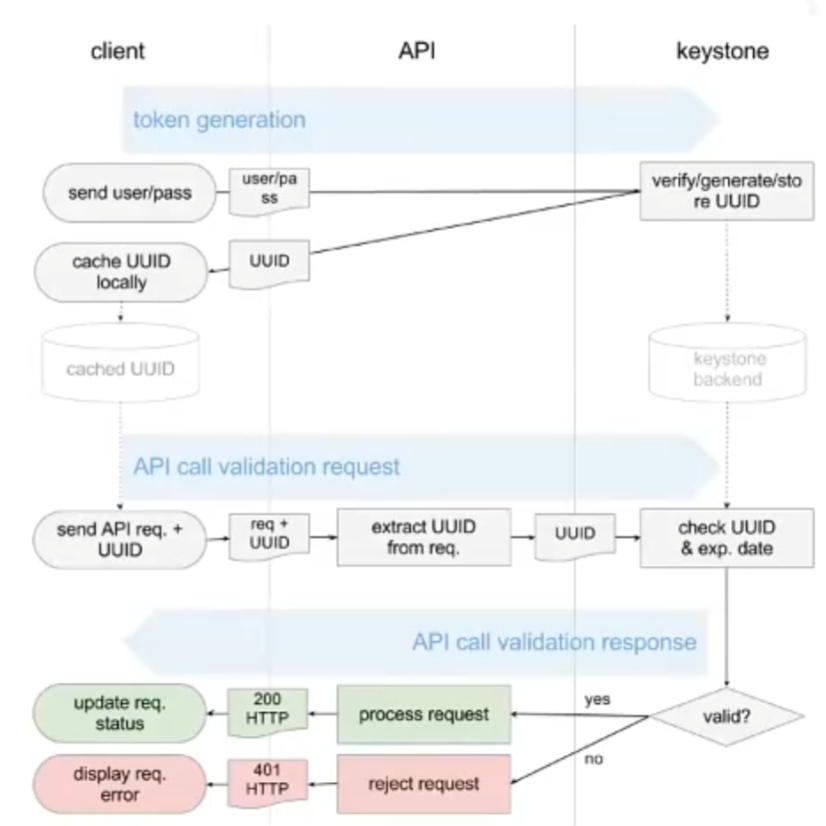

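Token: the credential a user presents to access a service. It represents the user's account information and generally contains user information, scope information (project, domain, or trust), and role information. Token types include PKI, UUID, PKIZ, and Fernet.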

    openstack user create user2
    openstack user set --password-prompt user2
    openstack project create Huawei
    openstack role add --user user2 --project Huawei admin

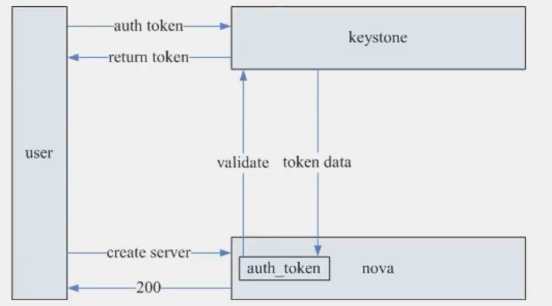

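The user requests a token from keystone

The user accesses a service with the token

The component being accessed validates the token

The user receives the response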
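The four steps above can be sketched as a toy flow. This is not keystone's token format (it is neither Fernet nor UUID). It is a minimal HMAC-signed token, assuming a shared key `SECRET` and hypothetical helpers `issue_token`, `validate_token`, and `list_servers`, meant only to show where issuing and validation sit.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"demo-secret"  # hypothetical shared key, for illustration only


def issue_token(user: str, project: str, roles: list, ttl: int = 3600) -> str:
    """Step 1: the identity service issues a signed token."""
    payload = json.dumps(
        {"user": user, "project": project, "roles": roles,
         "exp": time.time() + ttl}
    ).encode()
    sig = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(payload).decode() + "." + sig


def validate_token(token: str):
    """Step 3: check signature and expiry; return the claims or None."""
    try:
        body, sig = token.rsplit(".", 1)
        payload = base64.urlsafe_b64decode(body.encode())
    except ValueError:
        return None
    expected = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return None
    claims = json.loads(payload)
    return claims if claims["exp"] > time.time() else None


def list_servers(token: str) -> dict:
    """Steps 2 and 4: a service receives the token and sends a reply."""
    claims = validate_token(token)
    if claims is None:
        return {"status": 401}
    return {"status": 200, "project": claims["project"]}


if __name__ == "__main__":
    print(list_servers(issue_token("user2", "Huawei", ["admin"])))
```

The token carries the user, scope, and roles, so the service can answer without asking the user for a password again.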

    vim /etc/openstack-dashboard/
    cinder_policy.json keystone_policy.json neutron_policy.json nova_policy.json
    glance_policy.json local_settings nova_policy.d/

    cp glance_policy.json /etc/glance/policy.json
    chown

    # The JSON files under the dashboard directory are templates. Copy them to the
    # matching service's config directory, edit them, and restart the service.

    # By default an uploaded image cannot be made public; policy.json must be changed.

Permissions are not decided by keystone but by each service itself (policy.json). keystone only performs authentication.
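A minimal sketch of how a service might evaluate such a policy file follows. It is a simplified stand-in and not the oslo.policy engine: it only understands empty rules and `role:` checks joined by `or`. The `POLICY` contents and the `is_allowed` helper are assumptions chosen for illustration.

```python
# Hypothetical, simplified policy: action name -> rule string.
POLICY = {
    "publicize_image": "role:admin",
    "get_image": "",
    "add_image": "role:admin or role:member",
}


def is_allowed(action: str, roles: set, policy: dict = POLICY) -> bool:
    """Return True if any role held by the caller satisfies the rule."""
    rule = policy.get(action)
    if rule is None:
        return False  # unknown action: deny in this sketch
    if rule == "":
        return True   # empty rule: anyone may call it
    wanted = {part.strip().split(":", 1)[1] for part in rule.split(" or ")}
    return bool(wanted & roles)


if __name__ == "__main__":
    print(is_allowed("publicize_image", {"member"}))
```

Loosening `publicize_image` in this model corresponds to editing the service's policy file so that ordinary users can upload public images.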

    [root@controller openstack-dashboard]
    total 432
    -rw-r----- 1 root apache  77821 Mar 18 15:47 cinder_policy.yaml
    drwxr-xr-x 2 root root     4096 May 16 16:38 default_policies
    -rw-r----- 1 root apache  21507 Mar 18 15:47 glance_policy.yaml
    -rw-r----- 1 root apache  95071 Mar 18 15:47 keystone_policy.yaml
    -rw-r----- 1 root apache  12009 May 16 16:38 local_settings
    -rw-r----- 1 root apache 111993 Mar 18 15:47 neutron_policy.yaml
    drwxr-xr-x 2 root root     4096 May 16 16:38 nova_policy.d
    -rw-r----- 1 root apache 104596 Mar 18 15:47 nova_policy.yaml

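A role by itself has no meaning; it has to be granted.

# glance

Write operations go through glance-registry; read operations can query the database directly.

Using swift as the glance backend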

    default_store = swift
    stores = file,http,swift
    swift_store_auth_version = 2
    swift_store_auth_address = <None>   # keystone address + port + version
    swift_store_user = edu:user1   # the user needs permission to upload to swift
    swift_store_key = redhat
    swift_store_container = glance
    swift_store_create_container_on_put = True
    swift_store_large_object_size = 5120

    swift list
    swift list hahah
    swift download
    swift upload
    swift post

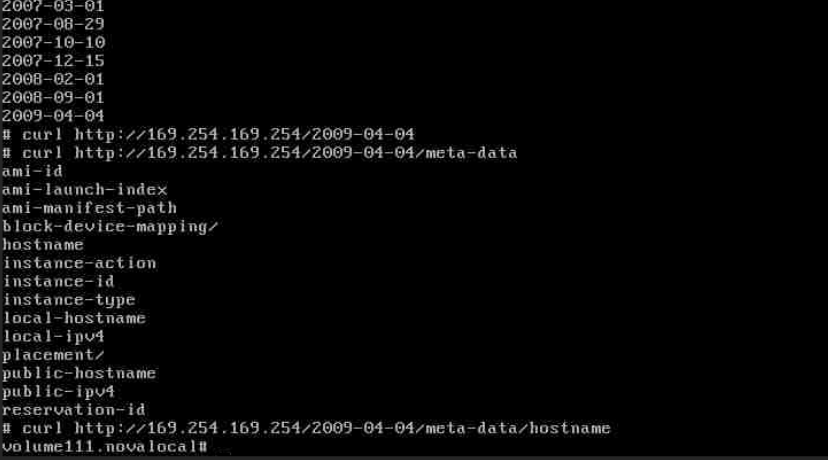

# Building an image

    # Install KVM
    # Install the required virtual machine in KVM
    # Create the virtual machine disk
    qemu-img create -f qcow2 /root/qq.qcow2 10G -o preallocation=metadata

    # Do a minimal installation

    # Install cloud-init
    yum install https://archives.fedoraproject.org/pub/epel/8/Everything/x86_64/Packages/e/epel-release-8-19.el8.noarch.rpm
    yum install cloud-init -y
    systemctl enable cloud-init-local.service cloud-init.service cloud-config.service cloud-final.service

    vim /etc/sysconfig/network-scripts/ifcfg-ens160
    # Set the address method to dhcp
    # Remove the uuid

    vim /etc/cloud/cloud.cfg
    # https://support.huaweicloud.com/intl/zh-cn/bestpractice-ims/ims_bp_0024.html

    # Shut down the running instance

    # Compress the virtual machine image; run this on the host
    qemu-img convert -c -O qcow2 /root/qq.qcow2 /tmp/ww.qcow2

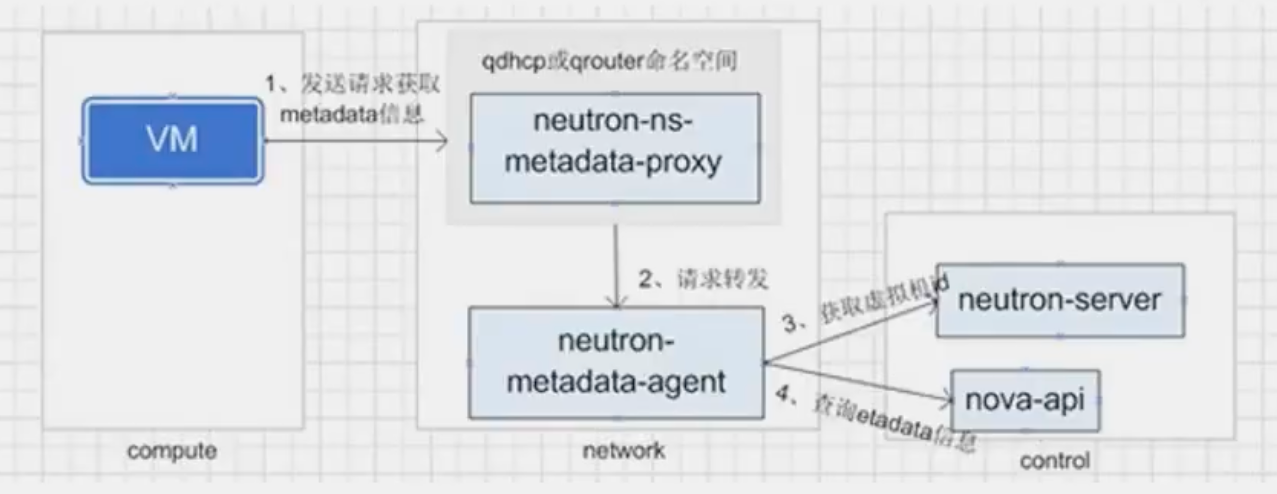

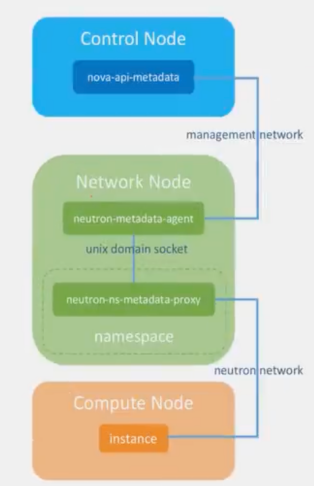

Injection

systemctl status neutron-metadata-agent.service

https://blog.51cto.com/lyanhong/1924271

https://blog.csdn.net/cuibin1991/article/details/124140346

http://cloud.centos.org/centos/7/images/

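# Upgrade: deploying the [Dalmatian] release of openstack

Notes:

Most steps can simply follow the official guide, but configuring neutron as the official guide describes runs into problems. Here neutron is configured the way it was for the U release, and verification passes.

Check whether the keystone port configured in nova.conf is 80 or 5000, and whether the protocol is http or https. A mismatch makes login fail or prevents instances from being provisioned.

https://blog.csdn.net/qq_41158292/article/details/135750315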

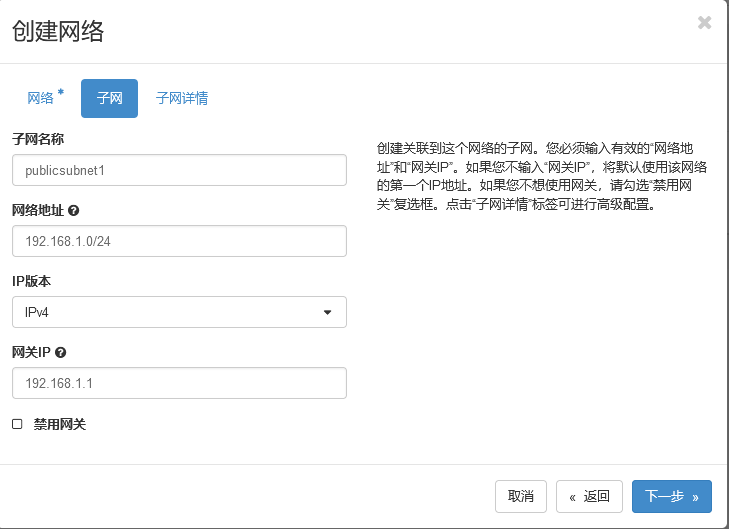

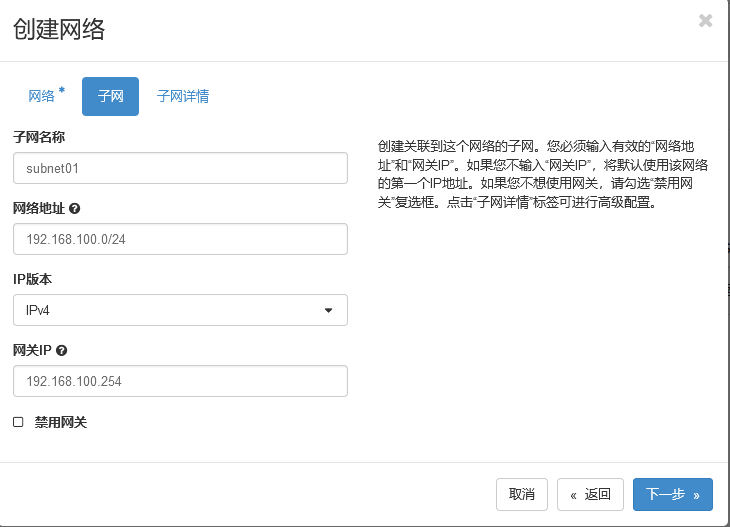

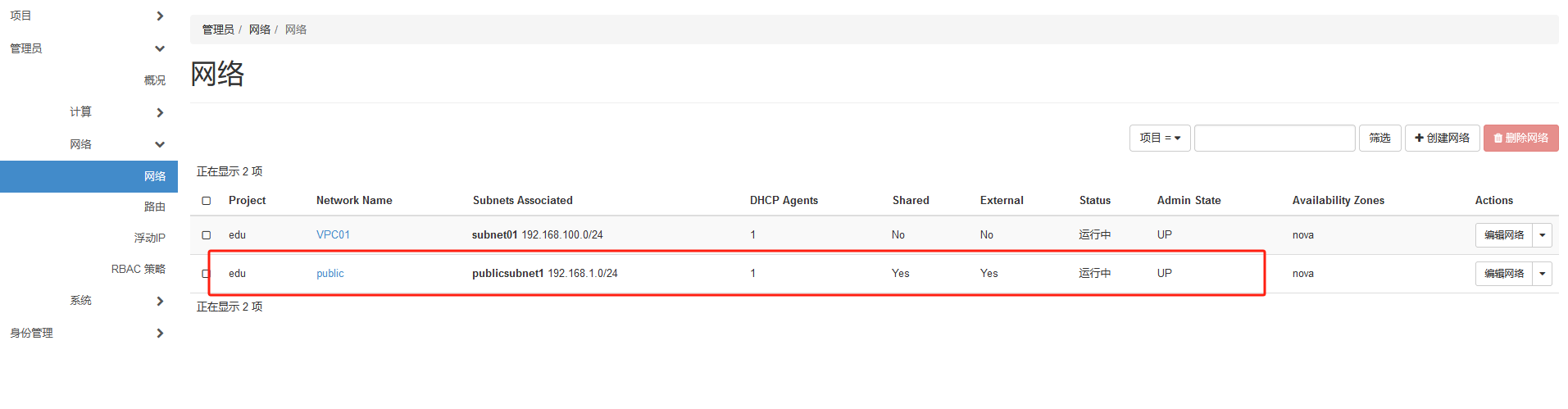

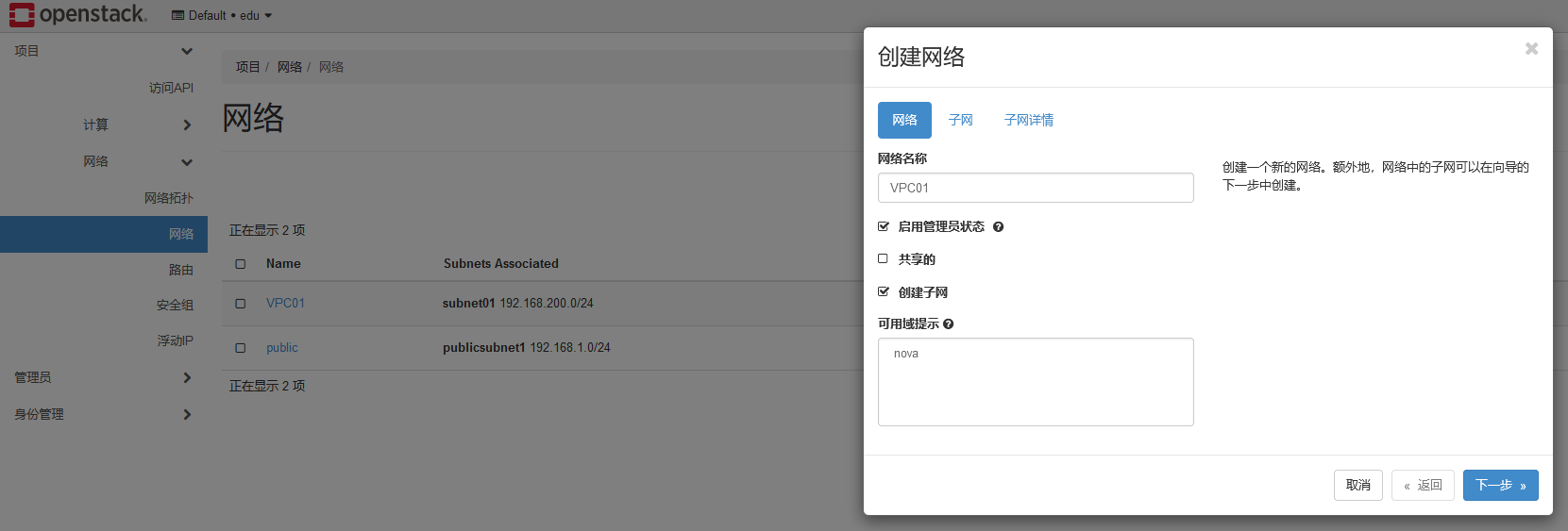

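# Creating a network

1. Create the tenant edu, containing the user user2

2. In the admin project, under the administrator view, create the network public. It is a shared, external network with DHCP disabled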

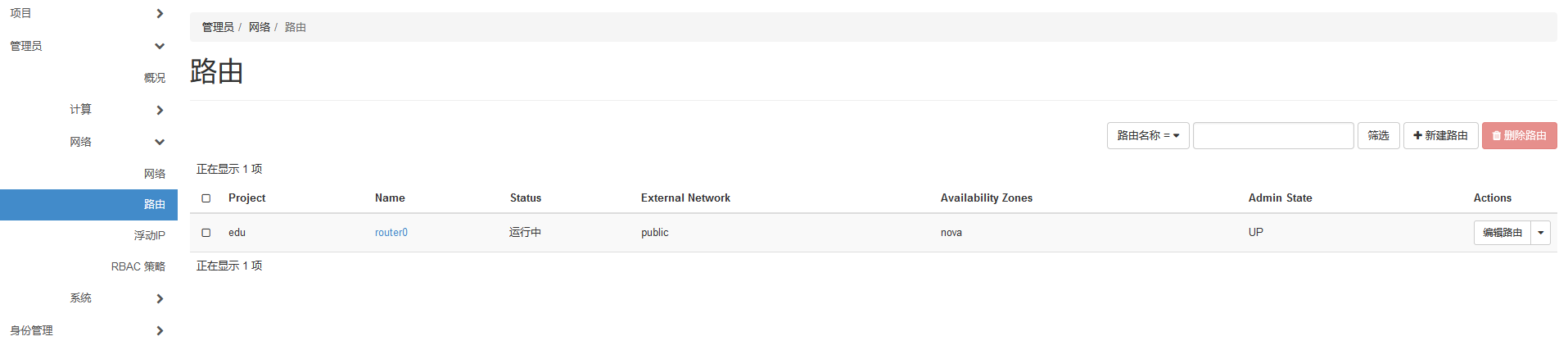

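3. In the admin project, under the administrator view, create a router whose project is edu

4. Switch to user2 and, under the project view, create the network vpc01. It is not shared, the subnet can be anything, and DHCP is enabled

5. As user2, under the project view, add an interface to the router

6. The final network topology is shown below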

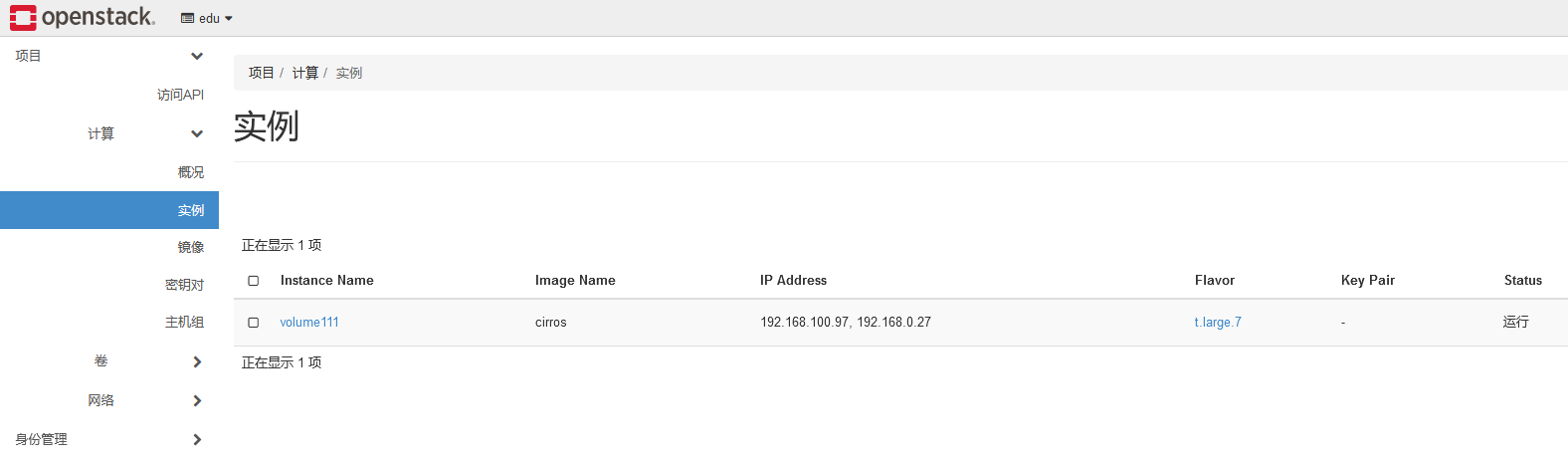

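# nova

Virtual machine lifecycle management

Lifecycle management for other compute resources

Once an instance has been provisioned, the image can be deleted, because the system disk is a copy of the image

In openstack an instance snapshot is stored as an image. It can be used directly as an instance image, but an instance cannot be rolled back to it directly

A volume snapshot is used to create an identical volume, which can be attached to an instance. The snapshot cannot be restored in place. The new volume can also be used as a boot disk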

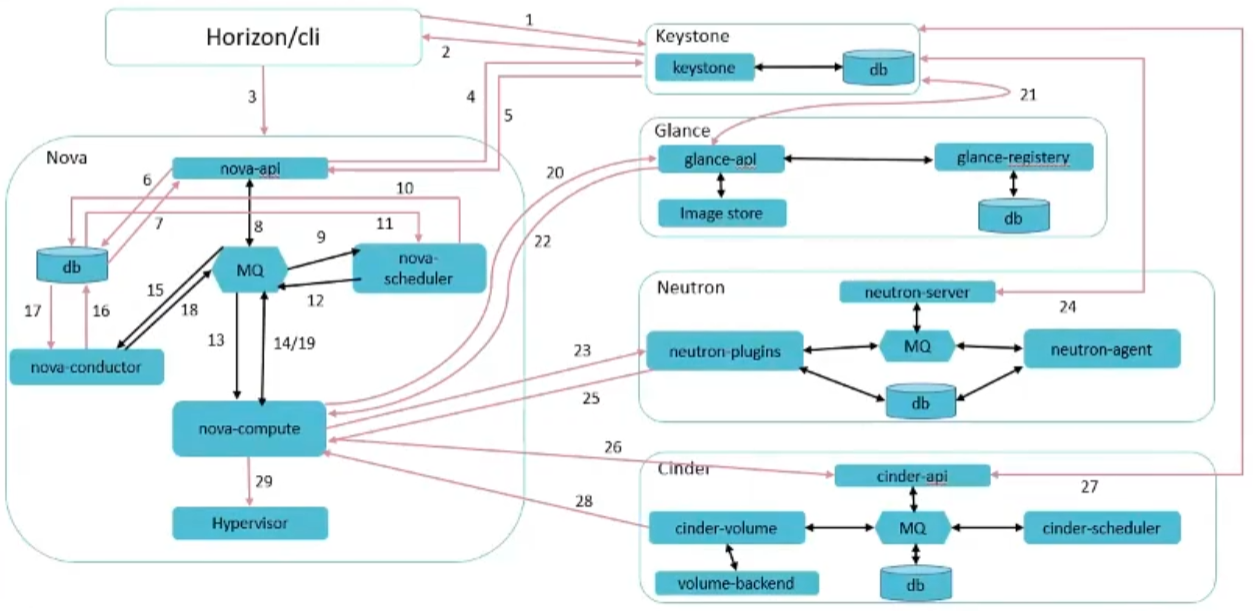

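Nova depends on the Keystone identity service

When a compute instance is created through Nova, Nova calls the image service provided by Glance

When a compute instance created through Nova starts, the virtual or physical network it connects to is handled by Neutron

The last service no longer exists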

    [root@controller ~]# openstack compute service list
    +----+----------------+------------+----------+---------+-------+----------------------------+
    | ID | Binary         | Host       | Zone     | Status  | State | Updated At                 |
    +----+----------------+------------+----------+---------+-------+----------------------------+
    |  2 | nova-scheduler | controller | internal | enabled | up    | 2024-04-22T13:36:31.000000 |
    |  6 | nova-conductor | controller | internal | enabled | up    | 2024-04-22T13:36:37.000000 |
    | 10 | nova-compute   | compute01  | nova     | enabled | up    | 2024-04-22T13:36:39.000000 |
    | 11 | nova-compute   | compute02  | nova     | enabled | up    | 2024-04-22T13:36:40.000000 |
    +----+----------------+------------+----------+---------+-------+----------------------------+

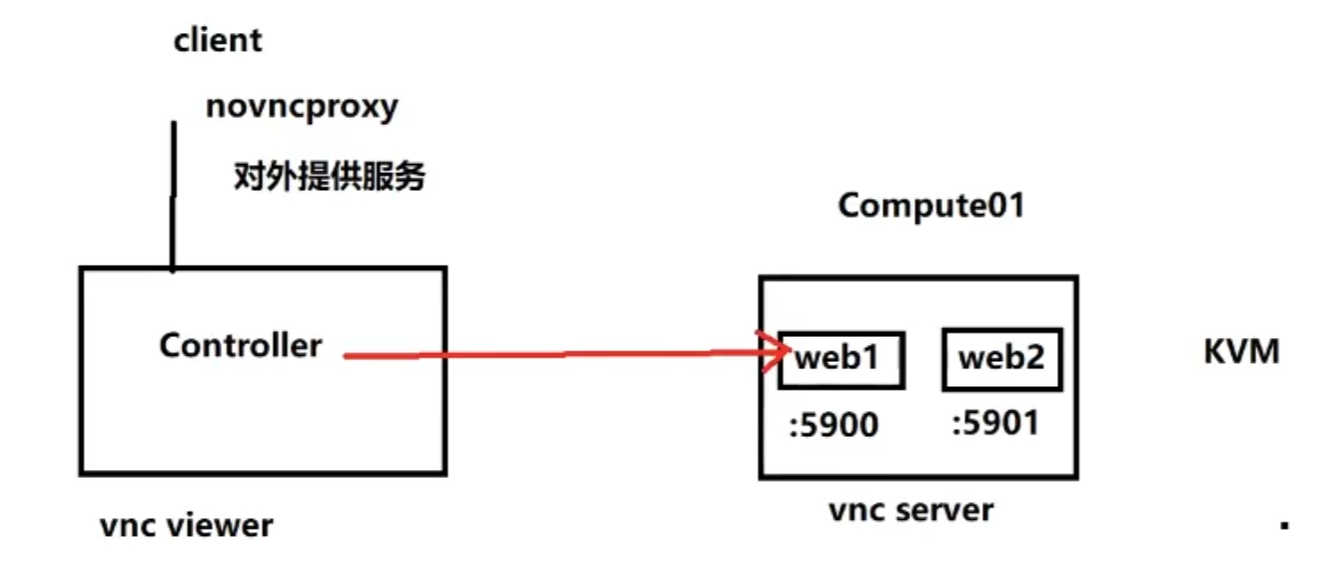

    [root@controller ~]# systemctl status openstack-nova-novncproxy.service
    ● openstack-nova-novncproxy.service - OpenStack Nova NoVNC Proxy Server
       Loaded: loaded (/usr/lib/systemd/system/openstack-nova-novncproxy.service; enabled; vendor preset: disabled)
       Active: active (running) since Mon 2024-04-08 22:13:09 CST; 1 weeks 6 days ago

    # The controller does not need a VNC client installed; the noVNC client runs in the browser.
    # Connect through http://controller:6080
    tcp        0      0 0.0.0.0:6080            0.0.0.0:*               LISTEN      20389/python3

    # novncproxy reaches port 5900 on the compute node to provide VNC access
    tcp        0      0 0.0.0.0:5900            0.0.0.0:*               LISTEN      4881/qemu-kvm

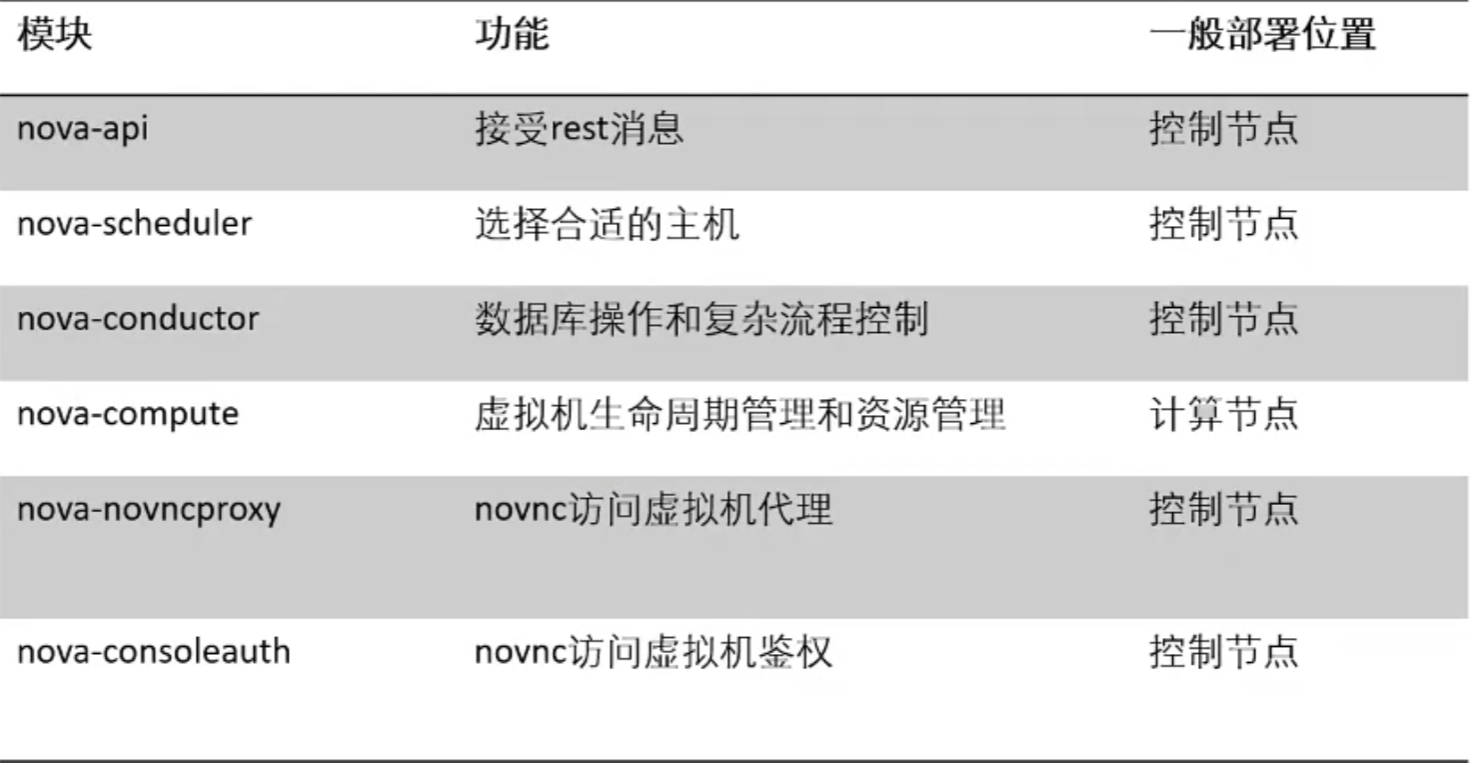

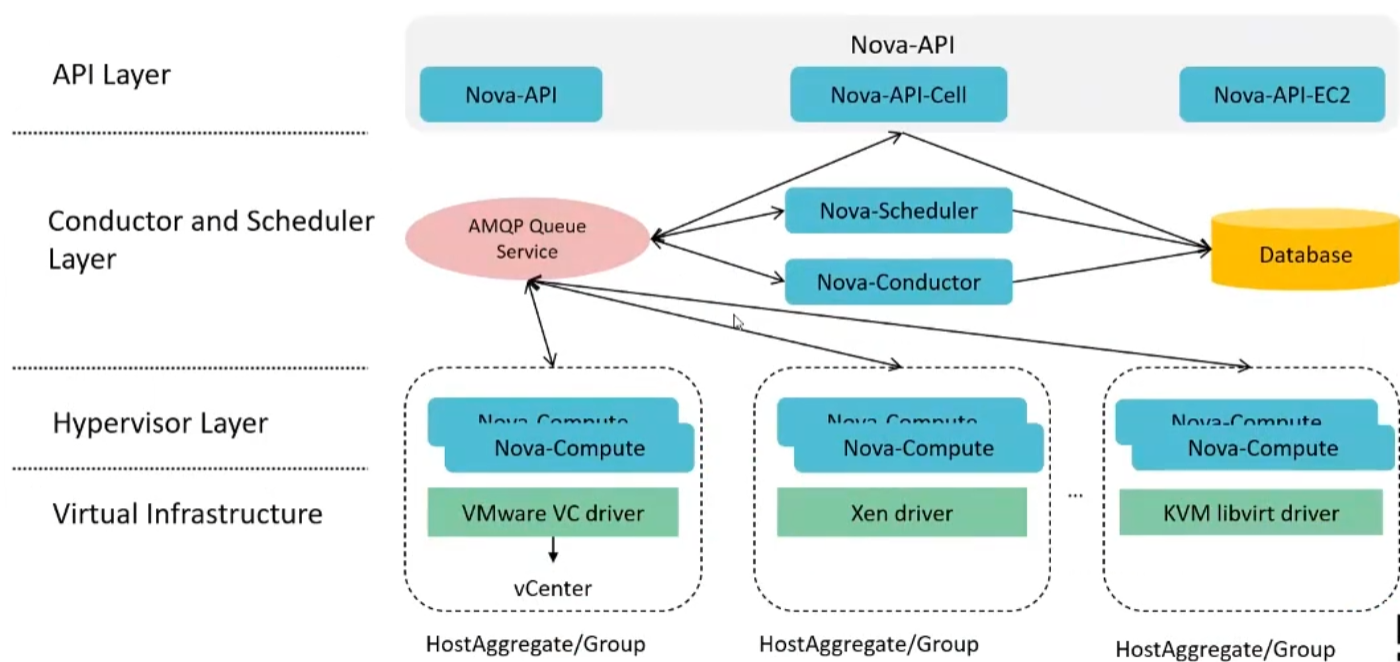

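nova-api

Handles the REST interface exposed to the outside

Validates incoming parameters and enforces constraints

Checks and reserves quota for the requested resources

Creates, updates, deletes, and queries resources

The entry point of the virtual machine lifecycle

Can be deployed with horizontal scaling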

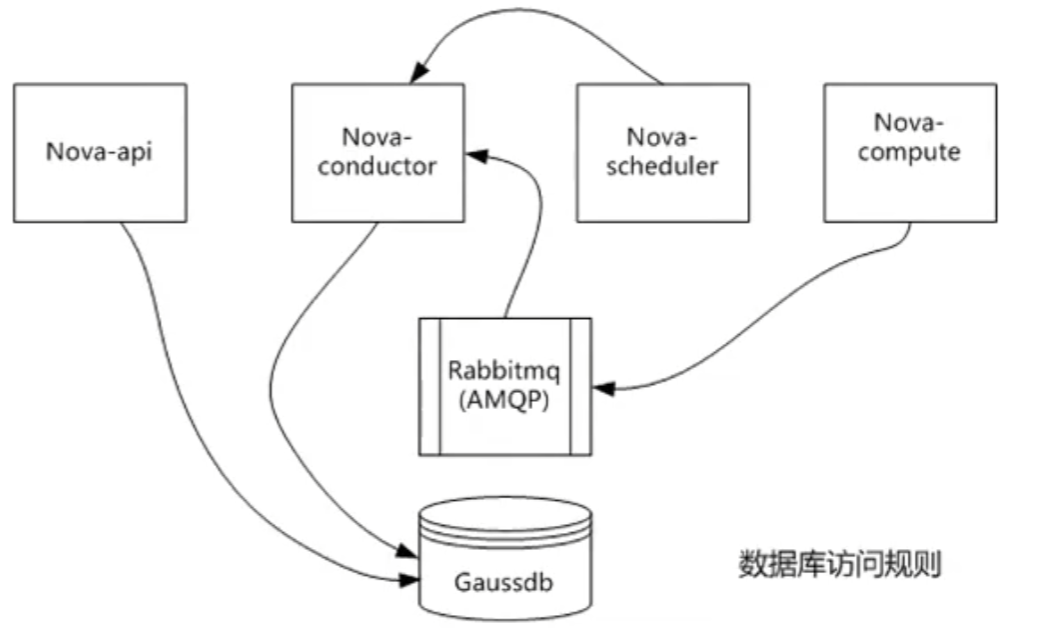

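nova-conductor

Introduced in the G (Grizzly) release

Database operations. Decouples other components (nova-compute) from direct database access.

Controls complex Nova workflows such as create, cold migration, live migration, resize, and rebuild.

A dependency of other components. For example, nova-compute can only start successfully after nova-conductor has started successfully.

Periodically writes the heartbeats of other components.

Can be scaled horizontally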

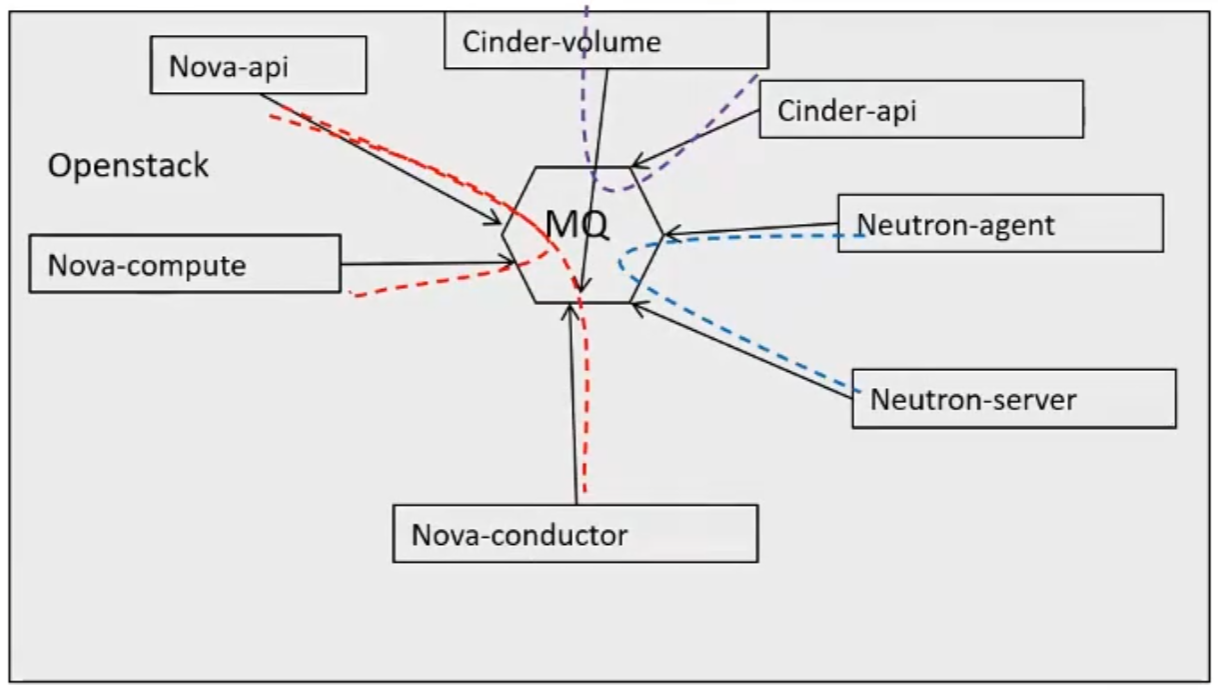

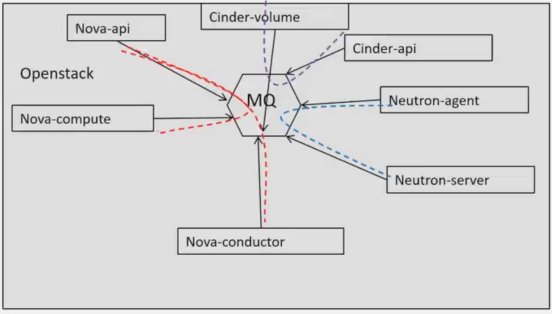

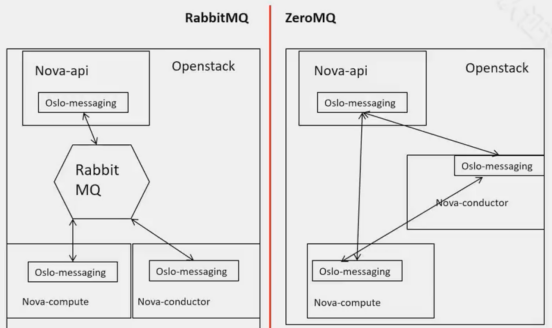

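All messages between components inside the service are forwarded through the MQ, including control, query, and monitoring metrics.

Decentralized architecture

No component keeps local persistent state

Can be scaled horizontally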

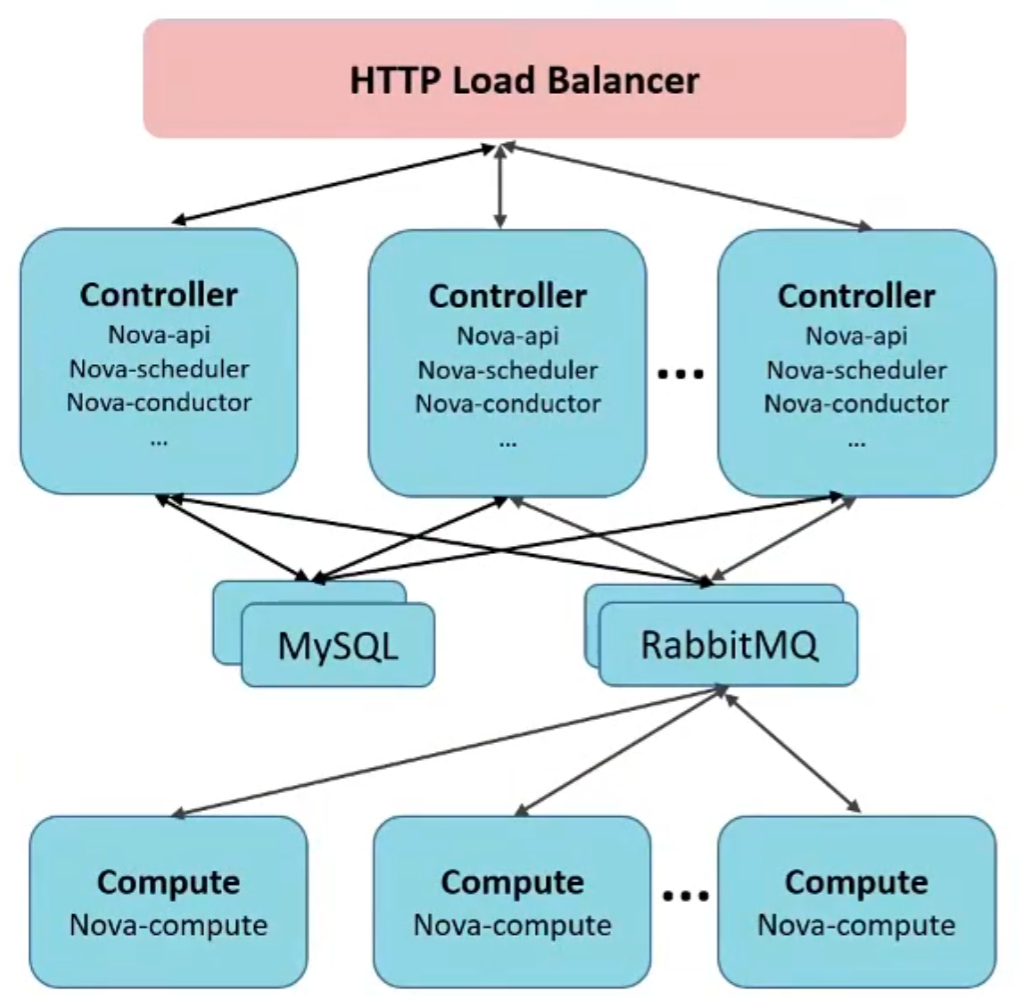

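nova-api, nova-scheduler, and nova-conductor are usually deployed together on the controller node

Deploying several controller nodes provides HA and load balancing

Adding controller and compute nodes is a simple way to expand the system

nova-api-Cell: cell-based management, suited to managing Nova at large scale

One AZ can contain several host aggregates (HA)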

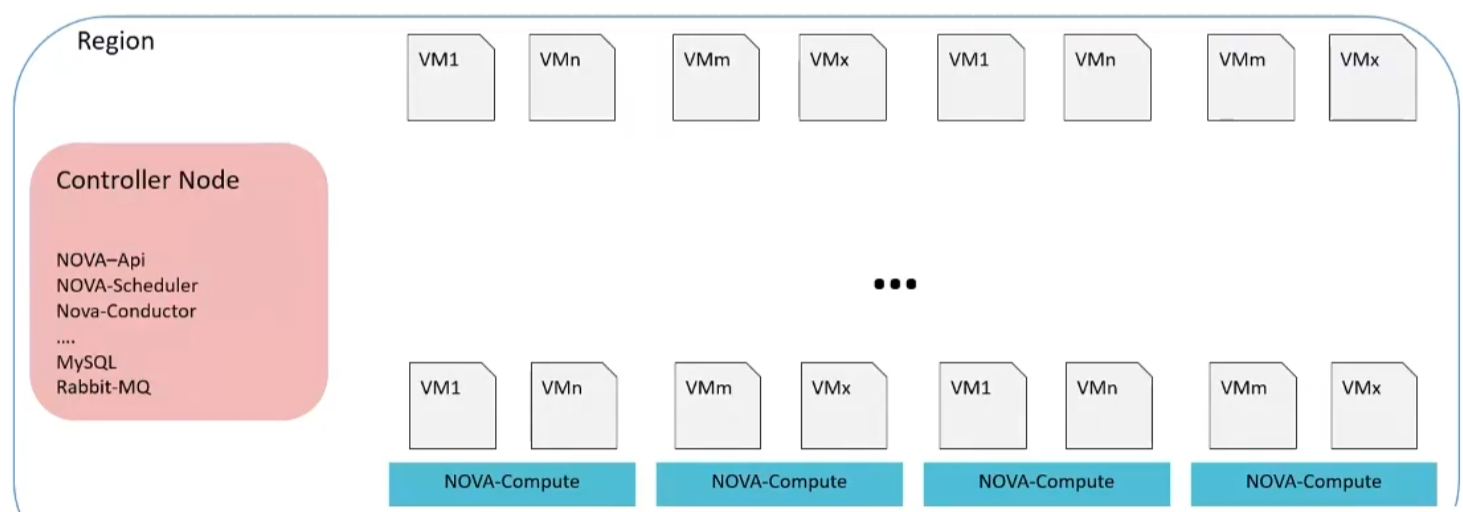

region: a region, representing one data center

A region can contain several AZs, which improves resource availability

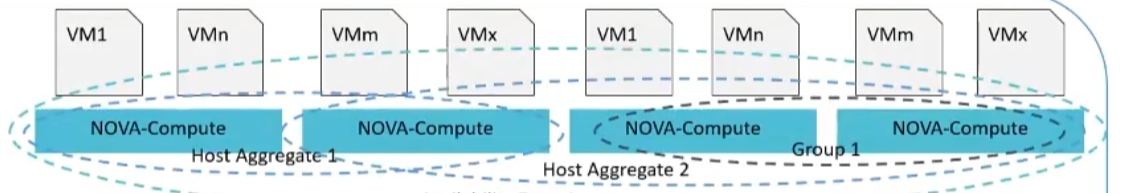

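An AZ can contain several host aggregates. Hosts with the same configuration are usually placed in one host aggregate, which makes live migration easier

A host aggregate can contain several server groups

Server groups can be given affinity rules

Creating a host aggregate

Host aggregates can only be created as admin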

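What Nova-Scheduler does

Selects which physical host a virtual machine instance is placed on

Placement has two main steps: filtering and weighing

Filters select the compute nodes that satisfy the request

Weights select the best node among them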
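The two-step filter-and-weigh idea can be sketched in a few lines. This is a simplified model and not Nova's scheduler code. The `Host` fields, the filter and weigher functions, and the host `compute03` are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    free_ram_mb: int
    free_vcpus: int


def ram_filter(host: Host, flavor: dict) -> bool:
    return host.free_ram_mb >= flavor["ram_mb"]


def vcpu_filter(host: Host, flavor: dict) -> bool:
    return host.free_vcpus >= flavor["vcpus"]


def ram_weigher(host: Host) -> float:
    # More free RAM gives a higher weight, which spreads instances out.
    return float(host.free_ram_mb)


def schedule(hosts, flavor, filters=(ram_filter, vcpu_filter),
             weigher=ram_weigher):
    """Drop hosts that fail any filter, then pick the highest weight."""
    candidates = [h for h in hosts if all(f(h, flavor) for f in filters)]
    if not candidates:
        return None
    return max(candidates, key=weigher)


if __name__ == "__main__":
    hosts = [Host("compute01", 4096, 2), Host("compute02", 8192, 4)]
    print(schedule(hosts, {"ram_mb": 2048, "vcpus": 2}))
```

Filtering is a yes/no decision per host, while weighing only orders the hosts that survived, so a host with the most free RAM can still lose if it fails a filter.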

    nova list
    +--------------------------------------+-----------+--------+------------+-------------+-------------------------------------+
    | ID                                   | Name      | Status | Task State | Power State | Networks                            |
    +--------------------------------------+-----------+--------+------------+-------------+-------------------------------------+
    | 93da63d8-4f0b-4818-a01b-50c7af88c6dd | volume111 | ACTIVE | -          | Running     | VPC01=192.168.200.37, 192.168.1.197 |
    +--------------------------------------+-----------+--------+------------+-------------+-------------------------------------+

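vm_state: the virtual machine state recorded in the database

task_state: the virtual machine's current task state, usually a transitional state or None

power_state: the virtual machine's real state as obtained from the hypervisor

Status: the virtual machine state presented externally

Relationship between the states

Internally the system records only vm_state, task_state, and power_state

status is generated from vm_state and task_state together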
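A minimal sketch of how an external status could be derived from the two internal fields follows. The table is a small illustrative subset written for this example, not a copy of Nova's full mapping, and `server_status` is a hypothetical helper.

```python
# vm_state -> {task_state: status}; the None key is the default
# used when no specific task_state entry exists.
STATE_MAP = {
    "active": {None: "ACTIVE", "rebooting": "REBOOT",
               "resize_prep": "RESIZE"},
    "building": {None: "BUILD"},
    "stopped": {None: "SHUTOFF"},
    "error": {None: "ERROR"},
}


def server_status(vm_state: str, task_state=None) -> str:
    """Combine vm_state and task_state into the externally shown status."""
    per_vm = STATE_MAP.get(vm_state)
    if per_vm is None:
        return "UNKNOWN"
    return per_vm.get(task_state, per_vm[None])


if __name__ == "__main__":
    print(server_status("active", "rebooting"))
```

power_state does not appear in the lookup, which matches the note that status is generated from vm_state and task_state only.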

    openstack server list --all
    +--------------------------------------+-----------+--------+-------------------------------------+---------+-----------+
    | ID                                   | Name      | Status | Networks                            | Image   | Flavor    |
    +--------------------------------------+-----------+--------+-------------------------------------+---------+-----------+
    | 93da63d8-4f0b-4818-a01b-50c7af88c6dd | volume111 | ACTIVE | VPC01=192.168.200.37, 192.168.1.197 | centos7 | t.large.7 |
    +--------------------------------------+-----------+--------+-------------------------------------+---------+-----------+

    openstack server show 93da63d8-4f0b-4818-a01b-50c7af88c6dd
    +-------------------------------------+----------------------------------------------------------+
    | Field                               | Value                                                    |
    +-------------------------------------+----------------------------------------------------------+
    | OS-DCF:diskConfig                   | AUTO                                                     |
    | OS-EXT-AZ:availability_zone         | nova                                                     |
    | OS-EXT-SRV-ATTR:host                | compute02                                                |
    | OS-EXT-SRV-ATTR:hypervisor_hostname | compute02                                                |

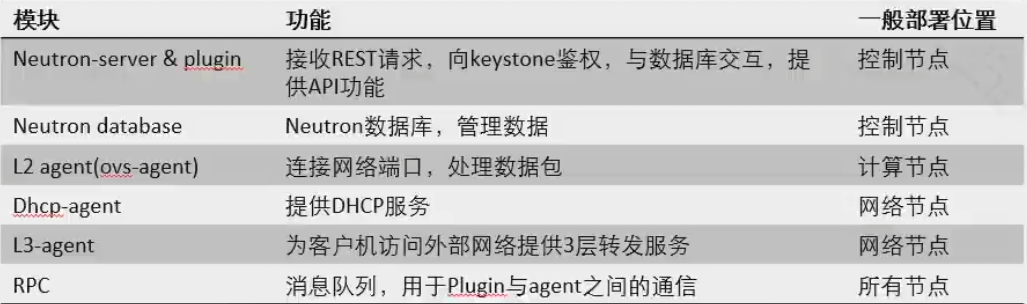

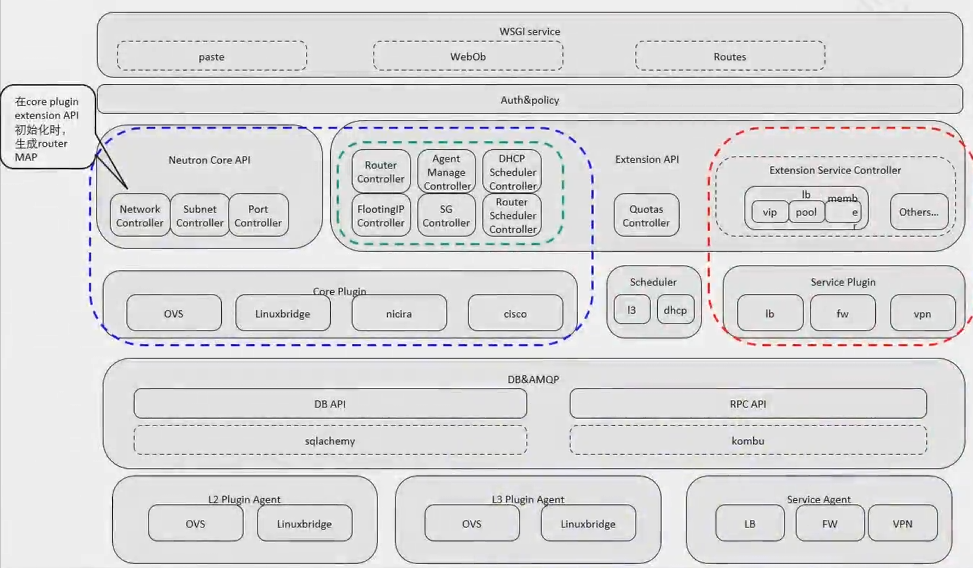

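# neutron

neutron-server: split into the core API and the extension API, which implement different functions. For example, extensions implement ELB, FW, and VPN, while core implements basic networking

plugin: similar to a driver; it can be thought of as a plug-in

agent: implements the functions, for example L2 and L3

neutron consists of neutron-server, plugins, and agents

A node that runs the L3 agent is a network node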

    [root@controller ~]# neutron agent-list
    neutron CLI is deprecated and will be removed in the future. Use openstack CLI instead.
    +--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+
    | id                                   | agent_type         | host       | availability_zone | alive | admin_state_up | binary                    |
    +--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+
    | 2a77cf87-be5f-40b6-86d1-8b2b60018073 | Linux bridge agent | controller |                   | :-)   | True           | neutron-linuxbridge-agent |
    | 448ef5c9-84ba-4a19-936c-bceb21854113 | Linux bridge agent | compute01  |                   | :-)   | True           | neutron-linuxbridge-agent |
    | 5971fc42-dfb5-4e07-9a1a-597f64ecca14 | DHCP agent         | controller | nova              | :-)   | True           | neutron-dhcp-agent        |
    | 9bc53f0e-c66f-439d-8767-23247b4d6aaf | Metadata agent     | controller |                   | :-)   | True           | neutron-metadata-agent    |
    | eafa283c-0bb8-46f1-9fc1-6da948bb4c11 | Linux bridge agent | compute02  |                   | :-)   | True           | neutron-linuxbridge-agent |
    | fd8e4b80-f243-4e0b-9672-814e14f9f10e | L3 agent           | controller | nova              | :-)   | True           | neutron-l3-agent          |
    +--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+

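neutron-server is split into the core API and the extension API

core-api

It can be seen as the minimum set of plugin functionality, that is, what every plugin must provide: query, create, delete, and update operations on networks, subnets, and ports.

extension api

Extensions are usually implemented for a specific plugin, so tenants can use features unique to that plugin, for example access control (ACL) and QoS.

plugin

Stores the current logical network configuration, determines and stores the mapping between logical and physical networks (for example, choosing a VLAN for a logical network), and communicates with one or more switches to realize that mapping (usually through a plugin agent on the host, or by logging in to the switch remotely to configure it).

ml2 is used to interface with different plugins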

    [ml2]
    type_drivers = flat,vlan,vxlan
    tenant_network_types = vxlan
    mechanism_drivers = linuxbridge,l2population
    extension_drivers = port_security

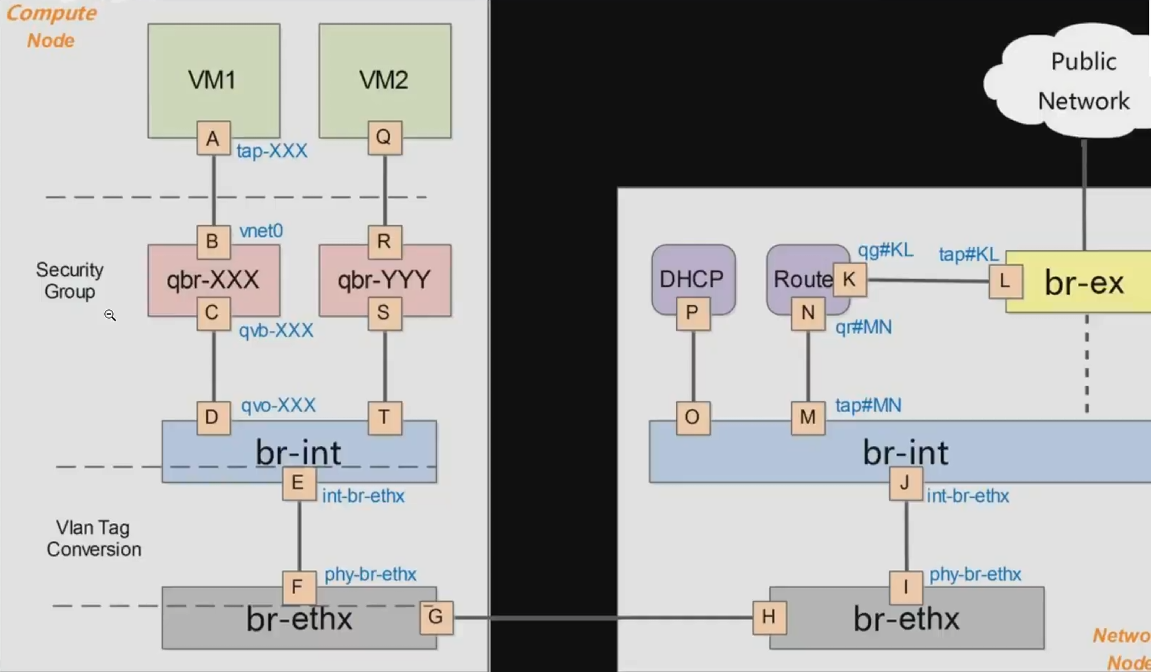

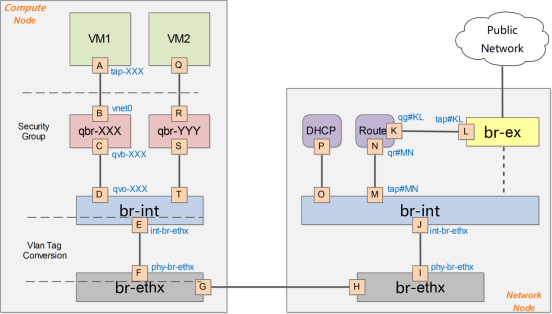

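A host has only one br-int but can have several br-ethX bridges

The Internet can only be reached through br-ex

When vm1 and vm2 communicate within the same subnet, traffic only needs to pass through br-int

99.2 is the DHCP address

An overlay network can be carried over a flat network

A network you create must be of a type that the configuration file allows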
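The constraint on network types can be checked mechanically against the `[ml2]` section. The sketch below parses a copy of that section with `configparser`. The helper names `allowed_types` and `check_network_type` are hypothetical, and neutron performs its own validation internally.

```python
import configparser

# A copy of the [ml2] options used in these notes.
ML2_CONF = """
[ml2]
type_drivers = flat,vlan,vxlan
tenant_network_types = vxlan
"""


def allowed_types(conf_text: str) -> list:
    """Return the network types listed in type_drivers."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    raw = parser.get("ml2", "type_drivers")
    return [t.strip() for t in raw.split(",") if t.strip()]


def check_network_type(network_type: str, conf_text: str = ML2_CONF) -> bool:
    """True if a network of this type may be created."""
    return network_type in allowed_types(conf_text)


if __name__ == "__main__":
    print(check_network_type("gre"))
```

With the sample configuration, a `gre` network is rejected because `gre` is absent from `type_drivers`.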

    brctl show
    bridge name         bridge id           STP enabled   interfaces
    brq0298a5c0-e0      8000.4e18bd44ecd4   no            tap46a10425-89
                                                          tap90fda3ce-83
                                                          vxlan-790
    brq5fb8b5b5-2e      8000.000c2903cf83   no            ens224
                                                          tap3459ccb0-de
    virbr0              8000.525400f28462   yes

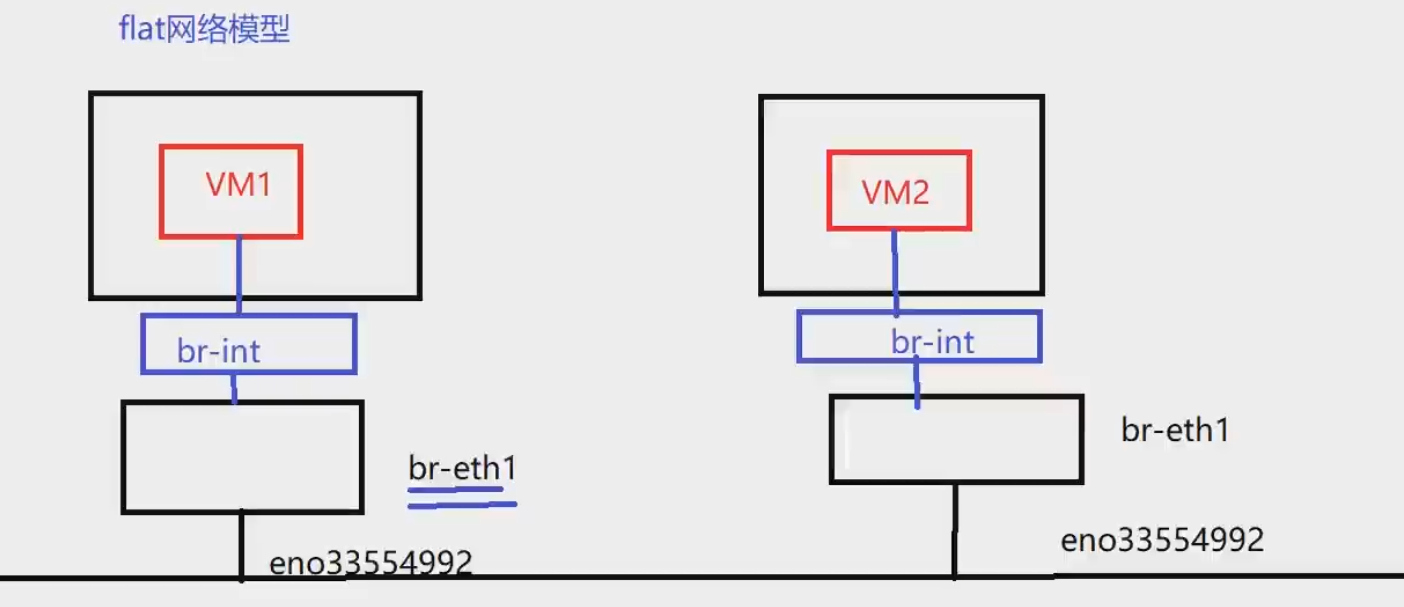

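Network types

local: local network

flat: a network without VLANs

vlan: a network with VLAN tags

qbr

A linux bridge used to implement security groups. Every VM has its own qbr

br-ex

The bridge that connects to the external network. Its name is set in the configuration file and can be changed

br-int

The integration bridge. The virtual NICs of all instances and other virtual network devices connect to it. It is a virtual switch with no uplink

br-tun

The tunnel bridge. VxLAN and GRE networks, which are based on tunnelling, use this bridge to communicate.

br-eth1

A virtual switch with an uplink, used for communication between nodes, including between instances

openstack network types: flat, vlan, vxlan, local

local: local network. Internal traffic goes through br-int and cannot leave the host

flat: a network without VLAN tags

vlan :

tenant_network_types determines the network type; the matching settings are in the ml2_type_vlan section below

With centralized routing, traffic between different VPCs has to go through the network node. With distributed routing, the L3 agent is deployed on the compute nodes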

    vim /etc/neutron/plugins/ml2/ml2_conf.ini
    [ml2]
    type_drivers = flat,vlan,vxlan
    tenant_network_types = vlan
    mechanism_drivers = openvswitch,l2population
    extension_drivers = port_security

    [ml2_type_flat]
    # flat_networks = provider
    #
    # From neutron.ml2
    #

    # List of physical_network names with which flat networks can be created. Use
    # default '*' to allow flat networks with arbitrary physical_network names. Use
    # an empty list to disable flat networks. (list value)
    #flat_networks = *

    [ml2_type_geneve]

    #
    # From neutron.ml2
    #

    # Comma-separated list of <vni_min>:<vni_max> tuples enumerating ranges of
    # Geneve VNI IDs that are available for tenant network allocation. Note OVN
    # does not use the actual values. (list value)
    #vni_ranges =

    # The maximum allowed Geneve encapsulation header size (in bytes). Geneve
    # header is extensible, this value is used to calculate the maximum MTU for
    # Geneve-based networks. The default is 30, which is the size of the Geneve
    # header without any additional option headers. Note the default is not enough
    # for OVN which requires at least 38. (integer value)
    #max_header_size = 30

    [ml2_type_gre]

    #
    # From neutron.ml2
    #

    # Comma-separated list of <tun_min>:<tun_max> tuples enumerating ranges of GRE
    # tunnel IDs that are available for tenant network allocation (list value)
    #tunnel_id_ranges =

    [ml2_type_vlan]
    network_vlan_ranges = huawei:10:1000
    #
    # From neutron.ml2
    #

    # List of <physical_network>:<vlan_min>:<vlan_max> or <physical_network>
    # specifying physical_network names usable for VLAN provider and tenant
    # networks, as well as ranges of VLAN tags on each available for allocation to
    # tenant networks. If no range is defined, the whole valid VLAN ID set [1,
    # 4094] will be assigned. (list value)
    #network_vlan_ranges =

    [ml2_type_vxlan]
    # vni_ranges = 1:1000

    vim /etc/neutron/plugins/ml2/openvswitch_agent.ini
    [ovs]
    bridge_mappings = huawei:eth1
    local_ip = OVERLAY_INTERFACE_IP_ADDRESS
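The `network_vlan_ranges` and `bridge_mappings` values are linked by the physical network name (`huawei` in these notes). The sketch below parses both strings and looks up where a VLAN would land. The three helper functions are hypothetical and only mirror the `name:min:max` and `name:bridge` formats shown above.

```python
def parse_vlan_ranges(value: str) -> dict:
    """'huawei:10:1000' -> {'huawei': [(10, 1000)]}"""
    ranges = {}
    for entry in (e.strip() for e in value.split(",")):
        if not entry:
            continue
        parts = entry.split(":")
        ranges.setdefault(parts[0], [])
        if len(parts) == 3:
            ranges[parts[0]].append((int(parts[1]), int(parts[2])))
    return ranges


def parse_bridge_mappings(value: str) -> dict:
    """'huawei:eth1' -> {'huawei': 'eth1'}"""
    return dict(e.strip().split(":", 1)
                for e in value.split(",") if e.strip())


def bridge_for_vlan(vlan_id, physnet, ranges, mappings):
    """Return the mapped name if vlan_id is inside the physnet's range."""
    if any(lo <= vlan_id <= hi for lo, hi in ranges.get(physnet, [])):
        return mappings.get(physnet)
    return None


if __name__ == "__main__":
    r = parse_vlan_ranges("huawei:10:1000")
    m = parse_bridge_mappings("huawei:eth1")
    print(bridge_for_vlan(100, "huawei", r, m))
```

If the physical network name in one file does not match the other, the lookup returns nothing, which is a common reason a VLAN network cannot be wired up on a node.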

OVS flow tables: the larger the priority value, the higher the priority
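The priority rule can be illustrated with a toy lookup: among all flows whose match fields agree with the packet, the one with the largest priority value wins. This is a simplified model and not how OVS is implemented. The `Flow` class and `lookup` function are assumptions, and the sample entries are modelled on typical table 0 rules of br-int.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    priority: int
    match: dict   # field name -> required value; empty matches everything
    action: str


def lookup(flows, packet: dict):
    """Return the action of the highest-priority flow matching the packet."""
    matching = [f for f in flows
                if all(packet.get(k) == v for k, v in f.match.items())]
    if not matching:
        return None
    return max(matching, key=lambda f: f.priority).action


TABLE0 = [
    Flow(65535, {"dl_vlan": 4095}, "drop"),
    Flow(2, {"in_port": "int-br-provider"}, "drop"),
    Flow(0, {}, "resubmit(,58)"),
]

if __name__ == "__main__":
    print(lookup(TABLE0, {"in_port": "tapbb45b827-28"}))
```

The priority 0 entry with an empty match acts as the default: it applies only when no higher-priority flow matches.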

    ovs-ofctl dump-flows br-int
     cookie=0x10c394b632250c4c, duration=81941.780s, table=0, n_packets=0, n_bytes=0, priority=65535,dl_vlan=4095 actions=drop
     cookie=0x10c394b632250c4c, duration=81941.769s, table=0, n_packets=0, n_bytes=0, priority=2,in_port="int-br-provider" actions=drop
     cookie=0x10c394b632250c4c, duration=81941.784s, table=0, n_packets=8, n_bytes=792, priority=0 actions=resubmit(,58)
     cookie=0x10c394b632250c4c, duration=81941.785s, table=23, n_packets=0, n_bytes=0, priority=0 actions=drop
     cookie=0x10c394b632250c4c, duration=81941.781s, table=24, n_packets=0, n_bytes=0, priority=0 actions=drop
     cookie=0x10c394b632250c4c, duration=81941.779s, table=30, n_packets=0, n_bytes=0, priority=0 actions=resubmit(,58)
     cookie=0x10c394b632250c4c, duration=81941.779s, table=31, n_packets=0, n_bytes=0, priority=0 actions=resubmit(,58)
     cookie=0x10c394b632250c4c, duration=81941.783s, table=58, n_packets=8, n_bytes=792, priority=0 actions=resubmit(,60)
     cookie=0x10c394b632250c4c, duration=3522.763s, table=60, n_packets=4, n_bytes=392, priority=100,in_port="tapbb45b827-28" actions=load:0x3->NXM_NX_REG5[],load:0x1->NXM_NX_REG6[],resubmit(,73)

    ovs-ofctl show br-int
    OFPT_FEATURES_REPLY (xid=0x2): dpid:00006aad96360649
    n_tables:254, n_buffers:0
    capabilities: FLOW_STATS TABLE_STATS PORT_STATS QUEUE_STATS ARP_MATCH_IP
    actions: output enqueue set_vlan_vid set_vlan_pcp strip_vlan mod_dl_src mod_dl_dst mod_nw_src mod_nw_dst mod_nw_tos mod_tp_src mod_tp_dst
     1(int-br-provider): addr:6e:ee:f9:c2:36:2f
         config:     0
         state:      0
         speed: 0 Mbps now, 0 Mbps max
     2(patch-tun): addr:9a:cd:9f:d6:ad:fb
         config:     0
         state:      0
         speed: 0 Mbps now, 0 Mbps max
     3(tapbb45b827-28): addr:fa:16:3e:fa:16:a6
         config:     0
         state:      0
         speed: 0 Mbps now, 0 Mbps max
     LOCAL(br-int): addr:6a:ad:96:36:06:49
         config:     PORT_DOWN
         state:      LINK_DOWN
         speed: 0 Mbps now, 0 Mbps max
    OFPT_GET_CONFIG_REPLY (xid=0x4): frags=normal miss_send_len=0

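In VLAN mode, VLAN tag translation needs br-int and br-ethX to cooperate. br-int translates packets arriving from int-br-ethX (carrying the external VLAN) to the internal VLAN, while br-ethX translates packets arriving from phy-br-ethX (carrying the internal VLAN) to the external VLAN.

VMs on the same node and in the same VLAN only need the internal VLAN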
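The two-way tag translation can be modelled as a pair of lookup tables. This is a minimal sketch under the assumption that frames are plain dictionaries with a `vlan` key. The `VlanTranslator` class and the sample IDs (external 100, internal 1) are hypothetical.

```python
class VlanTranslator:
    """Keeps the external <-> internal VLAN mapping for one node."""

    def __init__(self):
        self._ext_to_int = {}
        self._int_to_ext = {}

    def add_mapping(self, external: int, internal: int) -> None:
        self._ext_to_int[external] = internal
        self._int_to_ext[internal] = external

    def br_int_from_physical(self, frame: dict):
        """br-int: a frame from int-br-ethX carries the external VLAN."""
        internal = self._ext_to_int.get(frame["vlan"])
        # Unmapped VLANs are dropped (None) in this sketch.
        return None if internal is None else {**frame, "vlan": internal}

    def br_eth_from_integration(self, frame: dict):
        """br-ethX: a frame from phy-br-ethX carries the internal VLAN."""
        external = self._int_to_ext.get(frame["vlan"])
        return None if external is None else {**frame, "vlan": external}


if __name__ == "__main__":
    t = VlanTranslator()
    t.add_mapping(external=100, internal=1)
    print(t.br_eth_from_integration({"vlan": 1, "payload": "x"}))
```

Traffic between two VMs on the same node never calls either method in this model, because it stays on br-int with the internal tag.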

In VMware the outer switch is vmnet, and vmnet does not pass VLAN-tagged frames. As a result DHCP cannot reach the other nodes and instances do not obtain an IP

vxlan :