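# Clusters and Storage

A cluster aims to:

- Improve performance
- Reduce cost
- Improve scalability
- Increase reliability

Cluster types:

- High-performance computing (HPC) cluster
- Load-balancing (LB) cluster: distributes requests evenly
- High-availability (HA) cluster: avoids single points of failure; nothing in the whole cluster may be a single point of failure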

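# LVS

# LVS cluster components

Front end: load-balancing layer

- Consists of one or more load schedulers (directors)

Middle: server-pool layer

- Consists of a group of servers that actually run the application service

Back end: shared-storage layer

- A storage area that provides shared storage space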

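# LVS terminology

Director Server: the scheduling server that distributes load to the Real Servers (for example nginx or LVS)

Real Server: the server that actually provides the application service

VIP: virtual IP address, the address published to users

RIP: real IP address, the address used on a cluster node

DIP: director IP address, the address the director uses to reach the node servers (for nginx, its own real IP)

CIP: client IP address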

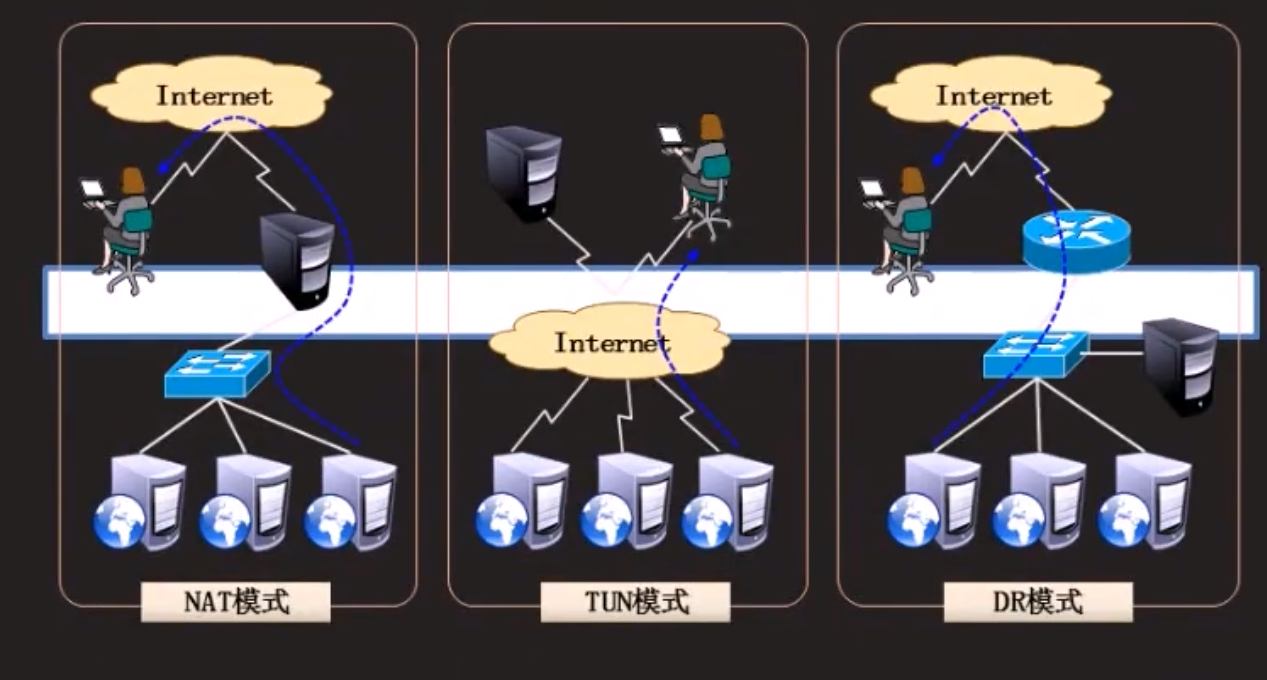

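# LVS working modes

LVS works by routing, that is, packet forwarding. Think of it as a router, except that an ordinary router has no load-balancing scheduler. The backend servers see the client's IP. LVS can also do NAT.

nginx works as a proxy: nginx itself connects to the backend server, so the backend sees nginx's IP.

The visible result is much the same, but the mechanisms are completely different.

LVS is more efficient because it runs in the kernel, but it only forwards packets, so it has fewer features than nginx and no built-in health checks.

NAT mode: both requests and replies pass through the director, so its bandwidth becomes the network bottleneck. Suitable for small and medium clusters.

DR mode (direct routing): replies do not go back through the director; the real server answers the user directly.

TUN mode: the director and the cluster are not on the same network. The user contacts the director and the cluster returns data to the user directly. A tunnel is required between the director and the cluster.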
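The difference between the modes can be sketched as what happens to a packet's addresses on its way to the real server. This is a toy model, not kernel code; the `Packet` class and the three helper functions are hypothetical names introduced only for illustration, and the addresses are made up.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str   # who the receiver believes sent the packet
    dst_ip: str   # address the packet is sent to
    dst_mac: str  # layer-2 next hop


def lvs_nat(pkt: Packet, rip: str, rip_mac: str) -> Packet:
    """NAT mode: the director rewrites the destination IP to the RIP."""
    return replace(pkt, dst_ip=rip, dst_mac=rip_mac)


def lvs_dr(pkt: Packet, rip_mac: str) -> Packet:
    """DR mode: only the destination MAC changes; the IPs stay CIP -> VIP."""
    return replace(pkt, dst_mac=rip_mac)


def reverse_proxy(pkt: Packet, proxy_ip: str, rip: str, rip_mac: str) -> Packet:
    """Proxy (nginx style): a new connection is opened from the proxy's own IP."""
    return Packet(src_ip=proxy_ip, dst_ip=rip, dst_mac=rip_mac)


request = Packet(src_ip="192.168.4.10", dst_ip="192.168.4.15", dst_mac="director")
print(lvs_nat(request, "192.168.2.100", "web1").src_ip)                        # client IP
print(lvs_dr(request, "web1").dst_ip)                                          # still the VIP
print(reverse_proxy(request, "192.168.4.5", "192.168.2.100", "web1").src_ip)   # proxy IP
```

In DR mode the real server receives a packet still addressed to the VIP, which is why it needs the VIP configured locally.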

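# LVS scheduling algorithms

- Round robin (rr): plain round robin ignores weights; setting one has no effect
- Weighted round robin (wrr)
- Least connection (lc)
- Weighted least connection (wlc)
- Source hashing (sh)
- Destination hashing (dh)
- Locality-based least connection (lblc)
- Locality-based least connection with replication (lblcr)
- Shortest expected delay (sed)
- Never queue (nq)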
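To make two of these algorithms concrete, here is a minimal Python sketch of weighted round robin and weighted least connection. It is a simplified model and not the kernel's IPVS code: the function names and the server names are assumptions, and the least-connection variant only compares active connections divided by weight.

```python
from functools import reduce
from itertools import islice
from math import gcd


def weighted_round_robin(weights):
    """Yield server names forever, each in proportion to its weight.

    weights: dict mapping server name -> positive integer weight.
    """
    names = list(weights)
    max_w = max(weights.values())
    if max_w <= 0:
        raise ValueError("at least one weight must be positive")
    step = reduce(gcd, weights.values())
    i, current = -1, 0
    while True:
        i = (i + 1) % len(names)
        if i == 0:
            # Start a new pass with a lower threshold; wrap back to the maximum.
            current -= step
            if current <= 0:
                current = max_w
        if weights[names[i]] >= current:
            yield names[i]


def weighted_least_connection(servers):
    """Pick the server with the fewest active connections per unit of weight.

    servers: dict mapping server name -> (active_connections, weight).
    """
    return min(servers, key=lambda name: servers[name][0] / servers[name][1])


print(list(islice(weighted_round_robin({"web1": 3, "web2": 4}), 7)))
print(weighted_least_connection({"web1": (10, 1), "web2": (15, 2)}))
```

Over any seven consecutive picks with weights 3 and 4, web1 is chosen three times and web2 four times.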

# ipvsadm

ipvsadm is the command-line tool for LVS; the scheduling itself is done by IPVS in the kernel.

    yum install -y ipvsadm

    [root@iZuf60im9c63xjnpkubjq7Z ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  139.196.42.189:80 rr
    TCP  139.196.42.189:15021 rr
    TCP  192.168.0.1:443 rr
      -> 10.0.0.236:6443              Masq    1      3          1
      -> 10.0.0.239:6443              Masq    1      4          1

ipvsadm options:

    -A  create a virtual server (similar to an nginx upstream)
    -D  delete a virtual server
    -E  edit a virtual server
    -C  clear all rules
    -a  add a real server
    -e  edit a real server
    -d  delete a real server
    -L  list rules
    -s [rr|wrr|lc|wlc|sh]  choose the scheduling algorithm
    -t  TCP service
    -u  UDP service

Create a virtual server and add two real servers:

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -A -t 172.1.1.1:38888 -s wrr
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -a -t 172.1.1.1:38888 -r 172.1.2.1 -w 3
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -a -t 172.1.1.1:38888 -r 172.1.2.2 -w 4
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.1.1.1:38888 wrr
      -> 172.1.2.1:38888              Route   3      0          0
      -> 172.1.2.2:38888              Route   4      0          0

If no forwarding mode is given, the default is DR mode.

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -a -t 172.1.1.1:38888 -r 172.1.2.3 -w 4 -m
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.1.1.1:38888 wrr
      -> 172.1.2.1:38888              Route   3      0          0
      -> 172.1.2.2:38888              Route   4      0          0
      -> 172.1.2.3:38888              Masq    4      0          0

Forwarding-mode flags:

    -g  direct routing (DR) mode, the default
    -m  masquerading, i.e. NAT mode
    -i  tunnel (TUN) mode

Change the algorithm to lc. The Tunnel entry for 172.1.2.4 in the listings below was presumably added with -i; that command is not part of these notes.

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -E -t 172.1.1.1:38888 -s lc
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.1.1.1:38888 lc
      -> 172.1.2.1:38888              Route   3      0          0
      -> 172.1.2.2:38888              Route   4      0          0
      -> 172.1.2.3:38888              Masq    4      0          0
      -> 172.1.2.4:38888              Tunnel  4      0          0

Edit a real server (switch it to NAT mode with weight 2):

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -e -t 172.1.1.1:38888 -r 172.1.2.1 -m -w 2
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.1.1.1:38888 lc
      -> 172.1.2.1:38888              Masq    2      0          0
      -> 172.1.2.2:38888              Route   4      0          0
      -> 172.1.2.3:38888              Masq    4      0          0
      -> 172.1.2.4:38888              Tunnel  4      0          0

Delete a real server:

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -d -t 172.1.1.1:38888 -r 172.1.2.2
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.1.1.1:38888 lc
      -> 172.1.2.1:38888              Masq    2      0          0
      -> 172.1.2.3:38888              Masq    4      0          0
      -> 172.1.2.4:38888              Tunnel  4      0          0

Delete the virtual server:

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -D -t 172.1.1.1:38888
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn

Save the rules permanently:

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm-save -n > /etc/sysconfig/ipvsadm

Clear everything:

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -C
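When the same rules must be recreated often, the command lines can be generated from a small description. The sketch below only builds strings and runs nothing; the function name `ipvsadm_commands` and the tuple layout are assumptions made for illustration, while the flags are the ones used in this section.

```python
import shlex

# Forwarding-mode flags of ipvsadm: DR (-g), NAT (-m), TUN (-i).
FORWARD_FLAGS = {"dr": "-g", "nat": "-m", "tun": "-i"}


def ipvsadm_commands(vip, port, scheduler, real_servers):
    """Return the ipvsadm command lines for one TCP virtual service.

    real_servers: iterable of (rip, weight, mode), mode in FORWARD_FLAGS.
    """
    service = f"{vip}:{port}"
    commands = [["ipvsadm", "-A", "-t", service, "-s", scheduler]]
    for rip, weight, mode in real_servers:
        commands.append(
            ["ipvsadm", "-a", "-t", service, "-r", rip,
             FORWARD_FLAGS[mode], "-w", str(weight)]
        )
    return [shlex.join(cmd) for cmd in commands]


for line in ipvsadm_commands(
    "172.1.1.1", 38888, "wrr",
    [("172.1.2.1", 3, "dr"), ("172.1.2.2", 4, "dr"), ("172.1.2.3", 4, "nat")],
):
    print(line)
```

The printed lines can be reviewed first and then pasted into a root shell on the director.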

# Experiment: NAT mode

net.ipv4.ip_forward = 1 turns on route forwarding on the director.

    # takes effect immediately, lost on reboot
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# echo 1 > /proc/sys/net/ipv4/ip_forward
    # check the current value
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# cat /proc/sys/net/ipv4/ip_forward
    # permanent; append with echo, do not open this file in vim
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

Steps:

1. Start the web servers (apache or nginx).

2. Stop the firewall and put SELinux in permissive mode:

    systemctl stop firewalld
    setenforce 0

3. Create the rules on the director:

    ipvsadm -A -t 192.168.4.5:80 -s wrr
    ipvsadm -a -t 192.168.4.5:80 -r 192.168.2.100:80 -w 1 -m

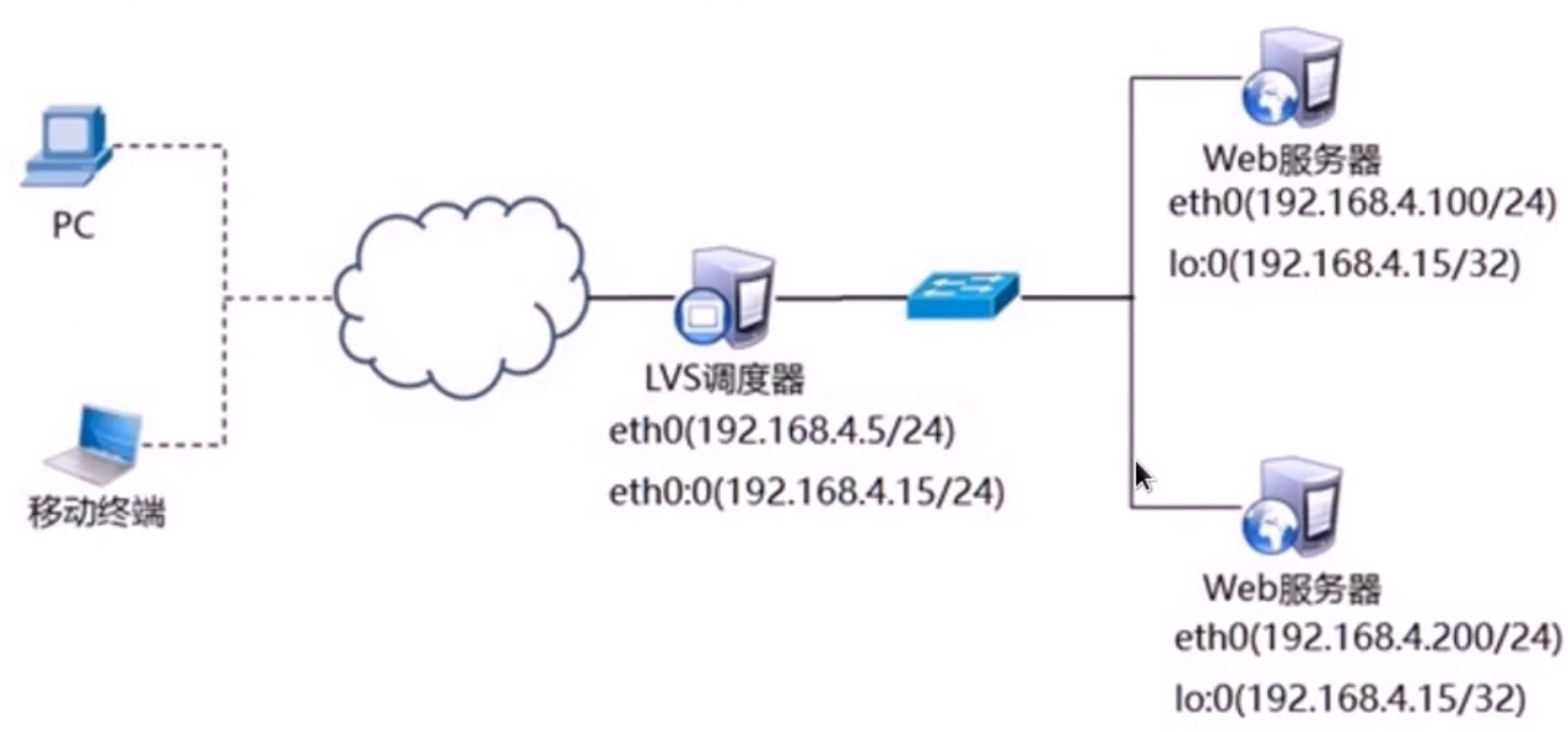

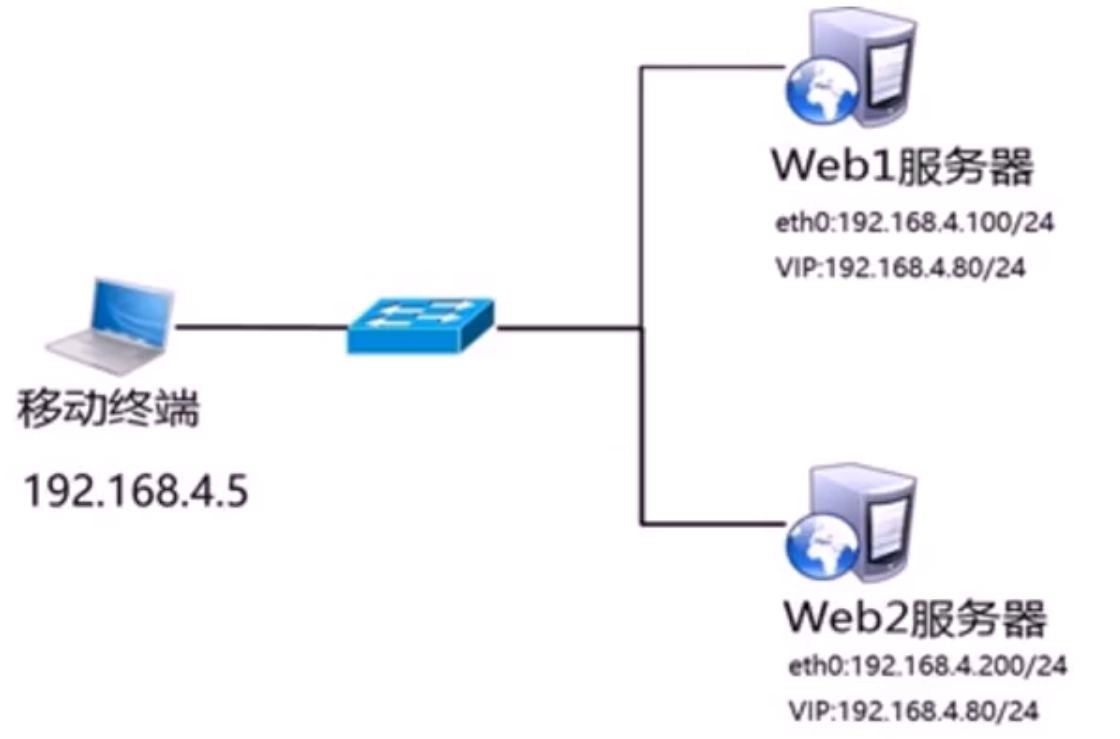

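# Experiment: LVS-DR

Use LVS in DR mode as the cluster scheduler to provide a web service to users:

The client IP address is 192.168.4.10

The VIP of the LVS director is 192.168.4.15

The DIP of the LVS director is 192.168.4.5

The real web servers are 192.168.4.100 and 192.168.4.200

Use the weighted round robin algorithm, with weight 1 for web1 and weight 2 for web2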

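In the simplest case the PC and the web servers must be on the same subnet. If the PC is on the public internet, the web servers need public IPs as well.

A router can be added so that the web servers can return data to the client.

Each web server has to add an extra IP to itself and pose as the VIP; otherwise the PC would simply drop the web server's replies.

That causes an address conflict, so kernel parameters must be changed and the VIP must be configured as a secondary IP.

On the director the VIP must be kept as a hidden, secondary address: the first IP on a NIC is the primary IP and any later ones are secondary IPs.

Others can ping every one of our IPs, but when we initiate a connection the primary IP is used as the source IP.

PC -> eth0:0, then eth0 -> web server. The DIP is therefore the address on eth0; the DIP is always the IP of the primary interface.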

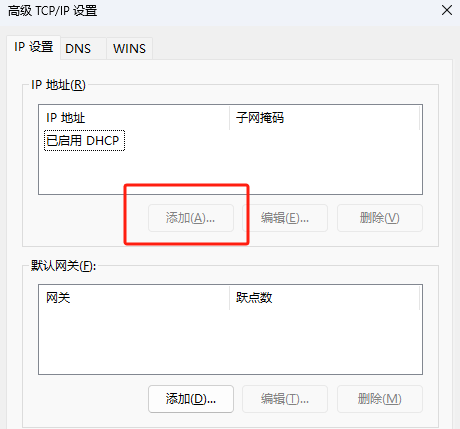

For example, Windows also lets you add several IPs to one interface.

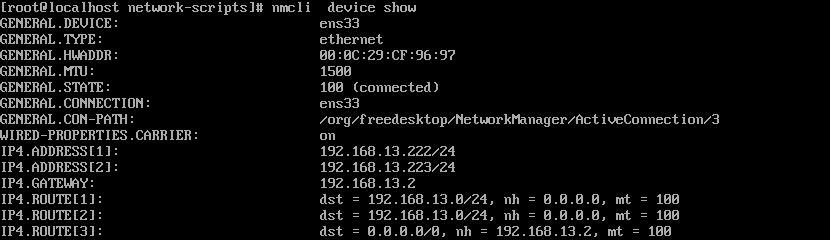

On the director:

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# cp /etc/sysconfig/network-scripts/ifcfg-eth0{,:0}
    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ll /etc/sysconfig/network-scripts/ifcfg-eth0
    ifcfg-eth0      # primary interface
    ifcfg-eth0:0    # virtual interface

    vim /etc/sysconfig/network-scripts/ifcfg-eth0:0

    BOOTPROTO=none
    DEVICE=eth0:0
    ONBOOT=yes
    NAME=eth0:0
    STARTMODE=auto
    TYPE=Ethernet
    USERCTL=no

The recorded file omits the address lines; the VIP 192.168.4.15 has to be set in this file as well (IPADDR plus the matching netmask).

    systemctl restart network

On CentOS 7 both network and NetworkManager can manage the NICs.

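On each web server, pose as the VIP so that replies to the client carry the VIP as source. Add the VIP on lo:

    cp /etc/sysconfig/network-scripts/ifcfg-lo{,:0}

    # /etc/sysconfig/network-scripts/ifcfg-lo:0
    IPADDR=192.168.4.15
    NETMASK=255.255.255.255    # must be all 255
    NETWORK=192.168.4.15
    NAME=lo:0

Make the web server stay silent about 192.168.4.15, that is, ignore ARP broadcasts for it:

    vim /etc/sysctl.conf

    # ignore ARP requests for addresses that are not on the receiving interface
    net.ipv4.conf.all.arp_ignore = 1
    net.ipv4.conf.lo.arp_ignore = 1
    # do not announce the lo address to the outside
    net.ipv4.conf.lo.arp_announce = 2
    net.ipv4.conf.all.arp_announce = 2

    sysctl -p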

On the director. As recorded, both real servers get weight 2; use -w 1 for 192.168.4.100 to match the weights stated above.

    ipvsadm -A -t 192.168.4.15:80 -s wrr
    ipvsadm -a -t 192.168.4.15:80 -r 192.168.4.100 -g -w 2
    ipvsadm -a -t 192.168.4.15:80 -r 192.168.4.200 -g -w 2

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.4.15:80 wrr
      -> 192.168.4.100:80             Route   2      0          0
      -> 192.168.4.200:80             Route   2      0          0

# keepalived

1. Configures the LVS rules automatically

2. Performs health checks

3. Implements VRRP

# Experiment

On web1 and web2:

    yum install keepalived

    ! Configuration File for keepalived

    ! Global configuration
    global_defs {
       notification_email {
         acassen@firewall.loc
         failover@firewall.loc
         sysadmin@firewall.loc
       }
       notification_email_from Alexandre.Cassen@firewall.loc
       smtp_server 192.168.200.1
       smtp_connect_timeout 30
       router_id LVS_DEVEL        # change this; it must differ between the two nodes
       vrrp_skip_check_adv_addr
       vrrp_strict
       vrrp_garp_interval 0
       vrrp_gna_interval 0
    }

    ! VRRP configuration
    vrrp_instance VI_1 {
        state MASTER              # MASTER or BACKUP
        interface eth0            # NIC that carries the VIP
        virtual_router_id 51      # VRID, identical on both nodes
        priority 100              # higher wins
        advert_int 1
        authentication {          # password identical on both nodes
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.200.16
            192.168.200.17
            192.168.200.18
        }
    }

    systemctl restart keepalived

After the restart keepalived has inserted a DROP rule. Remove it:

    [root@iZuf62eeabxrpfw1zarmjsZ ~]# iptables -nxvL
    Chain INPUT (policy ACCEPT 59 packets, 2888 bytes)
        pkts      bytes target     prot opt in     out     source               destination
           0        0 DROP       all  --  *      *       0.0.0.0/0            0.0.0.0/0            match-set keepalived dst

While keepalived is running, the VIPs are present on eth0:

    [root@iZuf62eeabxrpfw1zarmjsZ ~]# ip a s eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:16:3e:26:8f:50 brd ff:ff:ff:ff:ff:ff
        inet 10.0.0.250/24 brd 10.0.0.255 scope global dynamic eth0
           valid_lft 315253049sec preferred_lft 315253049sec
        inet 192.168.200.16/32 scope global eth0
           valid_lft forever preferred_lft forever
        inet 192.168.200.17/32 scope global eth0
           valid_lft forever preferred_lft forever
        inet 192.168.200.18/32 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::216:3eff:fe26:8f50/64 scope link
           valid_lft forever preferred_lft forever

After keepalived is stopped, the VIPs are gone:

    [root@iZuf62eeabxrpfw1zarmjsZ ~]# systemctl stop keepalived.service
    [root@iZuf62eeabxrpfw1zarmjsZ ~]# ip -c a
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:16:3e:26:8f:50 brd ff:ff:ff:ff:ff:ff
        inet 10.0.0.250/24 brd 10.0.0.255 scope global dynamic eth0
           valid_lft 315253013sec preferred_lft 315253013sec
        inet6 fe80::216:3eff:fe26:8f50/64 scope link
           valid_lft forever preferred_lft forever
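The failover seen in this experiment follows from the VRRP election rule: among the nodes that are still advertising, the one with the highest priority holds the VIPs. The sketch below is a simplified model of that rule, not keepalived's implementation; the function name, the dictionary layout and the second node are assumptions, and ties are broken by the higher IP address.

```python
from ipaddress import ip_address


def elect_master(nodes):
    """Return the name of the node that should hold the VIP, or None.

    nodes: list of dicts with keys name, ip, priority, alive.
    """
    alive = [n for n in nodes if n["alive"]]
    if not alive:
        return None
    # Highest priority wins; on a tie the higher primary IP wins.
    best = max(alive, key=lambda n: (n["priority"], int(ip_address(n["ip"]))))
    return best["name"]


cluster = [
    {"name": "web1", "ip": "10.0.0.250", "priority": 100, "alive": True},
    {"name": "web2", "ip": "10.0.0.251", "priority": 90, "alive": True},
]
print(elect_master(cluster))   # web1 holds the VIPs
cluster[0]["alive"] = False    # corresponds to: systemctl stop keepalived on web1
print(elect_master(cluster))   # web2 takes over
```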

# Experiment

The global_defs and vrrp_instance sections are identical to the previous experiment. A virtual_server section is added so that keepalived also creates the LVS rules and health-checks the real servers:

    virtual_server 192.168.200.100 443 {
        delay_loop 6                  # interval between health checks, in seconds
        lb_algo rr                    # scheduling algorithm
        lb_kind NAT                   # LVS mode
        !persistence_timeout 50       # connection persistence, commented out
        protocol TCP                  # same as ipvsadm -t

        real_server 192.168.201.101 443 {
            weight 1                  # weight
            SSL_GET {                 # health check: TCP_CHECK, HTTP_GET or SSL_GET
                url {
                  path /
                  digest ff20ad2481f97b1754ef3e12ecd3a9cc
                }
                url {
                  path /mrtg/
                  digest 9b3a0c85a887a256d6939da88aabd8cd
                }
                connect_timeout 3     # connection timeout of 3 s
                nb_get_retry 3        # retry 3 times
                delay_before_retry 3  # wait 3 s before each retry
            }
        }

        real_server 192.168.201.100 443 {
            weight 1
            SSL_GET {
                url {
                  path /
                  digest ff20ad2481f97b1754ef3e12ecd3a9cc
                }
                url {
                  path /mrtg/
                  digest 9b3a0c85a887a256d6939da88aabd8cd
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }

Health check methods

There are three: TCP_CHECK, HTTP_GET and SSL_GET. The keywords must be written in upper case.

    real_server 192.168.1.1 80 {
        TCP_CHECK {                   # only checks that the TCP port accepts connections
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }

    real_server 192.168.1.1 80 {
        HTTP_GET {                    # fetches the URL and compares the MD5 of the response
            url {
                path /index.html
                digest md5xxx
            }
        }
    }

    real_server 192.168.1.1 443 {
        SSL_GET {                     # same as HTTP_GET, but over HTTPS
            url {
                path /index.html
                digest md5xxx
            }
        }
    }
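What a TCP check with retries does can be sketched in a few lines of Python. This is an illustration of the idea rather than keepalived's code: the function names are hypothetical, the parameters merely mirror connect_timeout, nb_get_retry and delay_before_retry, and the digest helper assumes the digest is the MD5 of the response body.

```python
import hashlib
import socket
import time


def tcp_check(host, port, connect_timeout=3.0, retries=3, delay_before_retry=3.0):
    """Return True if a TCP connection succeeds within 1 + retries attempts."""
    for attempt in range(retries + 1):
        try:
            with socket.create_connection((host, port), timeout=connect_timeout):
                return True
        except OSError:
            # Refused, unreachable or timed out: wait, then try again.
            if attempt < retries:
                time.sleep(delay_before_retry)
    return False


def content_digest(body: bytes) -> str:
    """MD5 hex digest of a response body, to compare with a digest entry."""
    return hashlib.md5(body).hexdigest()


if __name__ == "__main__":
    # Port 80 on localhost is only an example target.
    print(tcp_check("127.0.0.1", 80, connect_timeout=1, retries=1, delay_before_retry=1))
    print(content_digest(b"hello"))
```

A real server would be marked down only after every attempt has failed, which avoids flapping on a single lost packet.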

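# haproxy

# nginx vs. LVS vs. HAProxy

nginx

Advantages

- Works at layer 7 and can split traffic based on HTTP
- Supports layer-4 proxying since version 1.9
- Regular expressions are more powerful than HAProxy's
- Easy to install, configure and test; most problems can be diagnosed from the logs
- Handles tens of thousands of concurrent connections
- Can also serve as a web server

Disadvantages

- Supports only HTTP, HTTPS and mail protocols, so its range of use is narrow
- Health checks are port-based only; it cannot check by URL

LVS

Advantages

- High load capacity; works at layer 4 with low memory and CPU consumption
- Little to configure, which reduces human error
- Widely applicable; it can load-balance almost any application

Disadvantages

- No regular expressions, so it cannot separate static and dynamic content
- On a large site architecture, LVS-DR configuration becomes tedious

HAProxy

Advantages

- Supports session and cookie features
- Can health-check by URL
- Efficiency and load-balancing speed are higher than nginx and lower than LVS
- Supports TCP, so it can load-balance MySQL
- Rich set of scheduling algorithms

Disadvantages

- Regular expressions are weaker than nginx's
- Logging depends on syslogd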

# Experiment

    [root@iZ2ze79b6r8gdeaxivwkxoZ ~]# yum install haproxy -y
    vim /etc/haproxy/haproxy.cfg

    global                            # global settings
        log         127.0.0.1 local2
        chroot      /var/lib/haproxy
        pidfile     /var/run/haproxy.pid
        user        haproxy
        group       haproxy
        daemon
        maxconn     4000

    defaults                          # default settings
        # mode is one of: tcp (layer 4), http (layer 7), health (health check only)
        mode                    http
        log                     global
        option                  httplog
        option                  dontlognull
        retries                 3
        timeout http-request    5s
        timeout queue           1m
        timeout connect         5s
        timeout client          1m
        timeout server          1m
        timeout http-keep-alive 5s
        timeout check           5s
        maxconn                 3000

    frontend main
        bind *:80
        default_backend http_back

    backend http_back
        balance     roundrobin
        server  node1 127.0.0.1:5001 check
        server  node2 127.0.0.1:5002 check
        server  node3 127.0.0.1:5003 check
        server  node4 127.0.0.1:5004 check

The default configuration file shipped with the package:

    [root@iZ2zeemxfrv2vvgeutzfnpZ ~]# cat /etc/haproxy/haproxy.cfg
    #---------------------------------------------------------------------
    # Example configuration for a possible web application.  See the
    # full configuration options online.
    #
    #   http://haproxy.1wt.eu/download/1.4/doc/configuration.txt
    #
    #---------------------------------------------------------------------

    #---------------------------------------------------------------------
    # Global settings
    #---------------------------------------------------------------------
    global
        # to have these messages end up in /var/log/haproxy.log you will
        # need to:
        #
        # 1) configure syslog to accept network log events.  This is done
        #    by adding the '-r' option to the SYSLOGD_OPTIONS in
        #    /etc/sysconfig/syslog
        #
        # 2) configure local2 events to go to the /var/log/haproxy.log
        #   file. A line like the following can be added to
        #   /etc/sysconfig/syslog
        #
        #    local2.*                       /var/log/haproxy.log
        #
        log         127.0.0.1 local2

        chroot      /var/lib/haproxy
        pidfile     /var/run/haproxy.pid
        maxconn     4000
        user        haproxy
        group       haproxy
        daemon

        # turn on stats unix socket
        stats socket /var/lib/haproxy/stats

    #---------------------------------------------------------------------
    # common defaults that all the 'listen' and 'backend' sections will
    # use if not designated in their block
    #---------------------------------------------------------------------
    defaults
        mode                    http
        log                     global
        option                  httplog
        option                  dontlognull
        option http-server-close
        option forwardfor       except 127.0.0.0/8
        option                  redispatch
        retries                 3
        timeout http-request    10s
        timeout queue           1m
        timeout connect         10s
        timeout client          1m
        timeout server          1m
        timeout http-keep-alive 10s
        timeout check           10s
        maxconn                 3000

    #---------------------------------------------------------------------
    # main frontend which proxys to the backends
    #---------------------------------------------------------------------
    frontend  main *:5000
        acl url_static       path_beg       -i /static /images /javascript /stylesheets
        acl url_static       path_end       -i .jpg .gif .png .css .js

        use_backend static          if url_static
        default_backend             app

    #---------------------------------------------------------------------
    # static backend for serving up images, stylesheets and such
    #---------------------------------------------------------------------
    backend static
        balance     roundrobin
        server      static 127.0.0.1:4331 check

    #---------------------------------------------------------------------
    # round robin balancing between the various backends
    #---------------------------------------------------------------------
    backend app
        balance     roundrobin
        server  app1 127.0.0.1:5001 check
        server  app2 127.0.0.1:5002 check
        server  app3 127.0.0.1:5003 check
        server  app4 127.0.0.1:5004 check
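The routing decision made by the `url_static` ACL in the default configuration can be mirrored in a few lines of Python. This is only a sketch of the matching logic (`path_beg` and `path_end` with `-i` for case-insensitive comparison); the function name `choose_backend` is an assumption.

```python
# Prefixes and suffixes copied from the url_static ACL lines.
STATIC_PREFIXES = ("/static", "/images", "/javascript", "/stylesheets")
STATIC_SUFFIXES = (".jpg", ".gif", ".png", ".css", ".js")


def choose_backend(path: str) -> str:
    """Return 'static' if the path matches url_static, else the default 'app'."""
    lowered = path.lower()  # -i makes the match case-insensitive
    if lowered.startswith(STATIC_PREFIXES) or lowered.endswith(STATIC_SUFFIXES):
        return "static"
    return "app"


for p in ("/images/logo.PNG", "/api/users", "/theme/site.css", "/Static/x"):
    print(p, "->", choose_backend(p))
```

Two ACL lines with the same name are combined with OR, which is why a single condition covers both prefix and suffix matches.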

Cluster definition, format 1 (frontend plus backend):

    frontend  main *:5000
        acl url_static       path_beg       -i /static /images /javascript /stylesheets
        acl url_static       path_end       -i .jpg .gif .png .css .js

        use_backend static          if url_static
        default_backend             app

    #---------------------------------------------------------------------
    # static backend for serving up images, stylesheets and such
    #---------------------------------------------------------------------
    backend static
        balance     roundrobin
        server      static 127.0.0.1:4331 check

Cluster definition, format 2 (a single listen section):

    listen servers *:80
        balance roundrobin
        # check every 2000 ms; 5 consecutive failures mark the server down,
        # 2 consecutive successes mark it up again
        server web1 1.1.1.1 check inter 2000 rise 2 fall 5
        server web2 1.1.1.2 check inter 2000 rise 2 fall 5
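The effect of `rise 2 fall 5` is easiest to see as a small state machine. The class below is a hedged sketch of that behaviour, not HAProxy's implementation; `HealthState` and `record` are names made up for this example.

```python
class HealthState:
    """Track a server's up/down state from consecutive check results."""

    def __init__(self, rise=2, fall=5, up=True):
        self.rise = rise      # consecutive successes needed to come back up
        self.fall = fall      # consecutive failures needed to go down
        self.up = up
        self.streak = 0       # consecutive results that contradict the state

    def record(self, ok: bool) -> bool:
        """Feed one check result and return the resulting state."""
        if ok == self.up:
            # The result agrees with the current state: reset the streak.
            self.streak = 0
        else:
            self.streak += 1
            needed = self.fall if self.up else self.rise
            if self.streak >= needed:
                self.up = not self.up
                self.streak = 0
        return self.up


state = HealthState(rise=2, fall=5)
results = [False] * 5 + [True] * 2
print([state.record(r) for r in results])
```

With these settings the server stays up through four failed checks, goes down on the fifth, and returns after two successful checks in a row.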

HAProxy status page

    listen stats 0.0.0.0:1080
        stats refresh 30s
        stats uri /stats
        stats realm Haproxy Manager
        stats auth admin:admin

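Columns on the status page:

- Queue: queue information (current queue length, maximum, queue limit)
- Session rate: sessions per second (current, maximum, limit)
- Sessions: session counts (current, maximum, total; LbTot is the total number of times a server was selected)
- Bytes: inbound and outbound traffic
- Denied: denied requests and denied responses
- Errors: request errors, connection errors and response errors
- Warnings: retries and redispatches
- Server: status, time since the last check, weight, number of backup servers, number of times the server went down, and downtime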

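# Storage

Storage types

DAS (direct-attached storage): IDE, SATA, SAS

NAS (network-attached storage): Samba, NFS, FTP; shares a file system

SAN (storage area network): iSCSI; shares block devices

SDS (software-defined storage), distributed storage: Ceph, GlusterFS, Hadoop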

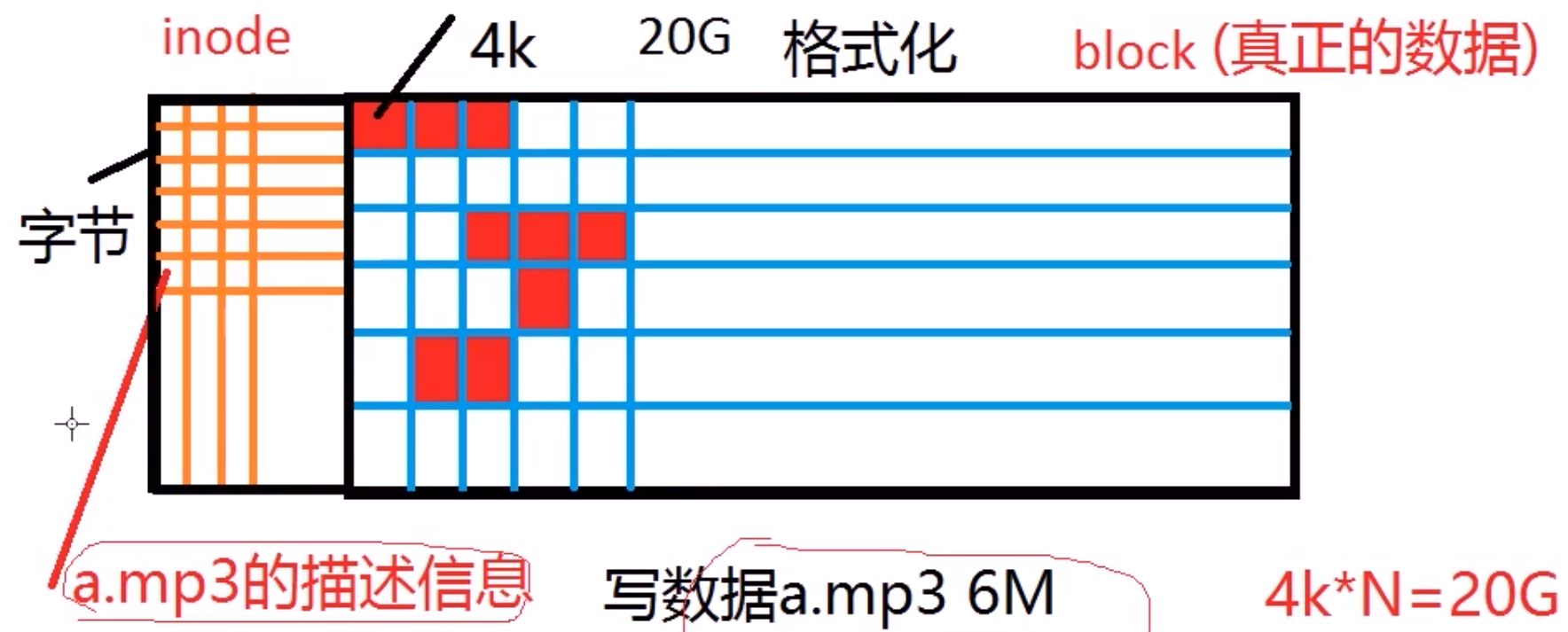

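Before formatting, storage is a block device; after formatting it carries a file system (xfs, ext4, ext3, FAT32, NTFS). A raw block device cannot be used directly for files.

In a distributed file system, the physical storage resources managed by the file system are not necessarily attached to the local node; they are connected to the nodes over a computer network.

Distributed file systems are designed on the client/server model.

Ceph is a distributed storage system.

It is highly scalable, highly available and high-performance.

Ceph can provide object storage, block storage and file system storage.

Ceph can provide storage at the EB scale. The units go EB → PB → TB → GB in steps of 1024, so 1 PB = 1024 × 1024 GB = 1,048,576 GB and 1 EB = 1024 PB.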
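The unit arithmetic can be checked with a short helper. This is a minimal sketch; the `convert` function and the `UNITS` list are names introduced here, and binary steps of 1024 are assumed throughout.

```python
UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB"]


def convert(value, src, dst):
    """Convert between storage units, each step being a factor of 1024."""
    steps = UNITS.index(src) - UNITS.index(dst)
    return value * 1024 ** steps


print(convert(1, "PB", "GB"))  # 1048576
print(convert(1, "EB", "GB"))  # 1073741824
print(convert(1, "EB", "PB"))  # 1024
```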

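# Ceph components

OSDs: the storage devices

Monitors: the cluster monitoring component

RadosGateway (RGW): the object storage gateway

MDSs: hold the file system metadata (not needed for object storage or block storage)

Client: the Ceph client

Mon and OSD are the mandatory components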

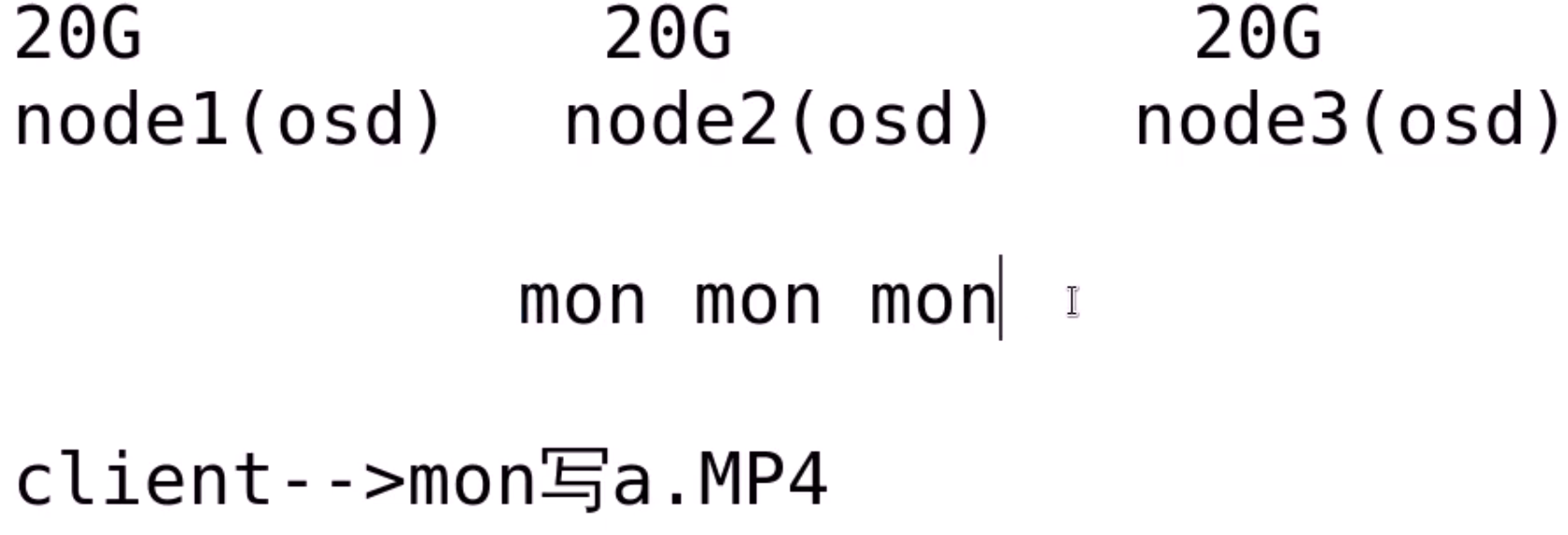

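The number of mons should be odd because of the majority rule: more than half of the mons in the cluster must be healthy. Start with at least three.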
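The majority rule explains why an odd number is preferred, as the following sketch shows. The two function names are assumptions chosen for this example.

```python
def quorum_size(total_mons: int) -> int:
    """Smallest number of healthy mons that is more than half of the total."""
    return total_mons // 2 + 1


def tolerable_failures(total_mons: int) -> int:
    """How many mons may fail while a majority still remains."""
    return total_mons - quorum_size(total_mons)


for n in (1, 2, 3, 4, 5):
    print(n, "mons: quorum", quorum_size(n), "- can lose", tolerable_failures(n))
```

Going from three mons to four does not increase the number of failures the cluster survives, so the extra even-numbered mon adds cost without adding resilience.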

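Ceph splits a file into many 4 MB chunks and writes the chunks to different storage devices at the same time, so the more OSDs there are, the faster it gets.

This can be thought of as RAID 0.

Ceph keeps 3 replicas by default.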

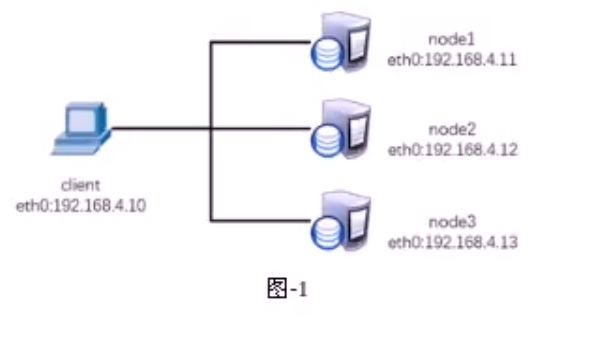

# Experiment

Every machine runs both Ceph and a mon

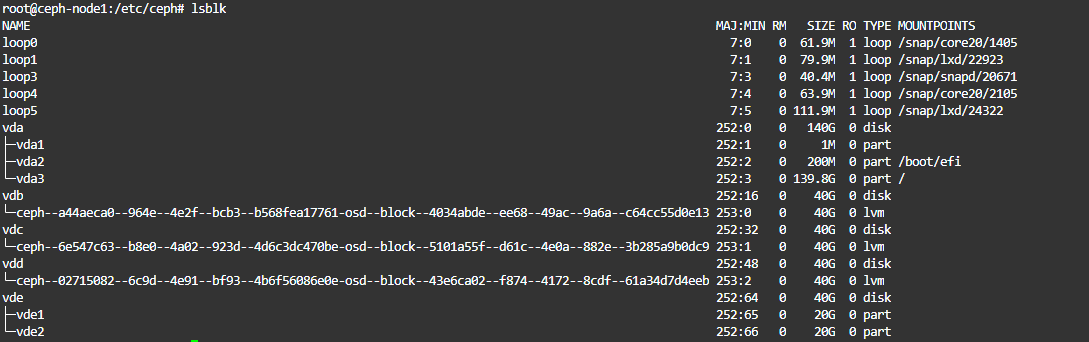

Build a local repo source (this generates repodata):

    yum install createrepo
    createrepo /opt/repo

Configure time synchronisation:

    vim /etc/chrony.conf
    server ntp.cloud.aliyuncs.com minpoll 4 maxpoll 10 iburst

    [root@iZuf62eeabxrpfw1zarmjsZ ~]# systemctl restart chronyd.service
    [root@iZuf62eeabxrpfw1zarmjsZ ~]# chronyc sources
    210 Number of sources = 15
    MS Name/IP address         Stratum Poll Reach LastRx Last sample
    ===============================================================================
    ^* 100.100.61.88                 1   4    77    13   +427us[ +123us] +/-   10ms
    ^+ 203.107.6.88                  2   4    77    11   +354us[ +354us] +/-   19ms
    ^- 120.25.115.20                 2   4   146    39  +1189us[ +652us] +/-   15ms
    ^? 10.143.33.49                  0   6     0     -     +0ns[   +0ns] +/-    0ns
    ^+ 100.100.3.1                   2   4    77    14   +186us[ -119us] +/- 4027us
    ^+ 100.100.3.2                   2   4    77    12   +374us[ +374us] +/- 3623us
    ^- 100.100.3.3                   2   4   137     8  +1220us[+1220us] +/- 4204us
    ^? 10.143.33.50                  0   6     0     -     +0ns[   +0ns] +/-    0ns
    ^? 10.143.33.51                  0   6     0     -     +0ns[   +0ns] +/-    0ns
    ^? 10.143.0.44                   0   6     0     -     +0ns[   +0ns] +/-    0ns
    ^? 10.143.0.45                   0   6     0     -     +0ns[   +0ns] +/-    0ns
    ^? 10.143.0.46                   0   6     0     -     +0ns[   +0ns] +/-    0ns
    ^+ 100.100.5.1                   2   4    77    14    -86us[ -391us] +/- 4384us
    ^? 100.100.5.2                   2   5    10    42    +30us[ -507us] +/- 3984us
    ^+ 100.100.5.3                   2   4    77    12   +894us[ +894us] +/- 4213us

Add three disks to each virtual machine and verify with lsblk:

    yum install virt-manager
    virt-manager
    lsblk

On a machine that can reach every node over SSH without a password (here: chenge-port-engine):

    yum install -y ceph-deploy
    curl https://bootstrap.pypa.io/ez_setup.py -o - | python

ceph-deploy is an automated deployment script:

    [root@iZuf69j04rlrdiwile6mjfZ ~]# cat /usr/bin/ceph-deploy
    #!/usr/bin/python2
    # EASY-INSTALL-ENTRY-SCRIPT: 'ceph-deploy==1.5.25','console_scripts','ceph-deploy'
    __requires__ = 'ceph-deploy==1.5.25'
    import sys
    from pkg_resources import load_entry_point

    if __name__ == '__main__':
        sys.exit(
            load_entry_point('ceph-deploy==1.5.25', 'console_scripts', 'ceph-deploy')()
        )

    ceph-deploy --help

Set the hostname and /etc/hosts on every node:

    vim /etc/hosts
    47.116.119.177 node1
    139.224.202.165 node2
    47.116.198.184 node3

Set up the yum source on every node:

    vim /etc/yum.repos.d/ceph.repo
    [ceph]
    baseurl = https://mirrors.aliyun.com/ceph/rpm-luminous/el7/x86_64
    enabled = 1
    gpgcheck = 0
    name = Ceph packages
    priority = 1

    yum makecache fast

Install the packages locally and on the other nodes:

    yum install ceph-mon.x86_64 ceph-osd.x86_64 ceph-mds.x86_64 ceph-selinux.x86_64 ceph-common.x86_64 ceph-base.x86_64 ceph-radosgw.x86_64 -y

    [root@node1 ceph-cluster]# for i in node2 node3
    > do
    > ssh $i " yum install ceph-mon.x86_64 ceph-osd.x86_64 ceph-mds.x86_64 ceph-selinux.x86_64 ceph-common.x86_64 ceph-base.x86_64 ceph-radosgw.x86_64 -y"
    > done

Create a working directory. From now on every ceph-deploy command must be run inside it:

    mkdir ceph-cluster
    cd ceph-cluster
    ceph-deploy new node1 node2 node3 --no-ssh-copykey

Verify the generated configuration:

    [root@node1 ceph-cluster]# cat ceph.conf
    [global]
    fsid = 9bfb5b39-ada6-4d34-b08b-3fb83c7952d3
    mon_initial_members = node1, node2, node3
    mon_host = 47.116.119.177,139.224.202.165,47.116.198.184
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    filestore_xattr_use_omap = true

For the auth_* settings, none means access needs no password and cephx means access needs a key; cephx acts as the placeholder for that key.

The main configuration directory is /etc/ceph:

    [root@node3 ~]# ls /etc/ceph/

    [root@node1 ceph-cluster]# ceph-deploy mon create-initial

If this step fails with

    [ceph_deploy.mon][ERROR ] Failed to execute command: /usr/sbin/service ceph -c /etc/ceph/ceph.conf start mon.ceph1

the ceph-deploy version is the problem; do not use 1.5.25. See the following article for updating Ceph: https://www.cnblogs.com/weiwei2021/p/14060186.html

http://www.js-code.com/xindejiqiao/xindejiqiao_246488.html

https://cloud.tencent.com/developer/article/1965164

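Note: with current releases, installing through ceph-deploy fails. ceph-deploy 1.5.25 hits an lsb error, and ceph-deploy 2.0 fails as well. Use cephadm to install current releases.

The links below describe a working installation. Do not install version 17, because node-exporter fails there; install version 18.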

Deploying a new Ceph cluster — Ceph Documentation

[Linux | Ceph | Ubuntu 中部署 Ceph 集群 - 隔江千万里 - 博客园 (cnblogs.com )](https://www.cnblogs.com/FutureHolmes/p/15424559.html#:~:text=Ceph 部署 %3A 手动 1 1. 配置 Ceph,7 7. 安装 Cephadm (所有节点) 8 8. 安装 Ceph)

Ubuntu 22.04 安装 ceph 集群 | 小汪老师 (xwls.github.io)

Verification. On every node:

    root@ceph-node2:~# systemctl status ceph-ed3dd87c-b48a-11ee-9675-056f4225bb69@mon.ceph-node2.service
    ● ceph-ed3dd87c-b48a-11ee-9675-056f4225bb69@mon.ceph-node2.service - Ceph mon.ceph-node2 for ed3dd87c-b48a-11ee-9675-056f4225bb69
         Loaded: loaded (/etc/systemd/system/ceph-ed3dd87c-b48a-11ee-9675-056f4225bb69@.service; enabled; vendor preset: enabled)
         Active: active (running) since Thu 2024-01-18 21:37:02 CST; 5min ago
       Main PID: 26494 (bash)
          Tasks: 9 (limit: 8672)
         Memory: 8.1M
            CPU: 97ms
         CGroup: /system.slice/system-ceph\x2ded3dd87c\x2db48a\x2d11ee\x2d9675\x2d056f4225bb69.slice/ceph-ed3dd87c-b48a-11ee-9675-056f4225bb69@mon.ceph-node2.service
                 ├─26494 /bin/bash /var/lib/ceph/ed3dd87c-b48a-11ee-9675-056f4225bb69/mon.ceph-node2/unit.run
                 └─26510 /usr/bin/docker run --rm --ipc=host --stop-signal=SIGTERM --ulimit nofile=1048576 --net=host --entrypoint /usr/bin/ceph-mon --privileged --group-add=disk --init --name ceph-ed3dd87c-b48a-11ee-9675-056f4225bb69-mon-ceph-node2 --pids-limit=0 -e CONTAIN>

On a mon node:

    root@ceph-node1:~# ceph -s
      cluster:
        id:     ed3dd87c-b48a-11ee-9675-056f4225bb69
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum ceph-node1,ceph-node2,ceph-node3 (age 4m)
        mgr: ceph-node1.lhhwcs(active, since 45h), standbys: ceph-node2.svhmyy
        osd: 9 osds: 9 up (since 3m), 9 in (since 3m)

      data:
        pools:   1 pools, 1 pgs
        objects: 2 objects, 449 KiB
        usage:   640 MiB used, 359 GiB / 360 GiB avail
        pgs:     1 active+clean

The admin keyring holds the Ceph user name and its key:

    root@ceph-node1:/etc/ceph# cat ceph.client.admin.keyring
    [client.admin]
            key = AQCerKZlXFkFBxAADiQXY27WFREVjjpPg8gikg==
            caps mds = "allow *"
            caps mgr = "allow *"
            caps mon = "allow *"
            caps osd = "allow *"

Once a disk has been added as an OSD it is converted to LVM format

Add one ESSD AutoPL disk as the cache disk

    for i in ceph-node1 ceph-node2 ceph-node3
    do
        ssh $i "parted /dev/vde mklabel gpt"
        ssh $i "parted /dev/vde mkpart primary 1 50%"
        ssh $i "parted /dev/vde mkpart primary 50% 100%"
    done

Run on all nodes. Check the device permissions first:

    root@ceph-node1:/etc/ceph# ll /dev/vde*
    brw-rw---- 1 root disk 252, 64 Jan 18 22:05 /dev/vde
    brw-rw---- 1 root disk 252, 65 Jan 18 22:05 /dev/vde1
    brw-rw---- 1 root disk 252, 66 Jan 18 22:05 /dev/vde2

The partitions must belong to ceph. The loop has to go through ssh, otherwise it only changes the local node:

    for i in ceph-node1 ceph-node2 ceph-node3; do
        ssh $i "chown ceph:ceph /dev/vde1 /dev/vde2"
    done

To make this permanent, add a udev rule (DEVNAME is the udev property that holds the device path):

    vim /etc/udev/rules.d/70-vde.rules
    ENV{DEVNAME}=="/dev/vde1",OWNER="ceph",GROUP="ceph"
    ENV{DEVNAME}=="/dev/vde2",OWNER="ceph",GROUP="ceph"

    root@ceph-node3:~# ll /dev/vde*
    brw-rw---- 1 root disk 252, 64 Jan 18 22:05 /dev/vde
    brw-rw---- 1 ceph ceph 252, 65 Jan 18 22:05 /dev/vde1
    brw-rw---- 1 ceph ceph 252, 66 Jan 18 22:05 /dev/vde2

List the pools:

    root@ceph-node1:/etc/ceph# ceph osd lspools

Create a pool:

    root@ceph-node1:/etc/ceph# ceph osd pool create pool-name

qcow2 is the image format used when creating virtual machines. The layering feature enables copy-on-write, which is what makes snapshots possible.

    root@ceph-node1:/etc/ceph# rbd create pool-name/demo --image-feature layering --size 20G
    root@ceph-node1:/etc/ceph# rbd create pool-name/images --image-feature layering --size 30G
    root@ceph-node1:/etc/ceph# rbd list pool-name
    demo
    images
    root@ceph-node1:/etc/ceph# rbd info pool-name/demo
    rbd image 'demo':
            size 20 GiB in 5120 objects
            order 22 (4 MiB objects)
            snapshot_count: 0
            id: 3885ef2f25ef
            block_name_prefix: rbd_data.3885ef2f25ef
            format: 2
            features: layering
            op_features:
            flags:
            create_timestamp: Thu Jan 18 22:59:25 2024
            access_timestamp: Thu Jan 18 22:59:25 2024
            modify_timestamp: Thu Jan 18 22:59:25 2024

Grow an RBD image online:

    root@ceph-node1:/etc/ceph# rbd resize --size 25G pool-name/demo
    Resizing image: 100% complete...done.
    root@ceph-node1:/etc/ceph# rbd info pool-name/demo
    rbd image 'demo':
            size 25 GiB in 6400 objects
            order 22 (4 MiB objects)
            snapshot_count: 0
            id: 3885ef2f25ef
            block_name_prefix: rbd_data.3885ef2f25ef
            format: 2
            features: layering
            op_features:
            flags:
            create_timestamp: Thu Jan 18 22:59:25 2024
            access_timestamp: Thu Jan 18 22:59:25 2024
            modify_timestamp: Thu Jan 18 22:59:25 2024

Shrink an RBD image online:

    root@ceph-node1:/etc/ceph# rbd resize --size 17G pool-name/demo --allow-shrink
    Resizing image: 100% complete...done.
    root@ceph-node1:/etc/ceph# rbd info pool-name/demo
    rbd image 'demo':
            size 17 GiB in 4352 objects
            order 22 (4 MiB objects)
            snapshot_count: 0
            id: 3885ef2f25ef
            block_name_prefix: rbd_data.3885ef2f25ef
            format: 2
            features: layering
            op_features:
            flags:
            create_timestamp: Thu Jan 18 22:59:25 2024
            access_timestamp: Thu Jan 18 22:59:25 2024
            modify_timestamp: Thu Jan 18 22:59:25 2024
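The object counts reported by `rbd info` follow directly from the image size and the object size (order 22 means 2^22 bytes, that is 4 MiB). The sketch below reproduces them and also estimates the raw space a fully written image would need with 3 replicas; both function names are assumptions, and the replica estimate ignores any metadata overhead.

```python
import math

GIB = 1024 ** 3


def object_count(size_gib: int, order: int = 22) -> int:
    """Number of objects an image of size_gib GiB is split into."""
    object_bytes = 2 ** order  # order 22 -> 4 MiB objects
    return math.ceil(size_gib * GIB / object_bytes)


def raw_usage_gib(size_gib: int, replicas: int = 3) -> int:
    """Raw capacity consumed once the image is completely written."""
    return size_gib * replicas


for size in (20, 25, 17):
    print(size, "GiB ->", object_count(size), "objects,",
          raw_usage_gib(size), "GiB raw with 3 replicas")
```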

Access from a client. On the client run:

    yum install ceph-common -y

Copy the conf file and the keyring to the client, then move them to /etc/ceph:

    root@ceph-node1:/etc/ceph# scp ceph.conf root@10.0.0.251:/root
    root@ceph-node1:/etc/ceph# scp ceph.client.admin.keyring root@10.0.0.251:/root

    [root@node1 ceph]# lsblk
    NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    vdd    253:48   0   40G  0 disk
    vdb    253:16   0   40G  0 disk
    vdc    253:32   0   40G  0 disk
    vda    253:0    0  140G  0 disk
    └─vda1 253:1    0  140G  0 part /

    [root@node1 ceph]# rbd map pool-name/demo
    /dev/rbd0
    [root@node1 ceph]# lsblk
    NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    rbd0   252:0    0   17G  0 disk
    vdd    253:48   0   40G  0 disk
    vdb    253:16   0   40G  0 disk
    vdc    253:32   0   40G  0 disk
    vda    253:0    0  140G  0 disk
    └─vda1 253:1    0  140G  0 part /

    [root@node1 ceph]# rbd showmapped
    id  pool       namespace  image  snap  device
    0   pool-name             demo   -     /dev/rbd0

Unmap a device:

    [root@node1 ceph]# rbd device unmap /dev/rbd1

https://blog.csdn.net/alex_yangchuansheng/article/details/123516025

Snapshots:

    root@ceph-node1:~# rbd snap create pool-name/demo --snap snap1
    Creating snap: 100% complete...done.
    root@ceph-node1:~# rbd snap ls pool-name/demo
    SNAPID  NAME   SIZE    PROTECTED  TIMESTAMP
         4  snap1  17 GiB             Sat Jan 20 14:26:44 2024

Simulate an accidental delete on the client:

    [root@node1 ppp]# rm -rf shabi
    [root@node1 /]# umount /ppp

Roll back on the Ceph side:

    root@ceph-node1:~# rbd snap rollback pool-name/demo --snap snap1
    Rolling back to snapshot: 100% complete...done.

Check on the client:

    [root@node1 /]# mount /dev/rbd0 /ppp
    [root@node1 /]# cd /ppp/
    [root@node1 ppp]# ls
    lost+found  shabi

Clone a new image from an existing snapshot. The snapshot must be protected first, and a protected snapshot cannot be removed:

    root@ceph-node1:~# rbd snap protect pool-name/demo --snap snap1
    root@ceph-node1:~# rbd snap rm pool-name/demo --snap snap1
    Removing snap: 0% complete...failed.2024-01-20T14:38:40.687+0800 7f788903be00 -1 librbd::Operations: snapshot is protected
    rbd: snapshot 'snap1' is protected from removal.

    root@ceph-node1:~# rbd clone pool-name/demo@snap1 pool-name/snap2
    root@ceph-node1:~# rbd list pool-name
    demo
    images
    snap2

    root@ceph-node1:~# rbd info pool-name/snap2
    rbd image 'snap2':
            size 17 GiB in 4352 objects
            order 22 (4 MiB objects)
            snapshot_count: 0
            id: 39cf34833fff
            block_name_prefix: rbd_data.39cf34833fff
            format: 2
            features: layering
            op_features:
            flags:
            create_timestamp: Sat Jan 20 14:55:39 2024
            access_timestamp: Sat Jan 20 14:55:39 2024
            modify_timestamp: Sat Jan 20 14:55:39 2024
            parent: pool-name/demo@snap1
            overlap: 17 GiB

For the cloned image to work independently, all data from the parent snapshot has to be copied into it (flatten). Afterwards the parent and overlap lines are gone:

    root@ceph-node1:~# rbd flatten pool-name/snap2
    Image flatten: 100% complete...done.
    root@ceph-node1:~# rbd info pool-name/snap2
    rbd image 'snap2':
            size 17 GiB in 4352 objects
            order 22 (4 MiB objects)
            snapshot_count: 0
            id: 39cf34833fff
            block_name_prefix: rbd_data.39cf34833fff
            format: 2
            features: layering
            op_features:
            flags:
            create_timestamp: Sat Jan 20 14:55:39 2024
            access_timestamp: Sat Jan 20 14:55:39 2024
            modify_timestamp: Sat Jan 20 14:55:39 2024

Remove the protection and delete the snapshot:

    root@ceph-node1:~# rbd snap unprotect pool-name/demo --snap snap1
    root@ceph-node1:~# rbd snap rm pool-name/demo --snap snap1
    Removing snap: 100% complete...done.

Unmap on the client:

    [root@node1 /]# umount /ppp
    [root@node1 /]# rbd unmap pool-name/demo
    [root@node1 /]# lsblk
    NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    vdd    253:48   0   40G  0 disk
    vdb    253:16   0   40G  0 disk
    vdc    253:32   0   40G  0 disk
    vda    253:0    0  140G  0 disk
    └─vda1 253:1    0  140G  0 part /

Access Ceph from a virtual machine.

On node1, create the image:

    [root@node1 /]# rbd create pool-name/vm1-image --image-feature layering --size 10G

On the client, define a libvirt secret in secret.xml:

    <secret ephemeral='no' private='no'>
        <usage type='ceph'>
            <name>client.admin secret</name>
        </usage>
    </secret>

    virsh secret-define secret.xml
    virsh secret-list

Bind the key to the secret:

    virsh secret-set-value --secret xxx-xxx-xxx --base64 xxxxxx

Edit the domain with virsh edit xxx.xml. In KVM the virtio bus gives the highest throughput. The source name is pool/image, so it should point at the image created above:

    <disk type='network' device='disk'>
        <driver name='qemu' type='raw' />
        <auth username='admin'>
            <!-- uuid of the secret just created; get it from virsh secret-list -->
            <secret type='ceph' uuid='' />
        </auth>
        <source protocol='rbd' name='pool-name/vm1-image'>
            <host name='1.1.1.1' port='6789' />
        </source>
        <target dev='vda' bus='virtio' />
    </disk>

The change takes effect after the virtual machine is rebooted.

# Ceph file system

The metadata pool stores metadata and the data pool stores file data. 128 is the PG count; it should be a power of two and can be thought of as the number of directories. It is an argument of ceph osd pool create, not of ceph fs new; if omitted, the cluster default is used.

    $ ceph osd pool create cephfs_data 128
    $ ceph osd pool create cephfs_metadata 128
    $ ceph fs new cephfs cephfs_metadata cephfs_data
    $ ceph fs ls

    root@ceph-node1:~# ceph osd lspools
    1 .mgr
    2 pool-name
    3 cephfs_data
    4 cephfs_metadata
    root@ceph-node1:~# ceph fs ls
    name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

Mount on the client:

    [root@client ~]# mount -t ceph 10.0.0.4:6789:/ /000/ -o name=admin,secret=AQCerKZlXFkFBxAADiQXY27WFREVjjpPg8gikg==

# Object storage

Object storage is provided by ceph-radosgw; starting ceph-radosgw is all that is needed.

To change the port to 8000:

    [client.rgw.ceph-node1]
    host = ceph-node1
    rgw_frontends = "civetweb port=8000"

Test with the tools from s3tools.org.